General OCR Pipeline Tutorial

1. Introduction to the OCR pipeline

OCR (Optical Character Recognition) is a technology that converts text in images into editable text. It is widely used in document digitization, information extraction, and data processing. OCR can recognize printed text, handwritten text, and even certain types of fonts and symbols.

The General OCR pipeline solves text recognition tasks: it extracts text from images and outputs it as text. The pipeline integrates the end-to-end OCR systems PP-OCRv5 and PP-OCRv4 and supports recognition of more than 80 languages. It also includes image orientation correction and distortion correction. With this pipeline, accurate text prediction can be achieved at millisecond-level latency on CPUs, covering a wide range of applications in general, manufacturing, finance, transportation, and other sectors. The pipeline offers flexible deployment options and can be called from various programming languages on multiple hardware platforms. It also supports custom development: you can train and optimize the models on your own dataset, and the trained models can be integrated back into the pipeline seamlessly.

The General OCR pipeline includes mandatory text detection and text recognition modules, as well as optional document image orientation classification, text image correction, and text line orientation classification modules. The document image orientation classification and text image correction modules are integrated into the General OCR pipeline as a document preprocessing sub-pipeline. Each module offers multiple models; you can choose among them based on the benchmark data below.
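
The module order described above can be sketched as a list of stages. This is a plain-Python illustration, not PaddleX code: the stage names are hypothetical labels, and the three toggles are only named to resemble switches for the optional modules (check the pipeline's parameter reference for the real names). We also assume that text line orientation classification runs on each detected text line, after detection and before recognition.

```python
from typing import List


def build_stage_order(
    use_doc_orientation_classify: bool = True,
    use_doc_unwarping: bool = True,
    use_textline_orientation: bool = True,
) -> List[str]:
    """Return the order in which modules run for the given toggles.

    Stage names are hypothetical labels, not PaddleX identifiers.
    """
    stages: List[str] = []
    # Optional document preprocessing sub-pipeline, applied to the whole image.
    if use_doc_orientation_classify:
        stages.append("doc_orientation_classification")
    if use_doc_unwarping:
        stages.append("text_image_unwarping")
    # Mandatory: locate text regions.
    stages.append("text_detection")
    # Optional (assumed placement): fix upside-down line crops before recognition.
    if use_textline_orientation:
        stages.append("textline_orientation_classification")
    # Mandatory: read the text in each detected region.
    stages.append("text_recognition")
    return stages
```

With every optional module disabled, `build_stage_order(False, False, False)` returns `['text_detection', 'text_recognition']`, the minimal configuration.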

1.1 Model benchmark data

If you prioritize model accuracy, choose a high-accuracy model; if you prioritize inference speed, choose a faster model; if storage size matters, choose a smaller model.

The inference time only includes the model inference time and does not include the time for pre- or post-processing.
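
The selection rule above amounts to "take the most accurate model that fits your latency and size budgets". A minimal sketch, using figures copied from the Text Detection Module table below (the PP-OCRv3 detectors are left out because their Hmean is not given as a number). The `ModelStats` and `pick_model` names are hypothetical; like the tables, the GPU times cover model inference only.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class ModelStats:
    name: str
    hmean: float    # Detection Hmean (%)
    gpu_ms: float   # GPU inference time, normal mode (ms), model inference only
    size_mb: float  # Model storage size (MB)


# Figures copied from the Text Detection Module table in this section.
DET_MODELS: List[ModelStats] = [
    ModelStats("PP-OCRv5_server_det", 83.8, 89.55, 84.3),
    ModelStats("PP-OCRv5_mobile_det", 79.0, 10.67, 4.7),
    ModelStats("PP-OCRv4_server_det", 69.2, 127.82, 109.0),
    ModelStats("PP-OCRv4_mobile_det", 63.8, 9.87, 4.7),
]


def pick_model(
    models: List[ModelStats],
    max_gpu_ms: Optional[float] = None,
    max_size_mb: Optional[float] = None,
) -> Optional[ModelStats]:
    """Return the most accurate model within the latency and size budgets, or None."""
    candidates = [
        m
        for m in models
        if (max_gpu_ms is None or m.gpu_ms <= max_gpu_ms)
        and (max_size_mb is None or m.size_mb <= max_size_mb)
    ]
    return max(candidates, key=lambda m: m.hmean, default=None)
```

Without constraints this picks PP-OCRv5_server_det; with a 20 ms GPU budget it picks PP-OCRv5_mobile_det, which beats the similarly fast PP-OCRv4_mobile_det on Hmean.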

Document Image Orientation Classification Module (Optional):

| Model | Model Download Link | Top-1 Acc (%) | GPU Inference Time (ms) [Normal Mode / High-Performance Mode] | CPU Inference Time (ms) [Normal Mode / High-Performance Mode] | Model Storage Size (MB) | Introduction |
|---|---|---|---|---|---|---|
| PP-LCNet_x1_0_doc_ori | Inference Model/Training Model | 99.06 | 2.62 / 0.59 | 3.24 / 1.19 | 7 | A document image classification model based on PP-LCNet_x1_0, with four categories: 0 degrees, 90 degrees, 180 degrees, and 270 degrees. |
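
The four orientation classes map naturally to rotations in 90-degree steps. Below is a minimal sketch of the correction step, using a toy image stored as nested lists so that it runs without extra dependencies. The direction convention (a label of 90 meaning the content is rotated 90 degrees counter-clockwise, undone by a clockwise rotation) is an assumption, not something this document specifies. The same idea covers the text line orientation classifier, which only distinguishes 0 and 180 degrees.

```python
from typing import List

# A toy grayscale image: a list of rows of pixel values.
Image = List[List[int]]


def rotate_cw_90(img: Image) -> Image:
    """Rotate an image 90 degrees clockwise (reverse the rows, then transpose)."""
    return [list(row) for row in zip(*img[::-1])]


def correct_orientation(img: Image, predicted_angle: int) -> Image:
    """Undo a predicted rotation of 0, 90, 180, or 270 degrees.

    Assumed convention: the label is the counter-clockwise angle by which the
    content is rotated, so it is undone by rotating clockwise by the same angle.
    """
    if predicted_angle not in (0, 90, 180, 270):
        raise ValueError(f"unsupported angle: {predicted_angle}")
    for _ in range(predicted_angle // 90):
        img = rotate_cw_90(img)
    return img
```

For example, `correct_orientation([[1, 2], [3, 4]], 180)` returns `[[4, 3], [2, 1]]`.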

Text Image Correction Module (Optional):

| Model | Model Download Link | CER | GPU Inference Time (ms) [Normal Mode / High-Performance Mode] | CPU Inference Time (ms) [Normal Mode / High-Performance Mode] | Model Storage Size (MB) | Description |
|---|---|---|---|---|---|---|
| UVDoc | Inference Model/Training Model | 0.179 | 19.05 / 19.05 | - / 869.82 | 30.3 | High-accuracy text image rectification model |
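
UVDoc is scored by CER (character error rate, lower is better). The standard definition is the character-level edit distance between the recognized text and the reference, divided by the reference length: (S + D + I) / N for S substitutions, D deletions, I insertions, and N reference characters. How this benchmark obtains the recognized text from rectified images is not described here; the sketch below only illustrates the metric itself.

```python
def levenshtein(ref: str, hyp: str) -> int:
    """Minimum number of substitutions, deletions, and insertions turning ref into hyp."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(
                min(
                    prev[j] + 1,         # deletion
                    curr[j - 1] + 1,     # insertion
                    prev[j - 1] + cost,  # substitution or match
                )
            )
        prev = curr
    return prev[-1]


def cer(ref: str, hyp: str) -> float:
    """Character error rate: edit distance normalized by the reference length."""
    if not ref:
        raise ValueError("reference text must be non-empty")
    return levenshtein(ref, hyp) / len(ref)
```

For instance, `cer("abcd", "abxd")` is 0.25: one substitution over four reference characters.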

Text Line Orientation Classification Module (Optional):

| Model | Model Download Link | Top-1 Acc (%) | GPU Inference Time (ms) [Normal Mode / High-Performance Mode] | CPU Inference Time (ms) [Normal Mode / High-Performance Mode] | Model Storage Size (MB) | Introduction |
|---|---|---|---|---|---|---|
| PP-LCNet_x0_25_textline_ori | Inference Model/Training Model | 98.85 | 2.16 / 0.41 | 2.37 / 0.73 | 0.96 | Text line classification model based on PP-LCNet_x0_25, with two classes: 0 degrees and 180 degrees |
| PP-LCNet_x1_0_textline_ori | Inference Model/Training Model | 99.42 | - / - | 2.98 / 2.98 | 6.5 | Text line classification model based on PP-LCNet_x1_0, with two classes: 0 degrees and 180 degrees |

Text Detection Module:

| Model | Model Download Link | Detection Hmean (%) | GPU Inference Time (ms) [Normal Mode / High-Performance Mode] | CPU Inference Time (ms) [Normal Mode / High-Performance Mode] | Model Storage Size (MB) | Introduction |
|---|---|---|---|---|---|---|
| PP-OCRv5_server_det | Inference Model/Training Model | 83.8 | 89.55 / 70.19 | 383.15 / 383.15 | 84.3 | PP-OCRv5 server-side text detection model with higher accuracy, suitable for deployment on high-performance servers |
| PP-OCRv5_mobile_det | Inference Model/Training Model | 79.0 | 10.67 / 6.36 | 57.77 / 28.15 | 4.7 | PP-OCRv5 mobile-side text detection model with higher efficiency, suitable for deployment on edge devices |
| PP-OCRv4_server_det | Inference Model/Training Model | 69.2 | 127.82 / 98.87 | 585.95 / 489.77 | 109 | PP-OCRv4 server-side text detection model with higher accuracy, suitable for deployment on high-performance servers |
| PP-OCRv4_mobile_det | Inference Model/Training Model | 63.8 | 9.87 / 4.17 | 56.60 / 20.79 | 4.7 | PP-OCRv4 mobile-side text detection model with higher efficiency, suitable for deployment on edge devices |
| PP-OCRv3_mobile_det | Inference Model/Training Model | Accuracy comparable to PP-OCRv4_mobile_det | 9.90 / 3.60 | 41.93 / 20.76 | 2.1 | PP-OCRv3 mobile text detection model with higher efficiency, suitable for edge device deployment |
| PP-OCRv3_server_det | Inference Model/Training Model | Accuracy comparable to PP-OCRv4_server_det | 119.50 / 75.00 | 379.35 / 318.35 | 102.1 | PP-OCRv3 server text detection model with higher accuracy, suitable for deployment on high-performance servers |
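
Detection Hmean is the harmonic mean of precision and recall (the F1 score), reported above as a percentage. In the sketch below, the rule that decides whether a predicted box matches a ground-truth box (typically an overlap threshold) is assumed to have been applied already, so the inputs are simply hypothetical match counts.

```python
def hmean(precision: float, recall: float) -> float:
    """Harmonic mean (F1) of precision and recall; 0.0 when both are zero."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def detection_hmean(num_matched: int, num_predicted: int, num_gt: int) -> float:
    """Hmean from counts of matched, predicted, and ground-truth boxes (as a fraction)."""
    precision = num_matched / num_predicted if num_predicted else 0.0
    recall = num_matched / num_gt if num_gt else 0.0
    return hmean(precision, recall)
```

Because it is a harmonic mean, Hmean is pulled toward the weaker of the two: precision 1.0 with recall 0.5 gives about 0.667, not 0.75. Multiply by 100 to compare with the table.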

Text Recognition Module:

| Model | Model Download Link | Recognition Avg Accuracy (%) | GPU Inference Time (ms) [Normal Mode / High-Performance Mode] | CPU Inference Time (ms) [Normal Mode / High-Performance Mode] | Model Storage Size (MB) | Introduction |
|---|---|---|---|---|---|---|
| PP-OCRv5_server_rec | Inference Model/Training Model | 86.38 | 8.46 / 2.36 | 31.21 / 31.21 | 81 | PP-OCRv5_rec is a next-generation text recognition model. It efficiently and accurately supports four major languages with a single model: Simplified Chinese, Traditional Chinese, English, and Japanese. It handles complex text scenarios, including handwritten text, vertical text, pinyin, and rare characters. While maintaining recognition accuracy, it balances inference speed and model robustness, providing efficient and precise support for document understanding in various scenarios. |
| PP-OCRv5_mobile_rec | Inference Model/Training Model | 81.29 | 5.43 / 1.46 | 21.20 / 5.32 | 16 | Same description as PP-OCRv5_server_rec (both are PP-OCRv5_rec models). |
| PP-OCRv4_server_rec_doc | Inference Model/Training Model | 86.58 | 8.69 / 2.78 | 37.93 / 37.93 | 182 | PP-OCRv4_server_rec_doc is based on PP-OCRv4_server_rec and trained on a mix of additional Chinese document data and PP-OCR training data. It adds recognition of some Traditional Chinese characters, Japanese, and special characters, and supports more than 15,000 characters. Besides improving document-related text recognition, it also improves general text recognition. |
| PP-OCRv4_mobile_rec | Inference Model/Training Model | 78.74 | 5.26 / 1.12 | 17.48 / 3.61 | 10.5 | The lightweight recognition model of PP-OCRv4, with high inference efficiency; it can be deployed on various hardware devices, including edge devices. |
| PP-OCRv4_server_rec | Inference Model/Training Model | 85.19 | 8.75 / 2.49 | 36.93 / 36.93 | 173 | The server-side recognition model of PP-OCRv4, with high inference accuracy; it can be deployed on various types of servers. |
| en_PP-OCRv4_mobile_rec | Inference Model/Training Model | 70.39 | 4.81 / 1.23 | 17.20 / 4.18 | 7.5 | An ultra-lightweight English recognition model trained based on the PP-OCRv4 recognition model; supports recognition of English letters and numbers. |

❗ The table above lists the 6 main models supported by the text recognition module. The module supports more models in total; the complete list (31 models) is as follows:


* PP-OCRv5 Multi-Scenario Models

| Model | Model Download Link | Chinese Recognition Avg Accuracy (%) | English Recognition Avg Accuracy (%) | Traditional Chinese Recognition Avg Accuracy (%) | Japanese Recognition Avg Accuracy (%) | GPU Inference Time (ms) [Normal Mode / High-Performance Mode] | CPU Inference Time (ms) [Normal Mode / High-Performance Mode] | Model Storage Size (MB) | Description |
|---|---|---|---|---|---|---|---|---|---|
| PP-OCRv5_server_rec | Inference Model/Training Model | 86.38 | 64.70 | 93.29 | 60.35 | 8.46 / 2.36 | 31.21 / 31.21 | 81 | PP-OCRv5_rec is a next-generation text recognition model. This model efficiently and accurately supports four major languages with a single model: Simplified Chinese, Traditional Chinese, English, and Japanese. It recognizes complex text scenarios including handwritten, vertical text, pinyin, and rare characters. While maintaining recognition accuracy, it balances inference speed and model robustness, providing efficient and precise technical support for document understanding in various scenarios. |
| PP-OCRv5_mobile_rec | Inference Model/Training Model | 81.29 | 66.00 | 83.55 | 54.65 | 5.43 / 1.46 | 21.20 / 5.32 | 16 | Same description as PP-OCRv5_server_rec (both are PP-OCRv5_rec models). |

* Chinese Recognition Models

| Model | Model Download Link | Recognition Avg Accuracy (%) | GPU Inference Time (ms) [Normal Mode / High-Performance Mode] | CPU Inference Time (ms) [Normal Mode / High-Performance Mode] | Model Storage Size (MB) | Introduction |
|---|---|---|---|---|---|---|
| PP-OCRv4_server_rec_doc | Inference Model/Training Model | 86.58 | 8.69 / 2.78 | 37.93 / 37.93 | 182 | PP-OCRv4_server_rec_doc is based on PP-OCRv4_server_rec and trained on a mix of additional Chinese document data and PP-OCR training data. It adds recognition of some Traditional Chinese characters, Japanese, and special characters, and can recognize more than 15,000 characters. Besides improving document-related text recognition, it also improves general text recognition. |
| PP-OCRv4_mobile_rec | Inference Model/Training Model | 78.74 | 5.26 / 1.12 | 17.48 / 3.61 | 10.5 | The lightweight recognition model of PP-OCRv4, with high inference efficiency; it can be deployed on various hardware devices, including edge devices. |
| PP-OCRv4_server_rec | Inference Model/Training Model | 85.19 | 8.75 / 2.49 | 36.93 / 36.93 | 173 | The server-side recognition model of PP-OCRv4, with high inference accuracy; it can be deployed on various types of servers. |
| PP-OCRv3_mobile_rec | Inference Model/Training Model | 72.96 | 3.89 / 1.16 | 8.72 / 3.56 | 10.3 | The lightweight recognition model of PP-OCRv3, designed for high inference efficiency; it can be deployed on a variety of hardware devices, including edge devices. |

| Model | Model Download Link | Recognition Avg Accuracy (%) | GPU Inference Time (ms) [Normal Mode / High-Performance Mode] | CPU Inference Time (ms) [Normal Mode / High-Performance Mode] | Model Storage Size (MB) | Introduction |
|---|---|---|---|---|---|---|
| ch_SVTRv2_rec | Inference Model/Training Model | 68.81 | 10.38 / 8.31 | 66.52 / 30.83 | 80.5 | SVTRv2 is a server-side text recognition model developed by the OpenOCR team of the Visual and Learning Laboratory (FVL) at Fudan University. It won first prize in Task One (OCR End-to-End Recognition) of the PaddleOCR Algorithm Model Challenge. Its end-to-end recognition accuracy on leaderboard A is 6% higher than that of PP-OCRv4. |

| Model | Model Download Link | Recognition Avg Accuracy (%) | GPU Inference Time (ms) [Normal Mode / High-Performance Mode] | CPU Inference Time (ms) [Normal Mode / High-Performance Mode] | Model Storage Size (MB) | Introduction |
|---|---|---|---|---|---|---|
| ch_RepSVTR_rec | Inference Model/Training Model | 65.07 | 6.29 / 1.57 | 20.64 / 5.40 | 48.8 | RepSVTR is a mobile-side text recognition model based on SVTRv2. It won first prize in Task One (OCR End-to-End Recognition) of the PaddleOCR Algorithm Model Challenge. Its end-to-end recognition accuracy on leaderboard B is 2.5% higher than that of PP-OCRv4, at the same inference speed. |

* English Recognition Models

| Model | Model Download Link | Recognition Avg Accuracy (%) | GPU Inference Time (ms) [Normal Mode / High-Performance Mode] | CPU Inference Time (ms) [Normal Mode / High-Performance Mode] | Model Storage Size (MB) | Introduction |
|---|---|---|---|---|---|---|
| en_PP-OCRv5_mobile_rec | Inference Model/Training Model | 85.25 | - | - | 7.5 | An ultra-lightweight English recognition model trained based on the PP-OCRv5 recognition model; supports recognition of English letters and numbers. |
| en_PP-OCRv4_mobile_rec | Inference Model/Training Model | 70.39 | 4.81 / 1.23 | 17.20 / 4.18 | 7.5 | An ultra-lightweight English recognition model trained based on the PP-OCRv4 recognition model; supports recognition of English letters and numbers. |
| en_PP-OCRv3_mobile_rec | Inference Model/Training Model | 70.69 | 3.56 / 0.78 | 8.44 / 5.78 | 17.3 | An ultra-lightweight English recognition model trained based on the PP-OCRv3 recognition model; supports recognition of English letters and numbers. |

* Multilingual Recognition Models

| Model | Model Download Link | Recognition Avg Accuracy (%) | GPU Inference Time (ms) [Normal Mode / High-Performance Mode] | CPU Inference Time (ms) [Normal Mode / High-Performance Mode] | Model Storage Size (MB) | Introduction |
|---|---|---|---|---|---|---|
| korean_PP-OCRv5_mobile_rec | Inference Model/Training Model | 90.45 | 5.43 / 1.46 | 21.20 / 5.32 | 14 | An ultra-lightweight Korean text recognition model trained based on the PP-OCRv5 recognition framework. Supports recognition of Korean, English, and numeric text. |
| latin_PP-OCRv5_mobile_rec | Inference Model/Training Model | 84.7 | 5.43 / 1.46 | 21.20 / 5.32 | 14 | A Latin-script text recognition model trained based on the PP-OCRv5 recognition framework. Supports recognition of most Latin-alphabet languages and numeric text. |
| eslav_PP-OCRv5_mobile_rec | Inference Model/Training Model | 85.8 | 5.43 / 1.46 | 21.20 / 5.32 | 14 | An East Slavic language recognition model trained based on the PP-OCRv5 recognition framework. Supports recognition of East Slavic languages, English, and numeric text. |
| th_PP-OCRv5_mobile_rec | Inference Model/Training Model | 82.68 | - | - | 7.5 | A Thai recognition model trained based on the PP-OCRv5 recognition model. Supports recognition of Thai, English, and numbers. |
| el_PP-OCRv5_mobile_rec | Inference Model/Training Model | 89.28 | - | - | 7.5 | A Greek recognition model trained based on the PP-OCRv5 recognition model. Supports recognition of Greek, English, and numbers. |
| arabic_PP-OCRv5_mobile_rec | Inference Model/Training Model | 81.27 | - | - | 7.6 | An ultra-lightweight Arabic script recognition model trained based on the PP-OCRv5 recognition model. Supports recognition of Arabic letters and numbers. |
| cyrillic_PP-OCRv5_mobile_rec | Inference Model/Training Model | 80.27 | - | - | 7.7 | An ultra-lightweight Cyrillic script recognition model trained based on the PP-OCRv5 recognition model. Supports recognition of Cyrillic letters and numbers. |
| devanagari_PP-OCRv5_mobile_rec | Inference Model/Training Model | 84.96 | - | - | 7.5 | An ultra-lightweight Devanagari script recognition model trained based on the PP-OCRv5 recognition model. Supports recognition of Hindi, Sanskrit, and other Devanagari-script languages, as well as numbers. |
| te_PP-OCRv5_mobile_rec | Inference Model/Training Model | 87.65 | - | - | 7.5 | An ultra-lightweight Telugu script recognition model trained based on the PP-OCRv5 recognition model. Supports recognition of Telugu script and numbers. |
| ta_PP-OCRv5_mobile_rec | Inference Model/Training Model | 94.2 | - | - | 7.5 | An ultra-lightweight Tamil script recognition model trained based on the PP-OCRv5 recognition model. Supports recognition of Tamil script and numbers. |
| korean_PP-OCRv3_mobile_rec | Inference Model/Training Model | 60.21 | 3.73 / 0.98 | 8.76 / 2.91 | 9.6 | An ultra-lightweight Korean recognition model trained based on the PP-OCRv3 recognition model. Supports recognition of Korean and numbers. |
| japan_PP-OCRv3_mobile_rec | Inference Model/Training Model | 45.69 | 3.86 / 1.01 | 8.62 / 2.92 | 9.8 | An ultra-lightweight Japanese recognition model trained based on the PP-OCRv3 recognition model. Supports recognition of Japanese and numbers. |
| chinese_cht_PP-OCRv3_mobile_rec | Inference Model/Training Model | 82.06 | 3.90 / 1.16 | 9.24 / 3.18 | 10.8 | An ultra-lightweight Traditional Chinese recognition model trained based on the PP-OCRv3 recognition model. Supports recognition of Traditional Chinese and numbers. |
| te_PP-OCRv3_mobile_rec | Inference Model/Training Model | 95.88 | 3.59 / 0.81 | 8.28 / 6.21 | 8.7 | An ultra-lightweight Telugu recognition model trained based on the PP-OCRv3 recognition model. Supports recognition of Telugu and numbers. |
| ka_PP-OCRv3_mobile_rec | Inference Model/Training Model | 96.96 | 3.49 / 0.89 | 8.63 / 2.77 | 17.4 | An ultra-lightweight Kannada recognition model trained based on the PP-OCRv3 recognition model. Supports recognition of Kannada and numbers. |
| ta_PP-OCRv3_mobile_rec | Inference Model/Training Model | 76.83 | 3.49 / 0.86 | 8.35 / 3.41 | 8.7 | An ultra-lightweight Tamil recognition model trained based on the PP-OCRv3 recognition model. Supports recognition of Tamil and numbers. |
| latin_PP-OCRv3_mobile_rec | Inference Model/Training Model | 76.93 | 3.53 / 0.78 | 8.50 / 6.83 | 8.7 | An ultra-lightweight Latin recognition model trained based on the PP-OCRv3 recognition model. Supports recognition of Latin script and numbers. |
| arabic_PP-OCRv3_mobile_rec | Inference Model/Training Model | 73.55 | 3.60 / 0.83 | 8.44 / 4.69 | 17.3 | An ultra-lightweight Arabic script recognition model trained based on the PP-OCRv3 recognition model. Supports recognition of Arabic script and numbers. |
| cyrillic_PP-OCRv3_mobile_rec | Inference Model/Training Model | 94.28 | 3.56 / 0.79 | 8.22 / 2.76 | 8.7 | An ultra-lightweight Cyrillic alphabet recognition model trained based on the PP-OCRv3 recognition model. Supports recognition of Cyrillic letters and numbers. |
| devanagari_PP-OCRv3_mobile_rec | Inference Model/Training Model | 96.44 | 3.60 / 0.78 | 6.95 / 2.87 | 7.9 | An ultra-lightweight Devanagari script recognition model trained based on the PP-OCRv3 recognition model. Supports recognition of Devanagari script and numbers. |


Test Environment Description:

- Performance Test Environment
  - Test Dataset:
    - Document Image Orientation Classification Module: An in-house PaddleX dataset covering multiple scenarios such as ID cards and documents, containing 1,000 images.
    - Text Image Rectification Model: DocUNet
    - Text Detection Model: An in-house PaddleOCR Chinese dataset covering multiple scenarios such as street scenes, web images, documents, and handwriting, with 500 images for detection.
    - Chinese Recognition Model: An in-house PaddleOCR Chinese dataset covering multiple scenarios such as street scenes, web images, documents, and handwriting, with 11,000 images for text recognition.
    - ch_SVTRv2_rec: Evaluation set A of the "OCR End-to-End Recognition Task" in the PaddleOCR Algorithm Model Challenge.
    - ch_RepSVTR_rec: Evaluation set B of the "OCR End-to-End Recognition Task" in the PaddleOCR Algorithm Model Challenge.
    - English Recognition Model: An in-house PaddleX English dataset.
    - Multilingual Recognition Model: An in-house PaddleX multilingual dataset.
    - Text Line Orientation Classification Model: An in-house PaddleX dataset covering various scenarios such as ID cards and documents, containing 1,000 images.
  - Hardware Configuration:
    - GPU: NVIDIA Tesla T4
    - CPU: Intel Xeon Gold 6271C @ 2.60GHz
  - Software Environment:
    - Ubuntu 20.04 / CUDA 11.8 / cuDNN 8.9 / TensorRT 8.6.1.6
    - paddlepaddle 3.0.0 / paddlex 3.0.3
- Inference Mode Description

| Mode | GPU Configuration | CPU Configuration | Acceleration Technology Combination |
|---|---|---|---|
| Normal Mode | FP32 Precision / No TRT Acceleration | FP32 Precision / 8 Threads | PaddleInference |
| High-Performance Mode | Optimal combination of pre-selected precision types and acceleration strategies | FP32 Precision / 8 Threads | Pre-selected optimal backend (Paddle/OpenVINO/TRT, etc.) |
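
Because each timing cell in the module tables pairs normal mode with high-performance mode, the gain from high-performance mode is simply the ratio of the two numbers. A small sketch using a few pairs copied from the tables above; the labels in `TIMINGS` are just descriptive keys chosen for this example.

```python
# (normal_ms, high_perf_ms) pairs copied from the module tables in this document.
TIMINGS = {
    "PP-OCRv5_server_det (GPU)": (89.55, 70.19),
    "PP-OCRv5_mobile_det (GPU)": (10.67, 6.36),
    "PP-OCRv5_mobile_det (CPU)": (57.77, 28.15),
    "PP-OCRv5_server_rec (CPU)": (31.21, 31.21),
}


def speedup(normal_ms: float, high_perf_ms: float) -> float:
    """How many times faster high-performance mode is than normal mode."""
    if high_perf_ms <= 0:
        raise ValueError("high-performance time must be positive")
    return normal_ms / high_perf_ms


for name, (normal, high_perf) in TIMINGS.items():
    print(f"{name}: {speedup(normal, high_perf):.2f}x")
```

Running it prints 1.28x, 1.68x, 2.05x, and 1.00x. Identical pairs such as 31.21 / 31.21 mean high-performance mode brought no measured gain for that model on that device.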

1.2 Pipeline benchmark data


| Pipeline configuration | Hardware | Avg. inference time (s) | Peak CPU utilization (%) | Avg. CPU utilization (%) | Peak host memory (MB) | Avg. host memory (MB) | Peak GPU utilization (%) | Avg. GPU utilization (%) | Peak device memory (MB) | Avg. device memory (MB) |
|---|---|---|---|---|---|---|---|---|---|---|
| OCR-default | Intel 6271C | 3.97 | 1015.40 | 917.61 | 4381.22 | 3457.78 | N/A | N/A | N/A | N/A |
| | Intel 8350C | 3.79 | 1022.50 | 921.68 | 4675.46 | 3585.96 | N/A | N/A | N/A | N/A |
| | Intel 8350C + A100 | 0.65 | 113.50 | 102.48 | 2240.15 | 1868.44 | 47 | 19.60 | 7612.00 | 6634.15 |
| | Intel 6271C + V100 | 1.06 | 114.90 | 103.05 | 2142.66 | 1791.43 | 72 | 20.01 | 5516.00 | 4812.81 |
| | Intel 8563C + H20 | 0.65 | 108.90 | 101.95 | 2456.05 | 2080.26 | 100 | 36.52 | 6736.00 | 6017.05 |
| | Intel 8350C + A10 | 0.74 | 115.90 | 102.22 | 2352.88 | 1993.39 | 100 | 25.56 | 6762.00 | 6039.93 |
| | Intel 6271C + T4 | 1.17 | 107.10 | 101.78 | 2361.88 | 1986.61 | 100 | 51.11 | 5282.00 | 4585.10 |
| OCR-nopp-mobile | Intel 6271C | 1.39 | 1019.60 | 1007.69 | 2178.12 | 1873.73 | N/A | N/A | N/A | N/A |
| | Intel 8350C | 1.15 | 1015.70 | 1006.87 | 2184.91 | 1916.85 | N/A | N/A | N/A | N/A |
| | Hygon 7490 + P800 | 0.35 | 110.80 | 103.77 | 2022.49 | 1808.11 | N/A | N/A | N/A | N/A |
| | Intel 8350C + A100 | 0.27 | 110.90 | 103.80 | 1762.36 | 1525.04 | 31 | 19.30 | 4328.00 | 3356.30 |
| | Intel 6271C + V100 | 0.55 | 113.80 | 103.68 | 1728.02 | 1470.52 | 38 | 18.59 | 4198.00 | 3199.12 |
| | Intel 8563C + H20 | 0.22 | 111.90 | 103.99 | 2073.88 | 1876.14 | 32 | 20.25 | 4386.00 | 3435.86 |
| | Intel 8350C + A10 | 0.31 | 119.90 | 104.24 | 2037.38 | 1771.06 | 52 | 32.74 | 3446.00 | 2733.21 |
| | M4 | 6.51 | 147.30 | 106.24 | 3550.58 | 3236.75 | N/A | N/A | N/A | N/A |
| | Intel 6271C + T4 | 0.46 | 111.90 | 103.11 | 2035.38 | 1742.39 | 65 | 46.77 | 3968.00 | 2991.91 |
| OCR-nopp-server | Intel 6271C | 3.00 | 1016.00 | 1004.87 | 4445.46 | 3179.86 | N/A | N/A | N/A | N/A |
| | Intel 8350C | 3.23 | 1010.70 | 1002.63 | 4175.39 | 3137.58 | N/A | N/A | N/A | N/A |
| | Intel 8350C + A100 | 0.34 | 110.90 | 103.30 | 1904.99 | 1591.10 | 57 | 32.29 | 7494.00 | 6551.47 |
| | Intel 6271C + V100 | 0.69 | 108.90 | 102.95 | 1808.30 | 1568.64 | 72 | 35.30 | 5410.00 | 4741.18 |
| | Intel 8563C + H20 | 0.38 | 109.40 | 102.34 | 2100.00 | 1863.73 | 100 | 50.18 | 6614.00 | 5926.51 |
| | Intel 8350C + A10 | 0.41 | 109.00 | 103.18 | 2055.21 | 1845.14 | 100 | 47.15 | 6654.00 | 5951.22 |
| | Intel 6271C + T4 | 0.82 | 104.40 | 101.73 | 1906.88 | 1689.69 | 100 | 76.41 | 5178.00 | 4502.64 |
| OCR-nopp-min736-mobile | Intel 6271C | 1.41 | 1020.10 | 1008.14 | 2184.16 | 1911.86 | N/A | N/A | N/A | N/A |
| | Intel 8350C | 1.20 | 1015.70 | 1007.08 | 2254.04 | 1935.18 | N/A | N/A | N/A | N/A |
| | Hygon 7490 + P800 | 0.36 | 112.90 | 104.29 | 2174.58 | 1827.67 | N/A | N/A | N/A | N/A |
| | Intel 8350C + A100 | 0.27 | 113.90 | 104.48 | 1717.55 | 1529.77 | 30 | 19.54 | 4328.00 | 3388.44 |
| | Intel 6271C + V100 | 0.57 | 118.80 | 104.45 | 1693.10 | 1470.74 | 40 | 19.83 | 4198.00 | 3206.91 |
| | Intel 8563C + H20 | 0.22 | 113.40 | 104.66 | 2037.13 | 1797.10 | 31 | 20.64 | 4384.00 | 3427.91 |
| | Intel 8350C + A10 | 0.31 | 119.30 | 106.05 | 1879.15 | 1732.39 | 49 | 30.40 | 3446.00 | 2751.08 |
| | M4 | 6.39 | 124.90 | 107.16 | 3578.98 | 3209.90 | N/A | N/A | N/A | N/A |
| | Intel 6271C + T4 | 0.47 | 109.60 | 103.26 | 1961.40 | 1742.95 | 60 | 44.26 | 3968.00 | 3002.81 |
| OCR-nopp-min736-server | Intel 6271C | 3.26 | 1068.50 | 1004.96 | 4582.52 | 3135.68 | N/A | N/A | N/A | N/A |
| | Intel 8350C | 3.52 | 1010.70 | 1002.33 | 4723.23 | 3209.27 | N/A | N/A | N/A | N/A |
| | Intel 8350C + A100 | 0.35 | 108.90 | 103.94 | 1703.65 | 1485.50 | 60 | 35.54 | 7492.00 | 6576.97 |
| | Intel 6271C + V100 | 0.71 | 110.80 | 103.54 | 1800.06 | 1559.28 | 78 | 36.65 | 5410.00 | 4741.55 |
| | Intel 8563C + H20 | 0.40 | 110.20 | 102.75 | 2012.64 | 1843.45 | 100 | 55.74 | 6614.00 | 5940.44 |
| | Intel 8350C + A10 | 0.44 | 114.90 | 103.87 | 2002.72 | 1773.17 | 100 | 49.28 | 6654.00 | 5980.68 |
| | Intel 6271C + T4 | 0.89 | 105.00 | 101.91 | 2149.31 | 1795.35 | 100 | 76.39 | 5176.00 | 4528.77 |
| OCR-nopp-max640-mobile | Intel 6271C | 1.00 | 1033.70 | 1005.95 | 2021.88 | 1743.27 | N/A | N/A | N/A | N/A |
| | Intel 8350C | 0.88 | 1043.60 | 1006.77 | 1980.82 | 1724.51 | N/A | N/A | N/A | N/A |
| | Hygon 7490 + P800 | 0.28 | 125.70 | 101.56 | 1962.27 | 1782.68 | N/A | N/A | N/A | N/A |
| | Intel 8350C + A100 | 0.21 | 122.50 | 101.87 | 1772.39 | 1569.55 | 29 | 18.74 | 2360.00 | 2039.07 |
| | Intel 6271C + V100 | 0.43 | 133.80 | 101.82 | 1636.93 | 1464.10 | 37 | 20.94 | 2386.00 | 2055.30 |
| | Intel 8563C + H20 | 0.18 | 119.90 | 102.12 | 2119.93 | 1889.49 | 29 | 20.92 | 2636.00 | 2321.11 |
| | Intel 8350C + A10 | 0.24 | 126.80 | 101.78 | 1905.14 | 1739.93 | 48 | 30.71 | 2232.00 | 1911.18 |
| | M4 | 7.08 | 137.80 | 104.83 | 2931.08 | 2658.25 | N/A | N/A | N/A | N/A |
| | Intel 6271C + T4 | 0.36 | 124.80 | 101.70 | 1983.21 | 1729.43 | 61 | 46.10 | 2162.00 | 1836.63 |
| OCR-nopp-max960-mobile | Intel 6271C | 1.21 | 1020.00 | 1008.49 | 2200.30 | 1800.74 | N/A | N/A | N/A | N/A |
| | Intel 8350C | 1.01 | 1024.10 | 1007.32 | 2038.80 | 1800.05 | N/A | N/A | N/A | N/A |
| | Hygon 7490 + P800 | 0.32 | 107.50 | 102.00 | 2001.21 | 1799.01 | N/A | N/A | N/A | N/A |
| | Intel 8350C + A100 | 0.23 | 107.70 | 102.33 | 1727.89 | 1490.18 | 30 | 20.19 | 2646.00 | 2385.40 |
| | Intel 6271C + V100 | 0.49 | 109.90 | 102.26 | 1726.01 | 1504.90 | 38 | 20.11 | 2498.00 | 2227.73 |
| | Intel 8563C + H20 | 0.20 | 109.90 | 102.52 | 1959.46 | 1798.35 | 28 | 19.38 | 2712.00 | 2450.10 |
| | Intel 8350C + A10 | 0.27 | 102.90 | 101.19 | 1938.48 | 1741.19 | 47 | 29.27 | 3344.00 | 2585.02 |
| | M4 | 5.44 | 122.10 | 105.91 | 3094.72 | 2686.52 | N/A | N/A | N/A | N/A |
| | Intel 6271C + T4 | 0.41 | 106.00 | 101.81 | 1859.88 | 1722.62 | 68 | 47.05 | 2264.00 | 2001.07 |
| OCR-nopp-max640-server | Intel 6271C | 2.16 | 1026.30 | 1005.10 | 3467.93 | 3074.06 | N/A | N/A | N/A | N/A |
| | Intel 8350C | 2.30 | 1008.70 | 1003.32 | 3435.54 | 3042.62 | N/A | N/A | N/A | N/A |
| | Hygon 7490 + P800 | 0.35 | 104.70 | 101.27 | 1948.85 | 1779.77 | N/A | N/A | N/A | N/A |
| | Intel 8350C + A100 | 0.25 | 104.90 | 101.42 | 1833.93 | 1560.71 | 41 | 27.61 | 4480.00 | 3955.14 |
| | Intel 6271C + V100 | 0.56 | 106.20 | 101.47 | 1669.73 | 1500.87 | 58 | 31.78 | 3160.00 | 2838.78 |
| | Intel 8563C + H20 | 0.23 | 109.40 | 101.45 | 1968.77 | 1800.81 | 58 | 30.81 | 2602.00 | 2588.77 |
| | Intel 8350C + A10 | 0.30 | 106.10 | 101.55 | 2027.13 | 1749.07 | 69 | 39.10 | 3318.00 | 2795.54 |
| | M4 | 7.26 | 133.90 | 104.48 | 5473.38 | 3472.28 | N/A | N/A | N/A | N/A |
| | Intel 6271C + T4 | 0.58 | 103.90 | 100.86 | 1884.23 | 1714.48 | 84 | 63.50 | 2852.00 | 2540.37 |
| OCR-nopp-max960-server | Intel 6271C | 2.53 | 1014.50 | 1005.22 | 3625.57 | 3151.73 | N/A | N/A | N/A | N/A |
| | Intel 8350C | 2.66 | 1010.60 | 1003.39 | 3580.64 | 3197.09 | N/A | N/A | N/A | N/A |
| | Hygon 7490 + P800 | 0.40 | 105.90 | 101.76 | 2040.65 | 1810.97 | N/A | N/A | N/A | N/A |
| | Intel 8350C + A100 | 0.29 | 108.90 | 102.12 | 1821.03 | 1620.02 | 44 | 30.38 | 4290.00 | 2928.79 |
| | Intel 6271C + V100 | 0.60 | 109.90 | 101.98 | 1797.75 | 1544.96 | 61 | 32.48 | 2936.00 | 2117.71 |
| | Intel 8563C + H20 | 0.28 | 108.80 | 101.92 | 2016.22 | 1811.74 | 73 | 41.82 | 2636.00 | 2241.23 |
| | Intel 8350C + A10 | 0.34 | 111.00 | 102.75 | 1964.21 | 1750.21 | 68 | 41.25 | 2722.00 | 2293.74 |
| | M4 | 6.28 | 129.10 | 103.74 | 7780.70 | 3571.92 | N/A | N/A | N/A | N/A |
| | Intel 6271C + T4 | 0.67 | 116.90 | 101.33 | 1941.09 | 1693.39 | 88 | 65.48 | 2714.00 | 1923.06 |
| OCR-nopp-min1280-server | Intel 6271C | 4.13 | 1043.40 | 1005.45 | 5993.70 | 3454.00 | N/A | N/A | N/A | N/A |
| | Intel 8350C | 4.46 | 1011.70 | 996.72 | 5633.51 | 3489.79 | N/A | N/A | N/A | N/A |
| | Intel 8350C + A100 | 0.42 | 113.90 | 106.08 | 1747.88 | 1546.18 | 85 | 43.73 | 13558.00 | 11297.98 |
| | Intel 6271C + V100 | 0.82 | 116.80 | 105.18 | 1873.38 | 1609.55 | 100 | 39.57 | 10376.00 | 8427.30 |
| | Intel 8563C + H20 | 0.55 | 114.80 | 103.14 | 2036.36 | 1864.45 | 100 | 69.67 | 13224.00 | 11411.31 |
| | Intel 8350C + A10 | 0.55 | 105.90 | 101.86 | 1931.35 | 1764.44 | 100 | 56.16 | 12418.00 | 10510.77 |
| | Intel 6271C + T4 | 1.13 | 105.90 | 102.35 | 2066.73 | 1787.78 | 100 | 83.50 | 10142.00 | 8338.80 |
| OCR-nopp-min1280-mobile | Intel 6271C | 1.59 | 1019.90 | 1008.39 | 2366.86 | 1992.03 | N/A | N/A | N/A | N/A |
| | Intel 8350C | 1.29 | 1017.70 | 1007.28 | 2501.24 | 2059.99 | N/A | N/A | N/A | N/A |
| | Hygon 7490 + P800 | 0.43 | 120.90 | 107.02 | 2108.87 | 1821.91 | N/A | N/A | N/A | N/A |
| | Intel 8350C + A100 | 0.29 | 117.90 | 107.19 | 1847.97 | 1570.89 | 31 | 18.98 | 3746.00 | 3321.86 |
| | Intel 6271C + V100 | 0.61 | 122.80 | 107.07 | 1789.25 | 1542.56 | 39 | 20.52 | 4058.00 | 3487.46 |
| | Intel 8563C + H20 | 0.24 | 116.80 | 106.80 | 2092.63 | 1882.77 | 28 | 18.67 | 3902.00 | 3444.00 |
| | Intel 8350C + A10 | 0.34 | 125.80 | 106.79 | 1959.45 | 1783.97 | 49 | 32.66 | 3532.00 | 3094.29 |
| | M4 | 6.64 | 139.40 | 107.63 | 4283.97 | 3112.59 | N/A | N/A | N/A | N/A |
| | Intel 6271C + T4 | 0.51 | 116.90 | 105.06 | 1927.22 | 1675.34 | 68 | 45.78 | 3828.00 | 3283.78 |

| Pipeline configuration | Description |
|---|---|
| OCR-default | Default configuration |
| OCR-nopp-mobile | Based on the default configuration, document image preprocessing is disabled and mobile det and rec models are used |
| OCR-nopp-server | Based on the default configuration, document image preprocessing is disabled |
| OCR-nopp-min736-mobile | Based on the default configuration, document image preprocessing is disabled, det model input resizing strategy is set to min+736, and mobile det and rec models are used |
| OCR-nopp-min736-server | Based on the default configuration, document image preprocessing is disabled, and the det model input resizing strategy is set to min+736 |
| OCR-nopp-max640-mobile | Based on the default configuration, document image preprocessing is disabled, det model input resizing strategy is set to max+640, and mobile det and rec models are used |
| OCR-nopp-max960-mobile | Based on the default configuration, document image preprocessing is disabled, det model input resizing strategy is set to max+960, and mobile det and rec models are used |
| OCR-nopp-max640-server | Based on the default configuration, document image preprocessing is disabled, and the det model input resizing strategy is set to max+640 |
| OCR-nopp-max960-server | Based on the default configuration, document image preprocessing is disabled, and the det model input resizing strategy is set to max+960 |
| OCR-nopp-min1280-server | Based on the default configuration, document image preprocessing is disabled, and the det model input resizing strategy is set to min+1280 |
| OCR-nopp-min1280-mobile | Based on the default configuration, document image preprocessing is disabled, det model input resizing strategy is set to min+1280, and mobile det and rec models are used |
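
The configuration names encode the detector's input resizing strategy, such as min+736 or max+640. We read these, as an assumption modeled on PaddleOCR's detection preprocessing, as a limit type plus a side length: `max` shrinks the image so its longer side is at most the limit, `min` enlarges it so its shorter side is at least the limit, and both sides are then rounded to a multiple of 32. The hypothetical `det_resize_shape` below computes the resulting input size; it omits any additional caps the real implementation may apply.

```python
from typing import Tuple


def det_resize_shape(
    h: int, w: int, limit_side_len: int, limit_type: str, stride: int = 32
) -> Tuple[int, int]:
    """Compute the detector input size (height, width) for an h x w image.

    Assumed semantics:
      - "max": scale down so the longer side is at most limit_side_len.
      - "min": scale up so the shorter side is at least limit_side_len.
    Each side is then rounded to the nearest multiple of `stride` (at least `stride`).
    """
    if limit_type == "max":
        ratio = limit_side_len / max(h, w) if max(h, w) > limit_side_len else 1.0
    elif limit_type == "min":
        ratio = limit_side_len / min(h, w) if min(h, w) < limit_side_len else 1.0
    else:
        raise ValueError(f"unknown limit_type: {limit_type}")
    new_h = max(int(round(h * ratio / stride) * stride), stride)
    new_w = max(int(round(w * ratio / stride) * stride), stride)
    return new_h, new_w
```

Under these assumptions a 500 x 600 image becomes 736 x 896 with min+736 but stays close to its original size (512 x 608) with max+640, while a 1000 x 2000 image is shrunk to 320 x 640 by max+640.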

- Test environment:
    - PaddlePaddle 3.1.0, CUDA 11.8, cuDNN 8.9
    - PaddleX @ develop (f1eb28e)
    - Docker image: ccr-2vdh3abv-pub.cnc.bj.baidubce.com/paddlepaddle/paddle:3.1.0-gpu-cuda11.8-cudnn8.9
- Test data:
    - 200 images from document and general scenarios.
- Test strategy:
    - Warm up with 20 samples, then run the full dataset once for performance testing.
- Note:
    - Device memory data was not collected for NPU and XPU, so the corresponding entries in the table are marked as N/A.
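The test strategy above (an untimed warm-up, then one timed pass over the data) can be sketched as follows. `benchmark` is a hypothetical helper, not part of PaddleX. It times whatever callable it is given, including pre- and post-processing, so its numbers are end-to-end latencies rather than the model-only inference times reported in the tables.

```python
import time
from statistics import mean
from typing import Any, Callable, Dict, Sequence


def benchmark(predict_fn: Callable[[Any], Any], samples: Sequence[Any], warmup: int = 20) -> Dict[str, float]:
    """Run `warmup` untimed calls, then time one pass over all samples."""
    if not samples:
        raise ValueError("samples must not be empty")
    # Warm-up calls are executed but not timed; first calls often include
    # one-off costs such as model loading or memory allocation.
    # Samples are cycled if there are fewer than `warmup` of them.
    for i in range(warmup):
        predict_fn(samples[i % len(samples)])
    latencies = []
    for sample in samples:
        start = time.perf_counter()
        predict_fn(sample)
        latencies.append(time.perf_counter() - start)
    return {
        "num_samples": len(samples),
        "mean_latency_s": mean(latencies),
        "total_s": sum(latencies),
    }
```

Because `predict()` returns a generator, the callable must consume it or no inference actually runs, for example `benchmark(lambda p: list(pipeline.predict(p)), image_paths)`. Here `image_paths` is your own list of test images.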

2. Quick Start¶

All model pipelines provided by PaddleX can be quickly experienced. You can experience the effect of the general OCR pipeline on the community platform, or you can use the command line or Python locally to experience the effect of the general OCR pipeline.

2.1 Online Experience¶

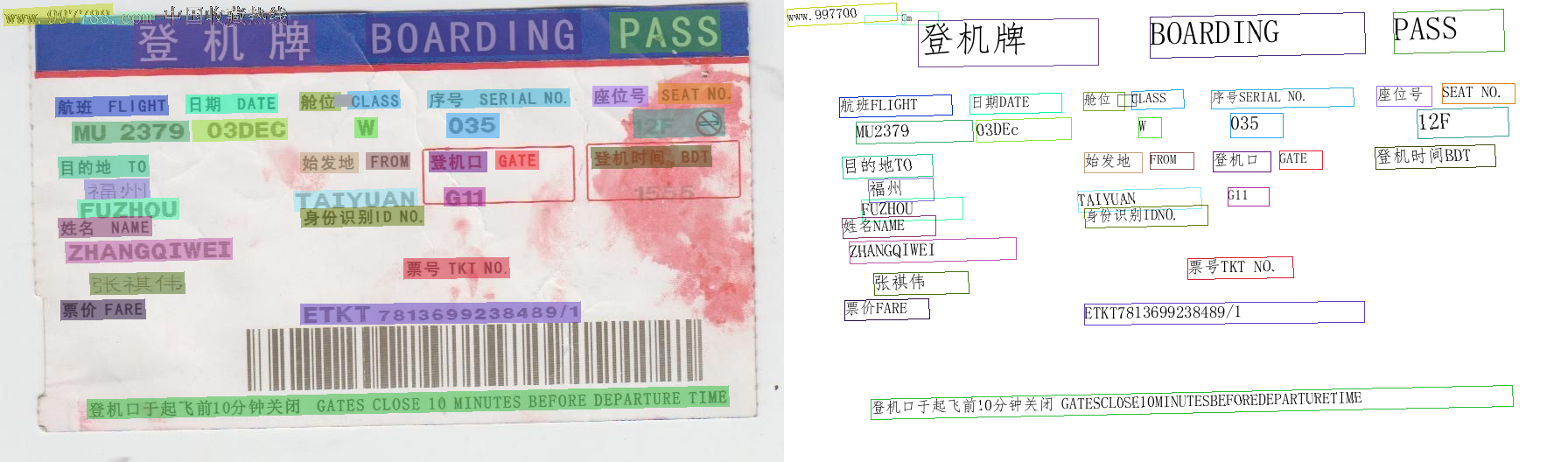

You can experience the general OCR pipeline online by recognizing the demo images provided by the official platform, for example:

If you are satisfied with the performance of the pipeline, you can directly integrate and deploy it. You can choose to download the deployment package from the cloud, or refer to the methods in Section 2.2 Local Experience for local deployment. If you are not satisfied with the effect, you can fine-tune the models in the pipeline using your private data. If you have local hardware resources for training, you can start training directly on your local machine; if not, the Star River Zero-Code platform provides a one-click training service. You don't need to write any code—just upload your data and start the training task with one click.

2.2 Local Experience

❗ Before using the general OCR pipeline locally, please make sure you have installed the PaddleX wheel package by following the PaddleX Installation Guide. If you wish to install dependencies selectively, please refer to the relevant instructions in the installation guide. The dependency group corresponding to this pipeline is `ocr`.

2.2.1 Command Line Experience

- You can quickly try the OCR pipeline with a single command. Use the test image, and replace the value of `--input` with its local path to run prediction:

```bash
paddlex --pipeline OCR \
    --input general_ocr_002.png \
    --use_doc_orientation_classify False \
    --use_doc_unwarping False \
    --use_textline_orientation False \
    --save_path ./output \
    --device gpu:0
```

Note: By default, the official models are downloaded from HuggingFace first. PaddleX also supports specifying the preferred model source through the environment variable PADDLE_PDX_MODEL_SOURCE. The supported values are huggingface, aistudio, bos, and modelscope. For example, to prioritize bos, set PADDLE_PDX_MODEL_SOURCE="bos".
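When running from Python rather than the shell, the same environment variable can be set for the current process. The sketch below validates the value against the four sources listed above. It sets the variable before `paddlex` is imported; this is a conservative assumption, since the document does not say when PaddleX reads the variable. `set_model_source` is a hypothetical helper.

```python
import os

# Values listed in the note above.
SUPPORTED_MODEL_SOURCES = ("huggingface", "aistudio", "bos", "modelscope")


def set_model_source(source: str) -> None:
    """Set PADDLE_PDX_MODEL_SOURCE for this process after validating it.

    Call before importing paddlex (assumption: this guarantees the value
    is visible whenever PaddleX reads it).
    """
    source = source.lower()
    if source not in SUPPORTED_MODEL_SOURCES:
        raise ValueError(f"unsupported model source {source!r}; expected one of {SUPPORTED_MODEL_SOURCES}")
    os.environ["PADDLE_PDX_MODEL_SOURCE"] = source
```

Typical usage is `set_model_source("bos")` followed by `from paddlex import create_pipeline`.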

For parameter descriptions, please refer to 2.2.2 Python Script Integration. Multiple devices can be specified at once for parallel inference; for details, please refer to the documentation on pipeline parallel inference.

After running, the results will be printed to the terminal as follows:

```
{'res': {'input_path': './general_ocr_002.png', 'page_index': None, 'model_settings': {'use_doc_preprocessor': False, 'use_textline_orientation': False}, 'dt_polys': array([[[ 3, 10],
        ...,
        [ 4, 30]],

       ...,

       [[ 99, 456],
        ...,
        [ 99, 479]]], dtype=int16), 'text_det_params': {'limit_side_len': 736, 'limit_type': 'min', 'thresh': 0.3, 'max_side_limit': 4000, 'box_thresh': 0.6, 'unclip_ratio': 1.5}, 'text_type': 'general', 'textline_orientation_angles': array([-1, ..., -1]), 'text_rec_score_thresh': 0.0, 'rec_texts': ['www.997700', '', 'Cm', '登机牌', 'BOARDING', 'PASS', 'CLASS', '序号SERIAL NO.', '座位号', 'SEAT NO.', '航班FLIGHT', '日期DATE', '舱位', '', 'W', '035', '12F', 'MU2379', '03DEc', '始发地', 'FROM', '登机口', 'GATE', '登机时间BDT', '目的地TO', '福州', 'TAIYUAN', 'G11', 'FUZHOU', '身份识别IDNO.', '姓名NAME', 'ZHANGQIWEI', '票号TKT NO.', '张祺伟', '票价FARE', 'ETKT7813699238489/1', '登机口于起飞前10分钟关闭 GATESCL0SE10MINUTESBEFOREDEPARTURETIME'], 'rec_scores': array([0.67634439, ..., 0.97416091]), 'rec_polys': array([[[ 3, 10],
        ...,
        [ 4, 30]],

       ...,

       [[ 99, 456],
        ...,
        [ 99, 479]]], dtype=int16), 'rec_boxes': array([[ 3, ..., 30],
       ...,
       [ 99, ..., 479]], dtype=int16)}}
```

The visualized OCR results are saved under `save_path`.

2.2.2 Python Script Integration

- The command line above is meant for quickly trying the pipeline and checking its results. In a project, you will usually integrate the pipeline in code, which takes only a few lines:

```python
from paddlex import create_pipeline

pipeline = create_pipeline(pipeline="OCR")

output = pipeline.predict(
    input="./general_ocr_002.png",
    use_doc_orientation_classify=False,
    use_doc_unwarping=False,
    use_textline_orientation=False,
)
for res in output:
    res.print()
    res.save_to_img(save_path="./output/")
    res.save_to_json(save_path="./output/")
```

In the above Python script, the following steps are executed:

(1) The OCR pipeline object is instantiated via create_pipeline(), with specific parameter descriptions as follows:

| Parameter | Description | Type | Default Value |
|---|---|---|---|
| pipeline | The name of the pipeline or the path to the pipeline configuration file. If it is a pipeline name, it must be a pipeline supported by PaddleX. | str | None |
| config | Specific configuration information for the pipeline. If set together with `pipeline`, it takes precedence over `pipeline`, and the pipeline name must match `pipeline`. | dict[str, Any] | None |
| device | The device used for pipeline inference. It supports specifying a specific GPU card, such as "gpu:0", a card of other hardware, such as "npu:0", or the CPU, such as "cpu". Multiple devices can be specified at once for parallel inference; for details, please refer to Pipeline Parallel Inference. | str | gpu:0 |
| use_hpip | Whether to enable the high-performance inference plugin. If set to None, the setting from the configuration file or `config` is used. | bool \| None | None |
| hpi_config | High-performance inference configuration. | dict \| None | None |

(2) The predict() method of the OCR pipeline object is called to perform inference. This method returns a generator. Below are the parameters and their descriptions for the predict() method:

| Parameter | Description | Type | Default Value |
|---|---|---|---|
| input | Data to be predicted; multiple input types are supported. Required. | Python Var \| str \| list | None |
| use_doc_orientation_classify | Whether to use the document orientation classification module. | bool \| None | None |
| use_doc_unwarping | Whether to use the document unwarping module. | bool \| None | None |
| use_textline_orientation | Whether to use the text line orientation classification module. | bool \| None | None |
| text_det_limit_side_len | The side length limit applied to the image for text detection. | int \| None | None |
| text_det_limit_type | The type of side length limit applied to the image for text detection, for example "min" or "max". | str \| None | None |
| text_det_thresh | The detection pixel threshold. Pixels in the output probability map with scores greater than this threshold are considered text pixels. | float \| None | None |
| text_det_box_thresh | The detection box threshold. A detected box is considered a text region if the average score of all pixels within it is greater than this threshold. | float \| None | None |
| text_det_unclip_ratio | The text detection expansion ratio. The larger this value, the larger the expanded area. | float \| None | None |
| text_rec_score_thresh | The text recognition score threshold. Text results with scores greater than this threshold are retained. | float \| None | None |
| return_word_box | Whether to return the position coordinates of each character. | bool \| None | None |

(3) Process the prediction results. The prediction result for each sample is of type dict and supports printing, saving as an image, and saving as a JSON file:

| Method | Description | Parameter | Parameter Type | Parameter Description | Default Value |
|---|---|---|---|---|---|
| print() | Print the result to the terminal | format_json | bool | Whether to format the output content using JSON indentation | True |
| | | indent | int | The indentation level used to beautify the output JSON data for readability. Only effective when format_json is True | 4 |
| | | ensure_ascii | bool | Whether to escape non-ASCII characters to Unicode. When True, all non-ASCII characters are escaped; False retains the original characters. Only effective when format_json is True | False |
| save_to_json() | Save the result as a JSON file | save_path | str | The file path for saving. When a directory is specified, the saved file name matches the input file name | None |
| | | indent | int | The indentation level used to beautify the output JSON data for readability | 4 |
| | | ensure_ascii | bool | Whether to escape non-ASCII characters to Unicode. When True, all non-ASCII characters are escaped; False retains the original characters | False |
| save_to_img() | Save the result as an image file | save_path | str | The file path for saving; both directory and file paths are supported | None |
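When tuning the detection and recognition parameters of `predict()` listed in step (2), it can help to validate overrides in one place before passing them on. The sketch below does this with two labeled assumptions:

- The bounds are not stated by this document. The thresholds are compared with scores or probabilities, so [0, 1] is assumed; the side length and unclip ratio are assumed to be positive.
- Parameters left as `None` are dropped, on the assumption that omitted parameters keep the pipeline's configured values.

`build_predict_kwargs` is a hypothetical helper, not a PaddleX API.

```python
from typing import Any, Dict, Optional

# Assumed valid range [0, 1] for these score/probability thresholds.
_UNIT_INTERVAL_PARAMS = ("text_det_thresh", "text_det_box_thresh", "text_rec_score_thresh")


def build_predict_kwargs(
    text_det_limit_side_len: Optional[int] = None,
    text_det_limit_type: Optional[str] = None,
    text_det_thresh: Optional[float] = None,
    text_det_box_thresh: Optional[float] = None,
    text_det_unclip_ratio: Optional[float] = None,
    text_rec_score_thresh: Optional[float] = None,
) -> Dict[str, Any]:
    """Validate detection/recognition overrides and drop the ones left as None."""
    kwargs = {
        "text_det_limit_side_len": text_det_limit_side_len,
        "text_det_limit_type": text_det_limit_type,
        "text_det_thresh": text_det_thresh,
        "text_det_box_thresh": text_det_box_thresh,
        "text_det_unclip_ratio": text_det_unclip_ratio,
        "text_rec_score_thresh": text_rec_score_thresh,
    }
    if text_det_limit_type not in (None, "min", "max"):
        raise ValueError("text_det_limit_type must be 'min' or 'max'")
    if text_det_limit_side_len is not None and text_det_limit_side_len <= 0:
        raise ValueError("text_det_limit_side_len must be positive")
    if text_det_unclip_ratio is not None and text_det_unclip_ratio <= 0:
        raise ValueError("text_det_unclip_ratio must be positive")
    for name in _UNIT_INTERVAL_PARAMS:
        value = kwargs[name]
        if value is not None and not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be in [0, 1], got {value}")
    # Omitted parameters are left out so the pipeline keeps its configured values (assumption).
    return {k: v for k, v in kwargs.items() if v is not None}
```

Usage would look like `pipeline.predict(input="./general_ocr_002.png", **build_predict_kwargs(text_det_limit_type="max", text_det_limit_side_len=960))`.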

- Calling the `print()` method prints the result to the terminal. The printed content is explained as follows:
    - `input_path`: `(str)` The input path of the image to be predicted
    - `page_index`: `(Union[int, None])` If the input is a PDF file, the current page number of the PDF; otherwise `None`
    - `model_settings`: `(Dict[str, bool])` The model parameters configured for the pipeline
        - `use_doc_preprocessor`: `(bool)` Whether the document preprocessing sub-line is enabled
        - `use_textline_orientation`: `(bool)` Whether text line orientation classification is enabled
    - `doc_preprocessor_res`: `(Dict[str, Union[str, Dict[str, bool], int]])` The output of the document preprocessing sub-line. Present only when `use_doc_preprocessor=True`
        - `input_path`: `(Union[str, None])` The image path accepted by the preprocessing sub-line; `None` when the input is a `numpy.ndarray`
        - `model_settings`: `(Dict)` The model configuration parameters of the preprocessing sub-line
            - `use_doc_orientation_classify`: `(bool)` Whether document orientation classification is enabled
            - `use_doc_unwarping`: `(bool)` Whether document unwarping is enabled
        - `angle`: `(int)` The document orientation classification result. When enabled, it takes values [0, 1, 2, 3], corresponding to [0°, 90°, 180°, 270°]; when disabled, it is -1
    - `dt_polys`: `(List[numpy.ndarray])` A list of polygon boxes for text detection. Each detection box is a numpy array of 4 vertex coordinates, with shape (4, 2) and data type int16
    - `dt_scores`: `(List[float])` A list of confidence scores for the text detection boxes
    - `text_det_params`: `(Dict[str, Union[int, float, str]])` The configuration parameters of the text detection module
        - `limit_side_len`: `(int)` The side length limit used in image preprocessing
        - `limit_type`: `(str)` How the side length limit is applied
        - `thresh`: `(float)` The confidence threshold for text pixel classification
        - `box_thresh`: `(float)` The confidence threshold for text detection boxes
        - `unclip_ratio`: `(float)` The expansion ratio for text detection boxes
    - `text_type`: `(str)` The type of text detection, currently fixed as "general"
    - `textline_orientation_angles`: `(List[int])` The text line orientation classification results. When enabled, actual angle values are returned (e.g., [0, 0, 1]); when disabled, every entry is -1 (e.g., [-1, -1, -1])
    - `text_rec_score_thresh`: `(float)` The filtering threshold for text recognition results
    - `rec_texts`: `(List[str])` A list of text recognition results, containing only texts with confidence scores above `text_rec_score_thresh`
    - `rec_scores`: `(List[float])` A list of text recognition confidence scores, filtered by `text_rec_score_thresh`
    - `rec_polys`: `(List[numpy.ndarray])` A list of text detection boxes filtered by confidence score, in the same format as `dt_polys`
    - `rec_boxes`: `(numpy.ndarray)` An array of rectangular bounding boxes for the detection boxes, with shape (n, 4) and dtype int16. Each row gives the [x_min, y_min, x_max, y_max] coordinates of a rectangle, where (x_min, y_min) is the top-left corner and (x_max, y_max) is the bottom-right corner
    - `text_word`: `(List[str])` When `return_word_box` is `True`, a list of the recognized text for each character
    - `text_word_boxes`: `(List[numpy.ndarray])` When `return_word_box` is `True`, a list of bounding box coordinates for each recognized character
- Calling the `save_to_json()` method saves the above content to the specified `save_path`. If a directory is specified, the file is saved as `save_path/{your_img_basename}_res.json`; if a file path is specified, the result is saved directly to that file. Since JSON does not support numpy arrays, `numpy.array` values are converted to lists.
- Calling the `save_to_img()` method saves the visualization results to the specified `save_path`. If a directory is specified, the file is saved as `save_path/{your_img_basename}_ocr_res_img.{your_img_extension}`; if a file path is specified, the result is saved directly to that file. (Since the pipeline usually produces multiple result images, specifying a single file path is not recommended: the images would overwrite each other and only the last one would be kept.)
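When `save_path` is a directory, the naming patterns above determine where the outputs land. The sketch below predicts those paths so that later steps can locate the files. It assumes a single-image input and reads `{your_img_basename}` as the file name without its extension. PDF inputs produce one result per page and may be named differently; they are not covered. `expected_output_paths` is a hypothetical helper, not part of PaddleX.

```python
from pathlib import Path
from typing import Dict


def expected_output_paths(input_path: str, save_path: str) -> Dict[str, Path]:
    """Paths that save_to_json() / save_to_img() are documented to write
    when save_path is a directory (single-image input assumed)."""
    src = Path(input_path)
    out_dir = Path(save_path)
    return {
        # save_path/{your_img_basename}_res.json
        "json": out_dir / f"{src.stem}_res.json",
        # save_path/{your_img_basename}_ocr_res_img.{your_img_extension}
        "ocr_res_img": out_dir / f"{src.stem}_ocr_res_img{src.suffix}",
    }
```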

The visualization images and the prediction results can also be obtained through attributes:

| Attribute | Description |
|---|---|
| json | Get the prediction results in JSON format |
| img | Get the visualization images in dict format |

- The prediction results obtained through the `json` attribute are of type `dict`, and their content is consistent with the data saved by calling the `save_to_json()` method.
- The `img` attribute returns a `dict` whose keys are `ocr_res_img` and `preprocessed_img`. The corresponding values are two `Image.Image` objects: one shows the OCR results, and the other shows the result of image preprocessing. If the image preprocessing sub-module is not used, the dictionary contains only `ocr_res_img`.
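A common next step is to turn a result into plain (text, score, box) records. The sketch below works on the dictionary returned by the `json` attribute. It assumes the layout shown in the printed output: a top-level `"res"` key holding `rec_texts`, `rec_scores`, and `rec_boxes` of equal length, with numpy arrays already converted to lists. Empty strings are skipped. `extract_lines` is a hypothetical helper.

```python
from typing import Any, Dict, List, Tuple


def extract_lines(result_json: Dict[str, Any], min_score: float = 0.0) -> List[Tuple[str, float, List[int]]]:
    """Return (text, score, [x_min, y_min, x_max, y_max]) for each recognized line."""
    res = result_json["res"]
    lines = []
    # rec_texts, rec_scores and rec_boxes are assumed to be aligned index by index.
    for text, score, box in zip(res["rec_texts"], res["rec_scores"], res["rec_boxes"]):
        if text and score >= min_score:
            lines.append((text, float(score), [int(v) for v in box]))
    return lines
```

For example, `for res in pipeline.predict("./general_ocr_002.png"): print(extract_lines(res.json, min_score=0.5))`.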

Additionally, you can obtain the OCR pipeline configuration file and load it for prediction. Run the following command to save the configuration file to my_path:

Once you have the configuration file, you can customize the OCR pipeline configuration: simply set the `pipeline` parameter of `create_pipeline` to the path of the configuration file. For example:

```python
from paddlex import create_pipeline

pipeline = create_pipeline(pipeline="./my_path/OCR.yaml")

output = pipeline.predict(
    input="./general_ocr_002.png",
    use_doc_orientation_classify=False,
    use_doc_unwarping=False,
    use_textline_orientation=False,
)
for res in output:
    res.print()
    res.save_to_img("./output/")
    res.save_to_json("./output/")
```

Note: The parameters in the configuration file are initialization parameters for the pipeline. If you want to change the general OCR pipeline initialization parameters, you can directly modify the parameters in the configuration file and load the configuration file for prediction. In addition, CLI prediction also supports passing in a configuration file, just specify the path of the configuration file with --pipeline.

3. Development Integration/Deployment

If the general OCR pipeline meets your requirements for inference speed and accuracy, you can proceed with development integration/deployment directly.

If you need to use the general OCR pipeline directly in your Python project, refer to the example code in 2.2.2 Python Script Integration.

In addition, PaddleX also provides three other deployment methods, which are detailed as follows:

🚀 High-Performance Inference: In actual production environments, many applications have strict performance requirements for deployment strategies, especially response speed, to ensure efficient system operation and smooth user experience. To this end, PaddleX provides a high-performance inference plugin, which aims to deeply optimize the performance of model inference and pre/post-processing, significantly speeding up the end-to-end process. For detailed high-performance inference procedures, please refer to the PaddleX High-Performance Inference Guide.

☁️ Serving: Serving is a common deployment strategy in real-world production environments. By encapsulating inference functions into services, clients can access these services via network requests to obtain inference results. PaddleX supports various solutions for serving pipelines. For detailed pipeline serving procedures, please refer to the PaddleX Pipeline Serving Guide.

Below are the API reference and multi-language service invocation examples for the basic serving solution:

API Reference

For the main operations provided by the service:

- The HTTP request method is POST.

- Both the request body and response body are JSON data (JSON objects).

- When the request is processed successfully, the response status code is 200, and the attributes of the response body are as follows:

| Name | Type | Meaning |
|---|---|---|
| logId | string | The UUID of the request. |
| errorCode | integer | Error code. Fixed as 0. |
| errorMsg | string | Error message. Fixed as "Success". |
| result | object | The result of the operation. |

- When the request is not processed successfully, the attributes of the response body are as follows:

| Name | Type | Meaning |
|---|---|---|
| logId | string | The UUID of the request. |
| errorCode | integer | Error code. Same as the response status code. |
| errorMsg | string | Error message. |
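Because success and failure share the same envelope (`logId`, `errorCode`, `errorMsg`), a client can unwrap responses in one place. The sketch below returns `result` on success and otherwise raises an exception carrying the `logId` for troubleshooting. `unwrap_response` and `OcrServiceError` are hypothetical names, not part of PaddleX. Non-JSON error bodies, such as those from an intermediate proxy, would need separate handling before this function is called.

```python
from typing import Any, Dict


class OcrServiceError(RuntimeError):
    """Raised when the service reports a failure (hypothetical helper)."""

    def __init__(self, log_id: str, error_code: int, error_msg: str):
        super().__init__(f"[{log_id}] error {error_code}: {error_msg}")
        self.log_id = log_id
        self.error_code = error_code
        self.error_msg = error_msg


def unwrap_response(status_code: int, body: Dict[str, Any]) -> Dict[str, Any]:
    """Return body["result"] on success, otherwise raise OcrServiceError."""
    # On failure, errorCode equals the HTTP status code; on success it is 0.
    error_code = body.get("errorCode", status_code)
    if status_code == 200 and error_code == 0:
        return body["result"]
    raise OcrServiceError(body.get("logId", ""), error_code, body.get("errorMsg", ""))
```

With `requests`, this would be used as `result = unwrap_response(resp.status_code, resp.json())`.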

The main operations provided by the service are as follows:

infer

Obtain OCR results from images.

POST /ocr

- The attributes of the request body are as follows:

| Name | Type | Meaning | Required |
|---|---|---|---|
| file | string | The URL of an image or PDF file accessible by the server, or the Base64-encoded content of the file. By default, for PDF files exceeding 10 pages, only the first 10 pages will be processed. To remove the page limit, please add the following configuration to the pipeline configuration file: | Yes |
| fileType | integer \| null | The type of the file: 0 for PDF files, 1 for image files. If this attribute is missing, the file type will be inferred from the URL. | No |
| useDocOrientationClassify | boolean \| null | Please refer to the description of the `use_doc_orientation_classify` parameter of the pipeline object's `predict` method. | No |
| useDocUnwarping | boolean \| null | Please refer to the description of the `use_doc_unwarping` parameter of the pipeline object's `predict` method. | No |
| useTextlineOrientation | boolean \| null | Please refer to the description of the `use_textline_orientation` parameter of the pipeline object's `predict` method. | No |
| textDetLimitSideLen | integer \| null | Please refer to the description of the `text_det_limit_side_len` parameter of the pipeline object's `predict` method. | No |
| textDetLimitType | string \| null | Please refer to the description of the `text_det_limit_type` parameter of the pipeline object's `predict` method. | No |
| textDetThresh | number \| null | Please refer to the description of the `text_det_thresh` parameter of the pipeline object's `predict` method. | No |
| textDetBoxThresh | number \| null | Please refer to the description of the `text_det_box_thresh` parameter of the pipeline object's `predict` method. | No |
| textDetUnclipRatio | number \| null | Please refer to the description of the `text_det_unclip_ratio` parameter of the pipeline object's `predict` method. | No |
| textRecScoreThresh | number \| null | Please refer to the description of the `text_rec_score_thresh` parameter of the pipeline object's `predict` method. | No |
| returnWordBox | boolean \| null | Please refer to the description of the `return_word_box` parameter of the pipeline object's `predict` method. | No |
| visualize | boolean \| null | Whether to return the final visualization image and the intermediate images produced during processing. If this attribute is omitted or set to null, the setting in the pipeline configuration file is used; setting it explicitly in the request overrides the configuration file. If neither the request body nor the configuration file sets it, images are returned by default. | No |
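The optional request attributes are the camelCase forms of the `predict()` parameter names, plus `visualize`. The sketch below builds a request body from snake_case keyword arguments. It rejects names that are not in the table above and omits options left as `None`, so the server applies its own defaults in that case, much as an explicit null would. `build_ocr_payload` and `to_camel` are hypothetical helpers, not part of PaddleX.

```python
from typing import Any, Dict, Optional

# snake_case names of the optional request attributes in the table above.
_OPTION_NAMES = {
    "use_doc_orientation_classify", "use_doc_unwarping", "use_textline_orientation",
    "text_det_limit_side_len", "text_det_limit_type", "text_det_thresh",
    "text_det_box_thresh", "text_det_unclip_ratio", "text_rec_score_thresh",
    "return_word_box", "visualize",
}


def to_camel(name: str) -> str:
    """Convert snake_case to camelCase, e.g. use_doc_unwarping -> useDocUnwarping."""
    head, *rest = name.split("_")
    return head + "".join(part.capitalize() for part in rest)


def build_ocr_payload(file: str, file_type: Optional[int] = None, **options: Any) -> Dict[str, Any]:
    """Build the JSON body for POST /ocr; `file` is a URL or Base64-encoded content."""
    payload: Dict[str, Any] = {"file": file}
    if file_type is not None:
        if file_type not in (0, 1):
            raise ValueError("fileType must be 0 (PDF) or 1 (image)")
        payload["fileType"] = file_type
    for key, value in options.items():
        if key not in _OPTION_NAMES:
            raise TypeError(f"unknown request option: {key}")
        if value is not None:  # omitted -> server-side default
            payload[to_camel(key)] = value
    return payload
```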

- When the request is processed successfully, the `result` in the response body has the following attributes:

| Name | Type | Description |
|---|---|---|
| ocrResults | array | OCR results. The array length is 1 (for image input) or the actual number of document pages processed (for PDF input). For PDF input, each element in the array represents the result of each page actually processed in the PDF file. |
| dataInfo | object | Information about the input data. |

Each element in `ocrResults` is an object with the following attributes:

| Name | Type | Description |
|---|---|---|
| prunedResult | object | A simplified version of the `res` field in the JSON representation of the result generated by the pipeline object's `predict` method, with the `input_path` and `page_index` fields removed. |
| ocrImage | string \| null | The OCR result image, with the detected text positions marked. The image is in JPEG format and Base64-encoded. |
| docPreprocessingImage | string \| null | The visualization image of the document preprocessing result. The image is in JPEG format and Base64-encoded. |
| inputImage | string \| null | The input image. The image is in JPEG format and Base64-encoded. |

Multi-language Service Call Example

Python

```python
import base64

import requests

API_URL = "http://localhost:8080/ocr"
file_path = "./demo.jpg"

# Encode the local image as Base64
with open(file_path, "rb") as file:
    file_bytes = file.read()
    file_data = base64.b64encode(file_bytes).decode("ascii")

payload = {"file": file_data, "fileType": 1}

# Call the API
response = requests.post(API_URL, json=payload)
assert response.status_code == 200, response.text

# Process the returned data
result = response.json()["result"]
for i, res in enumerate(result["ocrResults"]):
    print(res["prunedResult"])
    # ocrImage is null when visualization is disabled
    if res.get("ocrImage") is not None:
        ocr_img_path = f"ocr_{i}.jpg"
        with open(ocr_img_path, "wb") as f:
            f.write(base64.b64decode(res["ocrImage"]))
        print(f"Output image saved at {ocr_img_path}")
```

C++

```cpp
#include <iostream>
#include <fstream>
#include <vector>
#include <string>
#include "cpp-httplib/httplib.h" // https://github.com/Huiyicc/cpp-httplib
#include "nlohmann/json.hpp" // https://github.com/nlohmann/json
#include "base64.hpp" // https://github.com/tobiaslocker/base64

int main() {
    httplib::Client client("localhost", 8080);

    const std::string filePath = "./demo.jpg";

    // Read the whole file into memory
    std::ifstream file(filePath, std::ios::binary | std::ios::ate);
    if (!file) {
        std::cerr << "Error opening file." << std::endl;
        return 1;
    }
    std::streamsize size = file.tellg();
    file.seekg(0, std::ios::beg);
    std::vector<char> buffer(size);
    if (!file.read(buffer.data(), size)) {
        std::cerr << "Error reading file." << std::endl;
        return 1;
    }

    // Encode the file content as Base64
    std::string bufferStr(buffer.data(), static_cast<size_t>(size));
    std::string encodedFile = base64::to_base64(bufferStr);

    nlohmann::json jsonObj;
    jsonObj["file"] = encodedFile;
    jsonObj["fileType"] = 1;

    auto response = client.Post("/ocr", jsonObj.dump(), "application/json");

    if (response && response->status == 200) {
        nlohmann::json jsonResponse = nlohmann::json::parse(response->body);
        auto result = jsonResponse["result"];

        if (!result.is_object() || !result["ocrResults"].is_array()) {
            std::cerr << "Unexpected response structure." << std::endl;
            return 1;
        }

        for (size_t i = 0; i < result["ocrResults"].size(); ++i) {
            auto ocrResult = result["ocrResults"][i];
            std::cout << ocrResult["prunedResult"] << std::endl;

            // ocrImage is null when visualization is disabled
            if (ocrResult["ocrImage"].is_null()) {
                continue;
            }

            std::string ocrImgPath = "ocr_" + std::to_string(i) + ".jpg";
            std::string encodedImage = ocrResult["ocrImage"];
            std::string decodedImage = base64::from_base64(encodedImage);

            std::ofstream outputImage(ocrImgPath, std::ios::binary);
            if (outputImage.is_open()) {
                outputImage.write(decodedImage.c_str(), static_cast<std::streamsize>(decodedImage.size()));
                outputImage.close();
                std::cout << "Output image saved at " << ocrImgPath << std::endl;
            } else {
                std::cerr << "Unable to open file for writing: " << ocrImgPath << std::endl;
            }
        }
    } else {
        std::cerr << "Failed to send HTTP request." << std::endl;
        if (response) {
            std::cerr << "HTTP status code: " << response->status << std::endl;
            std::cerr << "Response body: " << response->body << std::endl;
        }
        return 1;
    }

    return 0;
}
```

Java

```java
import okhttp3.*;
import com.fasterxml.jackson.databind.ObjectMapper;
import com.fasterxml.jackson.databind.JsonNode;
import com.fasterxml.jackson.databind.node.ObjectNode;

import java.io.File;
import java.io.FileOutputStream;
import java.io.IOException;
import java.util.Base64;

public class Main {
    public static void main(String[] args) throws IOException {
        String API_URL = "http://localhost:8080/ocr";
        String imagePath = "./demo.jpg";

        // Encode the local image as Base64
        File file = new File(imagePath);
        byte[] fileContent = java.nio.file.Files.readAllBytes(file.toPath());
        String base64Image = Base64.getEncoder().encodeToString(fileContent);

        ObjectMapper objectMapper = new ObjectMapper();
        ObjectNode payload = objectMapper.createObjectNode();
        payload.put("file", base64Image);
        payload.put("fileType", 1);

        OkHttpClient client = new OkHttpClient();
        MediaType JSON = MediaType.get("application/json; charset=utf-8");
        RequestBody body = RequestBody.create(JSON, payload.toString());

        Request request = new Request.Builder()
                .url(API_URL)
                .post(body)
                .build();

        try (Response response = client.newCall(request).execute()) {
            if (response.isSuccessful()) {
                String responseBody = response.body().string();
                JsonNode root = objectMapper.readTree(responseBody);
                JsonNode result = root.get("result");
                JsonNode ocrResults = result.get("ocrResults");

                for (int i = 0; i < ocrResults.size(); i++) {
                    JsonNode item = ocrResults.get(i);
                    JsonNode prunedResult = item.get("prunedResult");
                    System.out.println("Pruned Result [" + i + "]: " + prunedResult.toString());

                    // ocrImage is null when visualization is disabled
                    if (!item.hasNonNull("ocrImage")) {
                        continue;
                    }
                    String ocrImageBase64 = item.get("ocrImage").asText();
                    byte[] ocrImageBytes = Base64.getDecoder().decode(ocrImageBase64);
                    String ocrImgPath = "ocr_result_" + i + ".jpg";
                    try (FileOutputStream fos = new FileOutputStream(ocrImgPath)) {
                        fos.write(ocrImageBytes);
                        System.out.println("Saved OCR image to: " + ocrImgPath);
                    }
                }
            } else {
                System.err.println("Request failed with HTTP code: " + response.code());
            }
        }
    }
}
```

Go

```go
package main

import (
	"bytes"
	"encoding/base64"
	"encoding/json"
	"fmt"
	"io"
	"net/http"
	"os"
)

func main() {
	API_URL := "http://localhost:8080/ocr"
	filePath := "./demo.jpg"

	// Encode the local image as Base64
	fileBytes, err := os.ReadFile(filePath)
	if err != nil {
		fmt.Printf("Error reading file: %v\n", err)
		return
	}
	fileData := base64.StdEncoding.EncodeToString(fileBytes)

	payload := map[string]interface{}{
		"file":     fileData,
		"fileType": 1,
	}
	payloadBytes, err := json.Marshal(payload)
	if err != nil {
		fmt.Printf("Error marshaling payload: %v\n", err)
		return
	}

	// Call the API
	client := &http.Client{}
	req, err := http.NewRequest("POST", API_URL, bytes.NewBuffer(payloadBytes))
	if err != nil {
		fmt.Printf("Error creating request: %v\n", err)
		return
	}
	req.Header.Set("Content-Type", "application/json")

	res, err := client.Do(req)
	if err != nil {
		fmt.Printf("Error sending request: %v\n", err)
		return
	}
	defer res.Body.Close()

	if res.StatusCode != http.StatusOK {
		fmt.Printf("Unexpected status code: %d\n", res.StatusCode)
		return
	}

	body, err := io.ReadAll(res.Body)
	if err != nil {
		fmt.Printf("Error reading response body: %v\n", err)
		return
	}

	type OcrResult struct {
		PrunedResult map[string]interface{} `json:"prunedResult"`
		OcrImage     *string                `json:"ocrImage"`
	}

	type Response struct {
		Result struct {
			OcrResults []OcrResult  `json:"ocrResults"`
			DataInfo   interface{} `json:"dataInfo"`
		} `json:"result"`
	}

	var respData Response
	if err := json.Unmarshal(body, &respData); err != nil {
		fmt.Printf("Error unmarshaling response: %v\n", err)
		return
	}

	// Process the returned data
	for i, item := range respData.Result.OcrResults {
		fmt.Printf("[%d] prunedResult: %v\n", i, item.PrunedResult)

		// OcrImage is nil when visualization is disabled
		if item.OcrImage == nil {
			continue
		}
		imgBytes, err := base64.StdEncoding.DecodeString(*item.OcrImage)
		if err != nil {
			fmt.Printf("Error decoding image %d: %v\n", i, err)
			continue
		}

		filename := fmt.Sprintf("ocr_%d.jpg", i)
		if err := os.WriteFile(filename, imgBytes, 0644); err != nil {
			fmt.Printf("Error saving image %s: %v\n", filename, err)
			continue
		}
		fmt.Printf("Output image saved at %s\n", filename)
	}
}
```

C#

```csharp
using System;
using System.IO;
using System.Net.Http;
using System.Text;
using System.Threading.Tasks;
using Newtonsoft.Json.Linq;

class Program
{
    static readonly string API_URL = "http://localhost:8080/ocr";
    static readonly string inputFilePath = "./demo.jpg";

    static async Task Main(string[] args)
    {
        var httpClient = new HttpClient();

        // Encode the local image as Base64
        byte[] fileBytes = File.ReadAllBytes(inputFilePath);
        string fileData = Convert.ToBase64String(fileBytes);

        var payload = new JObject
        {
            { "file", fileData },
            { "fileType", 1 }
        };
        var content = new StringContent(payload.ToString(), Encoding.UTF8, "application/json");

        // Call the API
        HttpResponseMessage response = await httpClient.PostAsync(API_URL, content);
        response.EnsureSuccessStatusCode();

        // Process the returned data
        string responseBody = await response.Content.ReadAsStringAsync();
        JObject jsonResponse = JObject.Parse(responseBody);
        JArray ocrResults = (JArray)jsonResponse["result"]["ocrResults"];

        for (int i = 0; i < ocrResults.Count; i++)
        {
            var res = ocrResults[i];
            Console.WriteLine($"[{i}] prunedResult:\n{res["prunedResult"]}");

            string base64Image = res["ocrImage"]?.ToString();
            if (!string.IsNullOrEmpty(base64Image))
            {
                string outputPath = $"ocr_{i}.jpg";
                byte[] imageBytes = Convert.FromBase64String(base64Image);
                File.WriteAllBytes(outputPath, imageBytes);
                Console.WriteLine($"OCR image saved to {outputPath}");
            }
            else
            {
                Console.WriteLine($"OCR image at index {i} is null.");
            }
        }
    }
}
```

Node.js

```javascript
const axios = require('axios');
const fs = require('fs');

const API_URL = 'http://localhost:8080/ocr';
const imagePath = './demo.jpg';
const fileType = 1;

// Encode the local image as Base64
function encodeImageToBase64(filePath) {
  const bitmap = fs.readFileSync(filePath);
  return Buffer.from(bitmap).toString('base64');
}

const payload = {
  file: encodeImageToBase64(imagePath),
  fileType: fileType
};

// Call the API
axios.post(API_URL, payload)
  .then(response => {
    const results = response.data.result.ocrResults;
    results.forEach((res, index) => {
      console.log(`\n[${index}] prunedResult:`);
      console.log(res.prunedResult);

      // ocrImage is null when visualization is disabled
      if (res.ocrImage) {
        const imgPath = `ocr_${index}.jpg`;
        fs.writeFileSync(imgPath, Buffer.from(res.ocrImage, 'base64'));
        console.log(`Output image saved at ${imgPath}`);
      } else {
        console.log(`[${index}] No ocrImage.`);
      }
    });
  })
  .catch(error => {
    console.error('Error during API request:', error.message || error);
  });
```

PHP

```php
<?php

$API_URL = "http://localhost:8080/ocr";
$image_path = "./demo.jpg";

// Encode the local image as Base64
$image_data = base64_encode(file_get_contents($image_path));
$payload = array(
    "file" => $image_data,
    "fileType" => 1
);

// Call the API
$ch = curl_init($API_URL);
curl_setopt($ch, CURLOPT_POST, true);
curl_setopt($ch, CURLOPT_POSTFIELDS, json_encode($payload));
curl_setopt($ch, CURLOPT_HTTPHEADER, array('Content-Type: application/json'));
curl_setopt($ch, CURLOPT_RETURNTRANSFER, true);
$response = curl_exec($ch);
curl_close($ch);

// Process the returned data
$result = json_decode($response, true)["result"]["ocrResults"];
foreach ($result as $i => $item) {
    echo "[$i] prunedResult:\n";
    print_r($item["prunedResult"]);

    if (!empty($item["ocrImage"])) {
        $output_img_path = "ocr_{$i}.jpg";
        file_put_contents($output_img_path, base64_decode($item["ocrImage"]));
        echo "OCR image saved at $output_img_path\n";
    } else {
        echo "No ocrImage found for item $i\n";
    }
}

?>
```

📱 On-Device Deployment: Edge deployment is a method of placing computing and data processing capabilities directly on user devices, allowing them to process data locally without relying on remote servers. PaddleX supports deploying models on edge devices such as Android. For detailed instructions, please refer to the PaddleX On-Device Deployment Guide. You can choose the appropriate deployment method based on your needs to integrate the model pipeline into your AI applications.

4. Custom Development¶

If the default model weights provided by the General OCR pipeline do not meet your requirements in terms of accuracy or speed, you can attempt to fine-tune the existing models using your own domain-specific or application-specific data to improve the recognition performance of the General OCR pipeline in your scenario.

4.1 Model Fine-Tuning¶

Because the General OCR pipeline consists of several modules, unsatisfactory results may originate in any one of them. Examine the images with poor recognition results to identify the problematic module, then follow the corresponding fine-tuning tutorial listed in the table below.

| Scenario | Fine-Tuning Module | Fine-Tuning Reference Link |
|---|---|---|
| Text is missed in detection | Text Detection Module | Link |
| Text content is inaccurate | Text Recognition Module | Link |
| Vertical or rotated text line correction is inaccurate | Text Line Orientation Classification Module | Link |
| Whole-image rotation correction is inaccurate | Document Image Orientation Classification Module | Link |
| Image distortion correction is inaccurate | Text Image Correction Module | Fine-tuning not supported yet |

4.2 Model Application¶

After fine-tuning with your private dataset, you will obtain local model weight files.

To use the fine-tuned weights, edit the pipeline configuration file and set the model_dir field of each fine-tuned module to the local path of its weights:

```yaml
SubPipelines:
  DocPreprocessor:
    ...
    SubModules:
      DocOrientationClassify:
        module_name: doc_text_orientation
        model_name: PP-LCNet_x1_0_doc_ori
        model_dir: null # Replace with the path to the fine-tuned document image orientation classification model weights.
      ...

SubModules:
  TextDetection:
    module_name: text_detection
    model_name: PP-OCRv5_mobile_det
    model_dir: null # Replace with the path to the fine-tuned text detection model weights.
    ...
  TextLineOrientation:
    module_name: textline_orientation
    model_name: PP-LCNet_x0_25_textline_ori
    model_dir: null # Replace with the path to the fine-tuned text line orientation classification model weights.
    batch_size: 1
  TextRecognition:
    module_name: text_recognition
    model_name: PP-OCRv5_mobile_rec
    model_dir: null # Replace with the path to the fine-tuned text recognition model weights.
    batch_size: 1
```

Subsequently, refer to the command-line or Python script methods in 2.2 Local Experience to load the modified pipeline configuration file.
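If you maintain several fine-tuned checkpoints, setting the model_dir fields programmatically can be less error-prone than editing the file by hand. The Python sketch below is a minimal illustration, assuming the configuration has already been parsed into a nested dictionary that mirrors the YAML above (for example with PyYAML's yaml.safe_load, which is a third-party package). The helper name with_finetuned_weights and the weight paths are hypothetical and are not part of the PaddleX API.

```python
import copy

# Hypothetical in-memory form of the pipeline configuration shown above,
# trimmed to the fields relevant to swapping in fine-tuned weights.
BASE_CONFIG = {
    "SubPipelines": {
        "DocPreprocessor": {
            "SubModules": {
                "DocOrientationClassify": {
                    "module_name": "doc_text_orientation",
                    "model_name": "PP-LCNet_x1_0_doc_ori",
                    "model_dir": None,
                },
            },
        },
    },
    "SubModules": {
        "TextDetection": {
            "module_name": "text_detection",
            "model_name": "PP-OCRv5_mobile_det",
            "model_dir": None,
        },
        "TextLineOrientation": {
            "module_name": "textline_orientation",
            "model_name": "PP-LCNet_x0_25_textline_ori",
            "model_dir": None,
            "batch_size": 1,
        },
        "TextRecognition": {
            "module_name": "text_recognition",
            "model_name": "PP-OCRv5_mobile_rec",
            "model_dir": None,
            "batch_size": 1,
        },
    },
}


def with_finetuned_weights(config, overrides):
    """Return a copy of `config` with model_dir set for selected modules.

    `overrides` maps a key path such as ("SubModules", "TextDetection")
    to the local directory holding that module's fine-tuned weights.
    Modules that are not listed keep their original model_dir value.
    """
    new_config = copy.deepcopy(config)  # leave the caller's dict untouched
    for key_path, model_dir in overrides.items():
        node = new_config
        for key in key_path:
            node = node[key]  # a mistyped module name raises KeyError
        node["model_dir"] = model_dir
    return new_config


# Example: fine-tuned detection and recognition weights (hypothetical paths).
finetuned = with_finetuned_weights(BASE_CONFIG, {
    ("SubModules", "TextDetection"): "./finetuned/text_det",
    ("SubModules", "TextRecognition"): "./finetuned/text_rec",
})
print(finetuned["SubModules"]["TextRecognition"]["model_dir"])
```

Copying the configuration before editing keeps the base settings reusable, so one template can produce a separate configuration file per experiment.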

5. Multi-Hardware Support¶

PaddleX supports a variety of mainstream hardware devices, including NVIDIA GPUs, Kunlunxin XPUs, Ascend NPUs, and Cambricon MLUs. Simply modify the --device parameter to seamlessly switch between different hardware devices.

For example, to run General OCR pipeline inference on an Ascend NPU, use the following command:

```bash
paddlex --pipeline OCR \
    --input general_ocr_002.png \
    --use_doc_orientation_classify False \
    --use_doc_unwarping False \
    --use_textline_orientation False \
    --save_path ./output \
    --device npu:0
```

In a Python script, you can likewise specify the device when calling create_pipeline() or predict().
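Because only the --device value differs between hardware targets, the command can be generated from a single template. The Python sketch below uses only the standard library to build the argument list of the command shown above for a given device string. The helper name ocr_command is hypothetical, and the gpu:0, xpu:0 and mlu:0 identifiers are assumed to follow the same type:index pattern as npu:0; confirm them in the PaddleX Multi-Hardware Usage Guide.

```python
import shlex


def ocr_command(input_path, device, save_path="./output"):
    """Build the paddlex CLI argument list for the General OCR pipeline.

    The flags mirror the command above; only `device` varies per target.
    """
    return [
        "paddlex", "--pipeline", "OCR",
        "--input", input_path,
        "--use_doc_orientation_classify", "False",
        "--use_doc_unwarping", "False",
        "--use_textline_orientation", "False",
        "--save_path", save_path,
        "--device", device,
    ]


# Assumed device identifiers: NVIDIA GPU, Ascend NPU, Kunlunxin XPU, Cambricon MLU.
for device in ["gpu:0", "npu:0", "xpu:0", "mlu:0"]:
    # shlex.join renders a copy-pasteable shell command (Python 3.8+).
    print(shlex.join(ocr_command("general_ocr_002.png", device)))
```

To actually run inference, pass the list to subprocess.run(ocr_command(...), check=True); supplying arguments as a list avoids shell quoting problems with paths that contain spaces.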

If you want to use the General OCR pipeline on more types of hardware, please refer to the PaddleX Multi-Hardware Usage Guide.