3D Multimodal Fusion Detection Module Tutorial

I. Overview

The 3D multimodal fusion detection module is a key component in the fields of computer vision and autonomous driving, responsible for locating and marking the 3D coordinates and detection box information of regions containing specific targets in images or videos. The performance of this module directly affects the accuracy and efficiency of the entire vision or autonomous driving perception system. The 3D multimodal fusion detection module typically outputs 3D bounding boxes of target regions, which are then passed as inputs to the target recognition module for further processing.

II. List of Supported Models

The inference time only includes the model inference time and does not include the time for pre- or post-processing.

| Model | Model Download Link | mAP(%) | NDS | Introduction |
|---|---|---|---|---|
| BEVFusion | Inference Model/Training Model | 53.9 | 60.9 | BEVFusion is a multimodal fusion model that operates in the Bird's Eye View (BEV) perspective. It uses two branches to process data from different modalities and obtain lidar and camera features in BEV space. The camera branch uses the bottom-up LSS (Lift, Splat, Shoot) approach to explicitly generate image BEV features, while the lidar branch uses a classic point cloud detection network. Finally, the BEV features of the two modalities are aligned and fused, then fed to detection heads or segmentation heads. |

Test Environment Description:

- Performance Test Environment
  - Test Dataset: The accuracy metrics above were measured on the nuScenes validation set, reporting mAP(0.5:0.95) and NDS (60.9); the precision type is FP32.
  - Hardware Configuration:
    - GPU: NVIDIA Tesla T4
    - CPU: Intel Xeon Gold 6271C @ 2.60GHz
  - Software Environment:
    - Ubuntu 20.04 / CUDA 11.8 / cuDNN 8.9 / TensorRT 8.6.1.6
    - paddlepaddle 3.0.0 / paddlex 3.0.3

- Inference Mode Description

| Mode | GPU Configuration | CPU Configuration | Acceleration Technology Combination |
|---|---|---|---|
| Normal Mode | FP32 Precision / No TRT Acceleration | FP32 Precision / 8 Threads | PaddleInference |
| High-Performance Mode | Optimal combination of pre-selected precision types and acceleration strategies | FP32 Precision / 8 Threads | Pre-selected optimal backend (Paddle/OpenVINO/TRT, etc.) |

III. Quick Integration

❗ Before quick integration, please install the PaddleX wheel package first. For details, refer to the PaddleX Local Installation Guide.

After installing the wheel package, you can run inference for the 3D multimodal fusion detection module with just a few lines of code. You can switch freely between the models under this module, and you can also integrate the module's model inference into your own project. Before running the following code, please download the sample input to your local machine.

from paddlex import create_pipeline

pipeline = create_pipeline(pipeline="3d_bev_detection")

output = pipeline.predict("nuscenes_demo_infer.tar")

for res in output:

res.print() ## Print the structured output of the prediction

res.save_to_json("./output/") ## Save the results to a JSON file```

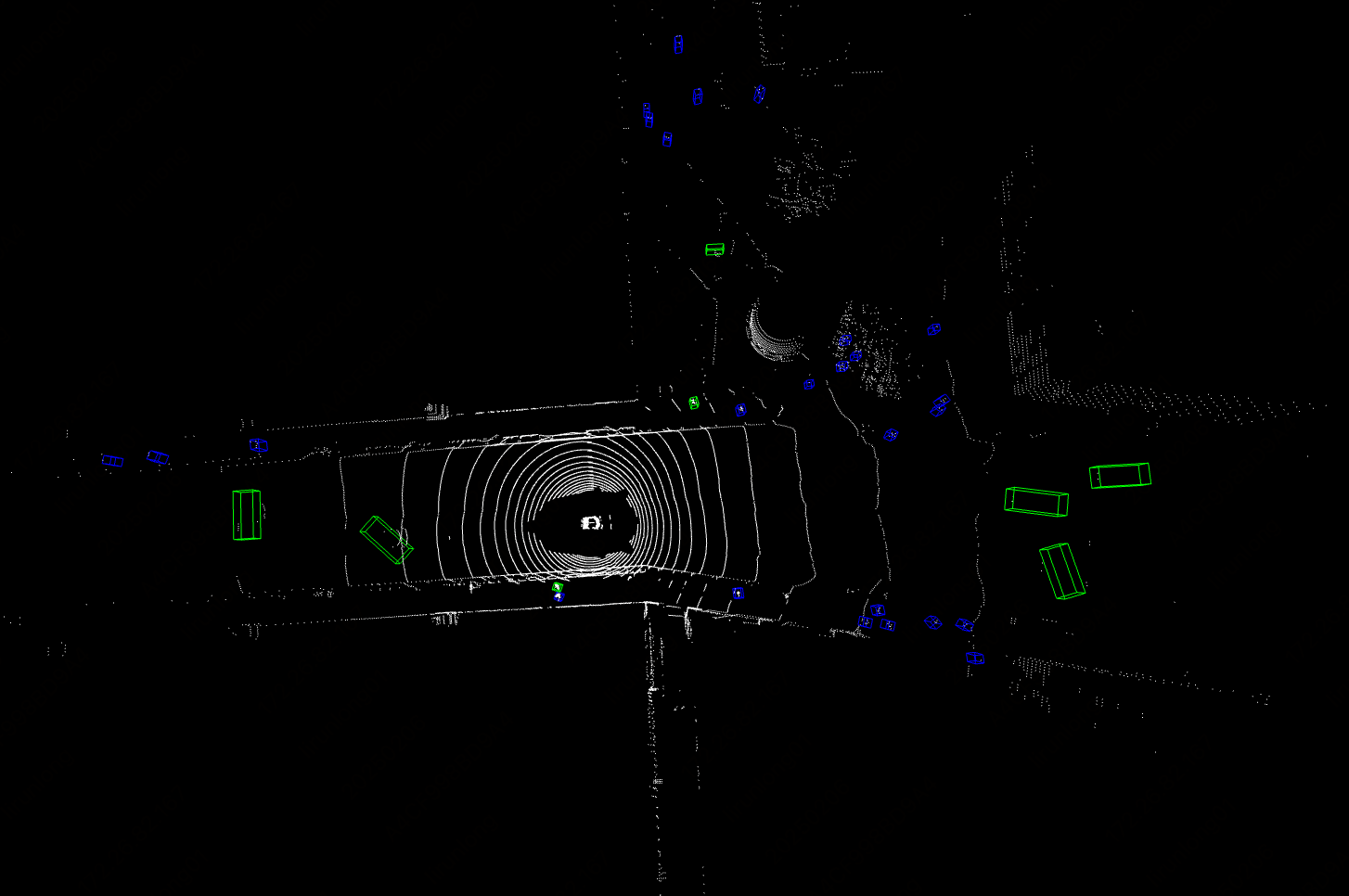

res.visualize(save_path="./output/", show=True) ## 3D result visualization. If the runtime environment has a graphical interface, set `show=True`; otherwise, set it to `False`.

Note:

1. To visualize 3D detection results, the open3d package must be installed first (it is distributed on PyPI as `open3d`).

2. If the runtime environment does not have a graphical interface, visualization will not be possible, but the results will still be saved. You can then run the script in an environment that supports a graphical interface to visualize the saved results.

After running, the result obtained is:

```
{"res":
  {
    'input_path': 'samples/LIDAR_TOP/n015-2018-10-08-15-36-50+0800__LIDAR_TOP__1538984253447765.pcd.bin',
    'sample_id': 'b4ff30109dd14c89b24789dc5713cf8c',
    'input_img_paths': [
      'samples/CAM_FRONT_LEFT/n015-2018-10-08-15-36-50+0800__CAM_FRONT_LEFT__1538984253404844.jpg',
      'samples/CAM_FRONT/n015-2018-10-08-15-36-50+0800__CAM_FRONT__1538984253412460.jpg',
      'samples/CAM_FRONT_RIGHT/n015-2018-10-08-15-36-50+0800__CAM_FRONT_RIGHT__1538984253420339.jpg',
      'samples/CAM_BACK_RIGHT/n015-2018-10-08-15-36-50+0800__CAM_BACK_RIGHT__1538984253427893.jpg',
      'samples/CAM_BACK/n015-2018-10-08-15-36-50+0800__CAM_BACK__1538984253437525.jpg',
      'samples/CAM_BACK_LEFT/n015-2018-10-08-15-36-50+0800__CAM_BACK_LEFT__1538984253447423.jpg'
    ],
    "boxes_3d": [
      [
        14.5425386428833,
        22.142045974731445,
        -1.2903141975402832,
        1.8441576957702637,
        4.433370113372803,
        1.7367216348648071,
        6.367165565490723,
        0.0036598597653210163,
        -0.013568558730185032
      ]
    ],
    "labels_3d": [
      0
    ],
    "scores_3d": [
      0.9920279383659363
    ]
  }
}
```

The meanings of the run results parameters are as follows:

- input_path: Indicates the input point cloud data path of the input sample to be predicted.

- sample_id: Indicates the unique identifier of the input sample to be predicted.

- input_img_paths: Indicates the input image data path of the input sample to be predicted.

- boxes_3d: Indicates the prediction box information of the 3D sample. Each prediction box is a list of length 9, whose elements are:
  - 0: x coordinate of the center point
  - 1: y coordinate of the center point
  - 2: z coordinate of the center point
  - 3: Width of the detection box
  - 4: Length of the detection box
  - 5: Height of the detection box
  - 6: Rotation angle
  - 7: Velocity in the x direction of the coordinate system
  - 8: Velocity in the y direction of the coordinate system

- labels_3d: Indicates the predicted categories corresponding to all prediction boxes of the 3D sample.

- scores_3d: Indicates the confidence scores corresponding to all prediction boxes of the 3D sample.
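As a minimal sketch of how the nine-element layout listed above could be unpacked, the snippet below wraps each `boxes_3d` entry in a small dataclass and drops low-score detections. The `Box3D` class, the `decode_result` helper, and the 0.5 threshold are illustrative choices, not part of PaddleX; the sample values are copied from the output shown earlier.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Box3D:
    """One detection, following the 9-element layout documented above."""
    x: float
    y: float
    z: float
    width: float
    length: float
    height: float
    rotation: float
    vx: float
    vy: float
    label: int
    score: float


def decode_result(res: Dict, min_score: float = 0.5) -> List[Box3D]:
    """Pair each box with its label and score, dropping low-score boxes."""
    boxes = []
    for values, label, score in zip(res["boxes_3d"], res["labels_3d"], res["scores_3d"]):
        if len(values) != 9:
            raise ValueError(f"expected 9 values per box, got {len(values)}")
        if score >= min_score:
            boxes.append(Box3D(*values, label=label, score=score))
    return boxes


# Values copied from the sample output above.
sample = {
    "boxes_3d": [[14.5425386428833, 22.142045974731445, -1.2903141975402832,
                  1.8441576957702637, 4.433370113372803, 1.7367216348648071,
                  6.367165565490723, 0.0036598597653210163, -0.013568558730185032]],
    "labels_3d": [0],
    "scores_3d": [0.9920279383659363],
}

for box in decode_result(sample):
    print(f"label={box.label} score={box.score:.3f} "
          f"center=({box.x:.2f}, {box.y:.2f}, {box.z:.2f}) "
          f"size(w, l, h)=({box.width:.2f}, {box.length:.2f}, {box.height:.2f})")
```

Keeping the fields named makes downstream code less dependent on remembering which index holds the width and which holds the length.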

The following is an explanation of relevant methods and parameters:

`create_model` instantiates a 3D detection model (here, `BEVFusion` is used as an example), with specific explanations as follows:

| Parameter | Parameter Description | Parameter Type | Options | Default Value |
|---|---|---|---|---|
| model_name | Name of the model | str | None | BEVFusion |
| model_dir | Path where the model is stored | str | None | None |
| device | The device used for model inference | str | It supports specifying specific GPU card numbers, such as "gpu:0", other hardware card numbers, such as "npu:0", or CPU, such as "cpu". | gpu:0 |
| use_hpip | Whether to enable the high-performance inference plugin | bool | None | False |
| hpi_config | High-performance inference configuration | dict or None | None | None |

- The `model_name` must be specified. After specifying `model_name`, the default model parameters built into PaddleX will be used. If `model_dir` is specified, the user-defined model will be used.

- The `predict()` method of the 3D detection model is called for inference prediction. The parameters of the `predict()` method are `input` and `batch_size`, with specific explanations as follows:

| Parameter | Parameter Description | Parameter Type | Options | Default Value |
|---|---|---|---|---|
| input | Data to be predicted, supporting multiple input types | str/list | | None |
| batch_size | Batch size | int | Any integer | 1 |

- Process the prediction results. Each sample's prediction result is a corresponding Result object, and it supports operations such as printing and saving as a `json` file:

| Method | Method Description | Parameter | Parameter Type | Parameter Description | Default Value |
|---|---|---|---|---|---|
| print() | Print the result to the terminal | format_json | bool | Whether to format the output content using JSON indentation | True |
| | | indent | int | Specify the indentation level to beautify the output JSON data, making it more readable. It is only effective when format_json is True | 4 |
| | | ensure_ascii | bool | Control whether non-ASCII characters are escaped to Unicode. When set to True, all non-ASCII characters will be escaped; False retains the original characters. It is only effective when format_json is True | False |
| save_to_json() | Save the result as a JSON-formatted file | save_path | str | The path to save the file. When it is a directory, the saved file name will be consistent with the input file name | None |
| | | indent | int | Specify the indentation level to beautify the output JSON data, making it more readable. It is only effective when format_json is True | 4 |
| | | ensure_ascii | bool | Control whether non-ASCII characters are escaped to Unicode. When set to True, all non-ASCII characters will be escaped; False retains the original characters. It is only effective when format_json is True | False |

- Additionally, the prediction results can be obtained through attributes, as follows:

| Attribute | Attribute Description |
|---|---|
| json | Get the prediction result in json format |

For more information on the usage of PaddleX single-model inference API, please refer to PaddleX Single-Model Python Script Usage Instructions.
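The `indent` and `ensure_ascii` descriptions in the method table above match the parameters of the same names in Python's standard `json.dumps`. Assuming the Result methods follow those semantics (an inference from the descriptions, not a confirmed implementation detail), their effect can be previewed with the standard library alone. The `record` dictionary below is a made-up example.

```python
import json

# Hypothetical record containing a non-ASCII value.
record = {"sample_id": "demo", "note": "车辆", "scores_3d": [0.99]}

# ensure_ascii=True escapes non-ASCII characters as \uXXXX sequences.
compact = json.dumps(record, ensure_ascii=True)

# indent=4 pretty-prints; ensure_ascii=False keeps the original characters.
pretty = json.dumps(record, indent=4, ensure_ascii=False)

print(compact)
print(pretty)
```

Both strings decode to the same data; the options only change how the text is written.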

IV. Secondary Development

If you need higher accuracy than the existing models provide, you can use the secondary development capabilities of PaddleX to develop better 3D multimodal fusion detection models. Before using PaddleX to develop such models, please make sure to install the corresponding model training plugin for PaddleX. For the installation process, refer to the PaddleX Local Installation Guide.

4.1 Data Preparation

Before training a model, you need to prepare the dataset for the corresponding task module. PaddleX provides a data validation feature for each module, and only data that passes the validation can be used for model training. In addition, PaddleX offers a Demo dataset for each module, and you can complete subsequent development based on the official Demo data.

4.1.1 Downloading Demo Data

You can refer to the following command to download the Demo dataset to the specified folder:

```bash
wget https://paddle-model-ecology.bj.bcebos.com/paddlex/data/nuscenes_demo.tar -P ./dataset
tar -xf ./dataset/nuscenes_demo.tar -C ./dataset/
```

4.1.2 Data Validation

Data validation can be completed with a single command:

```bash
python main.py -c paddlex/configs/modules/3d_bev_detection/BEVFusion.yaml \
    -o Global.mode=check_dataset \
    -o Global.dataset_dir=./dataset/nuscenes_demo
```

Verification Result Details

The specific content of the verification result file is:

```json
{
  "done_flag": true,
  "check_pass": true,
  "attributes": {
    "num_classes": 11,
    "train_mate": [
      {
        "sample_idx": "f9878012c3f6412184c294c13ba4bac3",
        "lidar_path": "./data/nuscenes/samples/LIDAR_TOP/n008-2018-05-21-11-06-59-0400__LIDAR_TOP__1526915243047392.pcd.bin",
        "image_paths": [
          "./data/nuscenes/samples/CAM_FRONT_LEFT/n008-2018-05-21-11-06-59-0400__CAM_FRONT_LEFT__1526915243004917.jpg",
          "./data/nuscenes/samples/CAM_FRONT/n008-2018-05-21-11-06-59-0400__CAM_FRONT__1526915243012465.jpg",
          "./data/nuscenes/samples/CAM_FRONT_RIGHT/n008-2018-05-21-11-06-59-0400__CAM_FRONT_RIGHT__1526915243019956.jpg",
          "./data/nuscenes/samples/CAM_BACK_RIGHT/n008-2018-05-21-11-06-59-0400__CAM_BACK_RIGHT__1526915243027813.jpg",
          "./data/nuscenes/samples/CAM_BACK/n008-2018-05-21-11-06-59-0400__CAM_BACK__1526915243037570.jpg",
          "./data/nuscenes/samples/CAM_BACK_LEFT/n008-2018-05-21-11-06-59-0400__CAM_BACK_LEFT__1526915243047295.jpg"
        ]
      }
    ],
    "val_mate": [
      {
        "sample_idx": "30e55a3ec6184d8cb1944b39ba19d622",
        "lidar_path": "./data/nuscenes/samples/LIDAR_TOP/n015-2018-07-11-11-54-16+0800__LIDAR_TOP__1531281439800013.pcd.bin",
        "image_paths": [
          "./data/nuscenes/samples/CAM_FRONT_LEFT/n015-2018-07-11-11-54-16+0800__CAM_FRONT_LEFT__1531281439754844.jpg",
          "./data/nuscenes/samples/CAM_FRONT/n015-2018-07-11-11-54-16+0800__CAM_FRONT__1531281439762460.jpg",
          "./data/nuscenes/samples/CAM_FRONT_RIGHT/n015-2018-07-11-11-54-16+0800__CAM_FRONT_RIGHT__1531281439770339.jpg",
          "./data/nuscenes/samples/CAM_BACK_RIGHT/n015-2018-07-11-11-54-16+0800__CAM_BACK_RIGHT__1531281439777893.jpg",
          "./data/nuscenes/samples/CAM_BACK/n015-2018-07-11-11-54-16+0800__CAM_BACK__1531281439787525.jpg",
          "./data/nuscenes/samples/CAM_BACK_LEFT/n015-2018-07-11-11-54-16+0800__CAM_BACK_LEFT__1531281439797423.jpg"
        ]
      }
    ]
  },
  "analysis": {
    "histogram": "check_dataset/histogram.png"
  },
  "dataset_path": "/workspace/bevfusion/Paddle3D/data/nuscenes",
  "show_type": "txt",
  "dataset_type": "NuscenesMMDataset"
}
```

In the verification results above, check_pass being true indicates that the dataset format meets the requirements.
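A check like the one described above can also be scripted. The sketch below parses a trimmed copy of the verification result and reads `check_pass` along with a few counts. The `summarize_check` helper is a hypothetical name; in practice the JSON text would be read from wherever your run wrote the verification result file.

```python
import json

# Trimmed stand-in for the verification result shown above.
RESULT_TEXT = """
{
  "done_flag": true,
  "check_pass": true,
  "attributes": {
    "num_classes": 11,
    "train_mate": [{"sample_idx": "f9878012c3f6412184c294c13ba4bac3"}],
    "val_mate": [{"sample_idx": "30e55a3ec6184d8cb1944b39ba19d622"}]
  },
  "dataset_type": "NuscenesMMDataset"
}
"""


def summarize_check(text: str) -> dict:
    """Return the pass flag and a few counts from a verification result."""
    result = json.loads(text)
    attrs = result.get("attributes", {})
    return {
        "passed": bool(result.get("done_flag")) and bool(result.get("check_pass")),
        "num_classes": attrs.get("num_classes"),
        "train_samples_listed": len(attrs.get("train_mate", [])),
        "val_samples_listed": len(attrs.get("val_mate", [])),
    }


summary = summarize_check(RESULT_TEXT)
if not summary["passed"]:
    raise SystemExit("dataset check failed; fix the dataset before training")
print(summary)
```

Failing fast on `check_pass` avoids starting a long training run on a dataset that did not validate.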

4.1.3 Dataset Format Conversion / Dataset Splitting (Optional)

After you complete the dataset verification, you can convert the dataset format or re-split the training/validation ratio by modifying the configuration file or adding hyperparameters.

Details of Format Conversion / Dataset Splitting

The 3D multimodal fusion detection module does not support dataset format conversion or dataset splitting.

4.2 Model Training

A single command can complete the model training. Taking the training of the 3D multimodal fusion detection model BEVFusion as an example:

```bash
python main.py -c paddlex/configs/modules/3d_bev_detection/BEVFusion.yaml \
    -o Global.mode=train \
    -o Global.dataset_dir=./dataset/nuscenes_demo
```

The following steps are required:

- Specify the path of the model's `.yaml` configuration file (here it is `BEVFusion.yaml`). When training other models, you need to specify the corresponding configuration file. The correspondence between models and configuration files can be found in PaddleX Model List (CPU/GPU).
- Set the mode to model training: `-o Global.mode=train`
- Specify the training dataset path: `-o Global.dataset_dir`
- Other related parameters can be set by modifying the fields under `Global` and `Train` in the `.yaml` configuration file, or by appending parameters on the command line. For example, to train on the first 2 GPUs: `-o Global.device=gpu:0,1`; to set the number of training epochs to 10: `-o Train.epochs_iters=10`. For more modifiable parameters and their detailed explanations, refer to the configuration file instructions for the corresponding model task module, PaddleX Common Model Configuration Parameters.
- New Feature: Paddle 3.0 supports CINN (Compiler Infrastructure for Neural Networks) to accelerate training when using a GPU device. Specify `-o Train.dy2st=True` to enable it.
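When several `-o` overrides are combined, it can help to assemble the command programmatically. The sketch below only builds the argument list, using the config path and override keys quoted above; `build_command` is a hypothetical helper, and actually running the list (for example via `subprocess.run`) is left to the caller.

```python
import shlex
from typing import Dict, List

CONFIG = "paddlex/configs/modules/3d_bev_detection/BEVFusion.yaml"


def build_command(mode: str, overrides: Dict[str, object]) -> List[str]:
    """Build a `python main.py` argument list with `-o key=value` overrides."""
    cmd = ["python", "main.py", "-c", CONFIG, "-o", f"Global.mode={mode}"]
    for key, value in overrides.items():
        cmd += ["-o", f"{key}={value}"]
    return cmd


train_cmd = build_command(
    "train",
    {
        "Global.dataset_dir": "./dataset/nuscenes_demo",
        "Global.device": "gpu:0,1",  # first two GPUs
        "Train.epochs_iters": 10,    # ten training epochs
    },
)

# Print a copy-pasteable shell command.
print(shlex.join(train_cmd))
```

Building a list rather than a single string sidesteps shell quoting problems when a path contains spaces.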

More Information

- During model training, PaddleX will automatically save the model weight files, with the default directory being `output`. If you need to specify a save path, you can set it through the `-o Global.output` field in the configuration file.
- PaddleX abstracts away the concepts of dynamic graph weights and static graph weights from you. During model training, both dynamic and static graph weights will be generated. By default, static graph weights are used for model inference.
- After model training is completed, all outputs are saved in the specified output directory (default is `./output/`), typically including the following:
  - `train_result.json`: The training result record file, which logs whether the training task was completed normally, as well as the weight metrics, related file paths, etc.
  - `train.log`: The training log file, which records the changes in model metrics and loss during the training process.
  - `config.yaml`: The training configuration file, which records the hyperparameter settings for this training session.
  - `.pdparams`, `.pdopt`, `.pdiparams`, `.json`: Model weight-related files, including network parameters, optimizer, static graph network parameters, and static graph network structure, etc.
  - Notice: Since Paddle 3.0.0, the format for storing the static graph network structure has changed from protobuf (the former `.pdmodel` file) to JSON (the current `.json` file), for compatibility with PIR and for greater flexibility and scalability.

4.3 Model Evaluation

After completing model training, you can evaluate the specified model weight file on the validation set to verify the model's accuracy. With PaddleX, model evaluation can be completed with a single command:

```bash
python main.py -c paddlex/configs/modules/3d_bev_detection/BEVFusion.yaml \
    -o Global.mode=evaluate \
    -o Global.dataset_dir=./dataset/nuscenes_demo
```

Similar to model training, the following steps are required:

- Specify the path of the model's `.yaml` configuration file (here it is `BEVFusion.yaml`)
- Set the mode to model evaluation: `-o Global.mode=evaluate`
- Specify the path of the validation dataset: `-o Global.dataset_dir`

Other related parameters can be set by modifying the fields under `Global` and `Evaluate` in the `.yaml` configuration file. For details, please refer to PaddleX General Model Configuration File Parameter Description.

More Information

When evaluating the model, the path of the model weight file needs to be specified. Each configuration file has a default weight save path built-in. If you need to change it, you can simply set it by appending a command-line parameter, such as -o Evaluate.weight_path=./output/best_model/best_model.pdparams.

After the model evaluation is completed, an evaluate_result.json file will be generated, which records the evaluation results. Specifically, it records whether the evaluation task was completed normally and the evaluation metrics of the model, including mAP and NDS.
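For readers unfamiliar with NDS: the nuScenes detection score combines mAP with five mean true-positive error metrics (translation, scale, orientation, velocity, and attribute error) as NDS = (5 · mAP + Σ (1 − min(1, mTP))) / 10. The sketch below computes it. The input numbers are invented for illustration and are not results from this module, and `nds` is a hypothetical helper rather than a PaddleX function.

```python
from typing import Dict


def nds(mean_ap: float, tp_errors: Dict[str, float]) -> float:
    """nuScenes detection score from mAP and the five mean TP errors."""
    if len(tp_errors) != 5:
        raise ValueError("expected five true-positive error metrics")
    # Each error is clipped to 1 and converted into a score in [0, 1].
    tp_scores = sum(1.0 - min(1.0, err) for err in tp_errors.values())
    return (5.0 * mean_ap + tp_scores) / 10.0


# Illustrative values only.
example_errors = {"mATE": 0.3, "mASE": 0.25, "mAOE": 0.4, "mAVE": 0.3, "mAAE": 0.2}
print(round(nds(0.5, example_errors), 4))
```

Because mAP carries half of the total weight, NDS and mAP usually move together, while the error terms reward boxes that are well localized, sized, and oriented.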

4.4 Model Inference and Model Integration

After completing the training and evaluation of the model, you can use the trained model weights for inference prediction or integrate them into Python.

4.4.1 Model Inference

- To perform inference prediction via the command line, you only need the following command. Before running it, please download the [sample data](https://paddle-model-ecology.bj.bcebos.com/paddlex/det_3d/demo_det_3d/nuscenes_demo_infer.tar) to your local machine.

```bash
python main.py -c paddlex/configs/modules/3d_bev_detection/BEVFusion.yaml \
    -o Global.mode=predict \
    -o Predict.model_dir="./output/best_model/inference" \
    -o Predict.input="nuscenes_demo_infer.tar"
```

Similar to model training and evaluation, the following steps are required:

- Specify the `.yaml` configuration file path for the model (here it is `BEVFusion.yaml`)
- Specify the mode for model inference prediction: `-o Global.mode=predict`
- Specify the model weight path: `-o Predict.model_dir="./output/best_model/inference"`
- Specify the input data path: `-o Predict.input="..."`

Other related parameters can be set by modifying the fields under Global and Predict in the .yaml configuration file. For details, please refer to PaddleX Common Model Configuration File Parameter Description.

4.4.2 Model Integration

The model can be directly integrated into the PaddleX pipeline or into your own project.

1. Pipeline Integration

The 3D multimodal fusion detection module can be integrated into the 3D detection pipeline of PaddleX. Simply replacing the model path will complete the model update for the target detection module in the relevant pipeline. In pipeline integration, you can deploy your model using high-performance deployment and serving deployment.

2. Module Integration

The weights you generate can be directly integrated into the 3D multimodal fusion detection module. You can refer to the Python example code in Quick Integration. Just replace the model with the path of the model you have trained.
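As a hedged sketch of the module integration described above, the snippet below passes a trained model directory through the `model_name`, `model_dir`, and `device` parameters listed in the `create_model` table in Quick Integration. The directory path reuses the one from the inference command, the helper names are hypothetical, and the PaddleX import is deferred so that the argument-building part can be checked without PaddleX installed.

```python
from typing import Dict


def model_kwargs(model_dir: str, device: str = "gpu:0") -> Dict[str, str]:
    """Keyword arguments for create_model, per the parameter table above."""
    return {"model_name": "BEVFusion", "model_dir": model_dir, "device": device}


def run_inference(model_dir: str, input_path: str, output_dir: str = "./output/") -> None:
    """Load trained weights and save predictions (requires PaddleX)."""
    from paddlex import create_model  # deferred: only needed when actually running

    model = create_model(**model_kwargs(model_dir))
    for res in model.predict(input_path, batch_size=1):
        res.print()
        res.save_to_json(output_dir)


kwargs = model_kwargs("./output/best_model/inference")
print(kwargs)

# Uncomment in an environment with PaddleX and the sample data available:
# run_inference("./output/best_model/inference", "nuscenes_demo_infer.tar")
```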