General OCR Pipeline Usage Tutorial

1. OCR Pipeline Introduction

OCR is a technology that converts text from images into editable text. It is widely used in fields such as document digitization, information extraction, and data processing. OCR can recognize printed text, handwritten text, and even certain types of fonts and symbols.

The general OCR pipeline is used to solve text recognition tasks by extracting text information from images and outputting it in text form. This pipeline supports the use of PP-OCRv3, PP-OCRv4, and PP-OCRv5 models, with the default model being the PP-OCRv5_server model released by PaddleOCR 3.0, which improves by 13 percentage points over PP-OCRv4_server in various scenarios.

The General OCR Pipeline consists of the following 5 modules. Each module can be trained and run for inference independently, and each includes multiple models. For details, see the documentation of the corresponding module.

- Document Image Orientation Classification Module (Optional)

- Text Image Unwarping Module (Optional)

- Text Line Orientation Classification Module (Optional)

- Text Detection Module

- Text Recognition Module

In this pipeline, you can select models based on the benchmark test data provided below.

Document Image Orientation Classification Module (Optional):

| Model | Model Download Link | Top-1 Acc (%) | GPU Inference Time (ms) [Standard Mode / High-Performance Mode] | CPU Inference Time (ms) [Standard Mode / High-Performance Mode] | Model Size (MB) | Description |
|---|---|---|---|---|---|---|
| PP-LCNet_x1_0_doc_ori | Inference Model/Training Model | 99.06 | 2.62 / 0.59 | 3.24 / 1.19 | 7 | Document image classification model based on PP-LCNet_x1_0, with four categories: 0°, 90°, 180°, and 270°. |

Text Image Unwarping Module (Optional):

| Model | Model Download Link | CER | GPU Inference Time (ms) [Standard Mode / High-Performance Mode] | CPU Inference Time (ms) [Standard Mode / High-Performance Mode] | Model Storage Size (MB) | Description |
|---|---|---|---|---|---|---|
| UVDoc | Inference Model/Training Model | 0.179 | 19.05 / 19.05 | - / 869.82 | 30.3 | High-precision text image unwarping model. |

Text Line Orientation Classification Module (Optional):

| Model | Model Download Link | Top-1 Accuracy (%) | GPU Inference Time (ms) [Standard Mode / High-Performance Mode] | CPU Inference Time (ms) [Standard Mode / High-Performance Mode] | Model Storage Size (MB) | Description |
|---|---|---|---|---|---|---|
| PP-LCNet_x0_25_textline_ori | Inference Model/Training Model | 98.85 | 2.16 / 0.41 | 2.37 / 0.73 | 0.96 | Text line classification model based on PP-LCNet_x0_25, with two classes: 0 degrees and 180 degrees |
| PP-LCNet_x1_0_textline_ori | Inference Model/Training Model | 99.42 | - / - | 2.98 / 2.98 | 6.5 | Text line classification model based on PP-LCNet_x1_0, with two classes: 0 degrees and 180 degrees |

Text Detection Module:

| Model | Model Download Link | Detection Hmean (%) | GPU Inference Time (ms) [Standard Mode / High-Performance Mode] | CPU Inference Time (ms) [Standard Mode / High-Performance Mode] | Model Size (MB) | Description |
|---|---|---|---|---|---|---|
| PP-OCRv5_server_det | Inference Model/Training Model | 83.8 | 89.55 / 70.19 | 383.15 / 383.15 | 84.3 | PP-OCRv5 server-side text detection model with higher accuracy, suitable for deployment on high-performance servers |
| PP-OCRv5_mobile_det | Inference Model/Training Model | 79.0 | 10.67 / 6.36 | 57.77 / 28.15 | 4.7 | PP-OCRv5 mobile-side text detection model with higher efficiency, suitable for deployment on edge devices |
| PP-OCRv4_server_det | Inference Model/Training Model | 69.2 | 127.82 / 98.87 | 585.95 / 489.77 | 109 | PP-OCRv4 server-side text detection model with higher accuracy, suitable for deployment on high-performance servers |
| PP-OCRv4_mobile_det | Inference Model/Training Model | 63.8 | 9.87 / 4.17 | 56.60 / 20.79 | 4.7 | PP-OCRv4 mobile-side text detection model with higher efficiency, suitable for deployment on edge devices |

Text Recognition Module:

| Model | Model Download Links | Recognition Avg Accuracy (%) | GPU Inference Time (ms) [Standard Mode / High-Performance Mode] | CPU Inference Time (ms) [Standard Mode / High-Performance Mode] | Model Storage Size (MB) | Introduction |
|---|---|---|---|---|---|---|

| PP-OCRv5_server_rec | Inference Model/Pretrained Model | 86.38 | 8.46 / 2.36 | 31.21 / 31.21 | 81 | PP-OCRv5_rec is a next-generation text recognition model. It aims to efficiently and accurately support the recognition of four major languages—Simplified Chinese, Traditional Chinese, English, and Japanese—as well as complex text scenarios such as handwriting, vertical text, pinyin, and rare characters using a single model. While maintaining recognition performance, it balances inference speed and model robustness, providing efficient and accurate technical support for document understanding in various scenarios. |

| PP-OCRv5_mobile_rec | Inference Model/Pretrained Model | 81.29 | 5.43 / 1.46 | 21.20 / 5.32 | 16 | |

| PP-OCRv4_server_rec_doc | Inference Model/Pretrained Model | 86.58 | 8.69 / 2.78 | 37.93 / 37.93 | 182 | PP-OCRv4_server_rec_doc is trained on a mixed dataset of more Chinese document data and PP-OCR training data, building upon PP-OCRv4_server_rec. It enhances the recognition capabilities for some Traditional Chinese characters, Japanese characters, and special symbols, supporting over 15,000 characters. In addition to improving document-related text recognition, it also enhances general text recognition capabilities. |

| PP-OCRv4_mobile_rec | Inference Model/Pretrained Model | 78.74 | 5.26 / 1.12 | 17.48 / 3.61 | 10.5 | A lightweight recognition model of PP-OCRv4 with high inference efficiency, suitable for deployment on various hardware devices, including edge devices. |

| PP-OCRv4_server_rec | Inference Model/Pretrained Model | 85.19 | 8.75 / 2.49 | 36.93 / 36.93 | 173 | The server-side model of PP-OCRv4, offering high inference accuracy and deployable on various servers. |

| en_PP-OCRv4_mobile_rec | Inference Model/Pretrained Model | 70.39 | 4.81 / 1.23 | 17.20 / 4.18 | 7.5 | An ultra-lightweight English recognition model trained based on the PP-OCRv4 recognition model, supporting English and numeric character recognition. |

👉Details of the Model List

* PP-OCRv5 Multi-Scenario Models

| Model | Model Download Links | Avg Accuracy for Chinese Recognition (%) | Avg Accuracy for English Recognition (%) | Avg Accuracy for Traditional Chinese Recognition (%) | Avg Accuracy for Japanese Recognition (%) | GPU Inference Time (ms) [Standard Mode / High-Performance Mode] | CPU Inference Time (ms) [Standard Mode / High-Performance Mode] | Model Storage Size (MB) | Introduction |
|---|---|---|---|---|---|---|---|---|---|

| PP-OCRv5_server_rec | Inference Model/Pretrained Model | 86.38 | 64.70 | 93.29 | 60.35 | 8.46 / 2.36 | 31.21 / 31.21 | 81 | PP-OCRv5_rec is a next-generation text recognition model. It aims to efficiently and accurately support the recognition of four major languages—Simplified Chinese, Traditional Chinese, English, and Japanese—as well as complex text scenarios such as handwriting, vertical text, pinyin, and rare characters using a single model. While maintaining recognition performance, it balances inference speed and model robustness, providing efficient and accurate technical support for document understanding in various scenarios. |

| PP-OCRv5_mobile_rec | Inference Model/Pretrained Model | 81.29 | 66.00 | 83.55 | 54.65 | 5.43 / 1.46 | 21.20 / 5.32 | 16 |

| Model | Download Link | Recognition Avg Accuracy (%) | GPU Inference Time (ms) [Standard Mode / High-Performance Mode] | CPU Inference Time (ms) [Standard Mode / High-Performance Mode] | Model Storage Size (MB) | Description |
|---|---|---|---|---|---|---|

| PP-OCRv4_server_rec_doc | Inference Model/Training Model | 86.58 | 8.69 / 2.78 | 37.93 / 37.93 | 182 | PP-OCRv4_server_rec_doc is built upon PP-OCRv4_server_rec and trained on mixed data including more Chinese document data and PP-OCR training data. It enhances recognition of traditional Chinese characters, Japanese, and special symbols, supporting 15,000+ characters. It improves both document-specific and general text recognition capabilities. |

| PP-OCRv4_mobile_rec | Inference Model/Training Model | 78.74 | 5.26 / 1.12 | 17.48 / 3.61 | 10.5 | Lightweight recognition model of PP-OCRv4 with high inference efficiency, deployable on various hardware devices including edge devices |

| PP-OCRv4_server_rec | Inference Model/Training Model | 85.19 | 8.75 / 2.49 | 36.93 / 36.93 | 173 | Server-side model of PP-OCRv4 with high inference accuracy, deployable on various server platforms |

| PP-OCRv3_mobile_rec | Inference Model/Training Model | 72.96 | 3.89 / 1.16 | 8.72 / 3.56 | 10.3 | Lightweight recognition model of PP-OCRv3 with high inference efficiency, deployable on various hardware devices including edge devices |

| Model | Download Link | Recognition Avg Accuracy (%) | GPU Inference Time (ms) [Standard Mode / High-Performance Mode] | CPU Inference Time (ms) [Standard Mode / High-Performance Mode] | Model Storage Size (MB) | Description |
|---|---|---|---|---|---|---|

| ch_SVTRv2_rec | Inference Model/Training Model | 68.81 | 10.38 / 8.31 | 66.52 / 30.83 | 80.5 | SVTRv2 is a server-side text recognition model developed by the OpenOCR team from Fudan University Vision and Learning Lab (FVL). It won first prize in the PaddleOCR Algorithm Model Challenge - Task 1: OCR End-to-End Recognition, improving end-to-end recognition accuracy by 6% compared to PP-OCRv4 on List A. |

| Model | Download Link | Recognition Avg Accuracy (%) | GPU Inference Time (ms) [Standard Mode / High-Performance Mode] | CPU Inference Time (ms) [Standard Mode / High-Performance Mode] | Model Storage Size (MB) | Description |
|---|---|---|---|---|---|---|

| ch_RepSVTR_rec | Inference Model/Training Model | 65.07 | 6.29 / 1.57 | 20.64 / 5.40 | 48.8 | RepSVTR is a mobile text recognition model based on SVTRv2. It won first prize in the PaddleOCR Algorithm Model Challenge - Task 1: OCR End-to-End Recognition, improving end-to-end recognition accuracy by 2.5% compared to PP-OCRv4 on List B while maintaining comparable inference speed. |

| Model | Download Link | Recognition Avg Accuracy (%) | GPU Inference Time (ms) [Standard Mode / High-Performance Mode] | CPU Inference Time (ms) [Standard Mode / High-Performance Mode] | Model Storage Size (MB) | Description |
|---|---|---|---|---|---|---|

| en_PP-OCRv4_mobile_rec | Inference Model/Training Model | 70.39 | 4.81 / 1.23 | 17.20 / 4.18 | 7.5 | Ultra-lightweight English recognition model based on PP-OCRv4, supporting English and digit recognition |

| en_PP-OCRv3_mobile_rec | Inference Model/Training Model | 70.69 | 3.56 / 0.78 | 8.44 / 5.78 | 17.3 | Ultra-lightweight English recognition model based on PP-OCRv3, supporting English and digit recognition |

| Model | Model Download Link | Recognition Avg Accuracy (%) | GPU Inference Time (ms) [Standard Mode / High-Performance Mode] | CPU Inference Time (ms) [Standard Mode / High-Performance Mode] | Model Storage Size (MB) | Description |
|---|---|---|---|---|---|---|

| korean_PP-OCRv5_mobile_rec | Inference Model/Pre-trained Model | 88.0 | 5.43 / 1.46 | 21.20 / 5.32 | 14 | An ultra-lightweight Korean text recognition model trained based on the PP-OCRv5 recognition framework. Supports Korean, English and numeric text recognition. |

| latin_PP-OCRv5_mobile_rec | Inference Model/Pre-trained Model | 84.7 | 5.43 / 1.46 | 21.20 / 5.32 | 14 | A Latin-script text recognition model trained based on the PP-OCRv5 recognition framework. Supports most Latin alphabet languages and numeric text recognition. |

| eslav_PP-OCRv5_mobile_rec | Inference Model/Pre-trained Model | 81.6 | 5.43 / 1.46 | 21.20 / 5.32 | 14 | An East Slavic language recognition model trained based on the PP-OCRv5 recognition framework. Supports East Slavic languages, English and numeric text recognition. |

| korean_PP-OCRv3_mobile_rec | Inference Model/Training Model | 60.21 | 3.73 / 0.98 | 8.76 / 2.91 | 9.6 | Ultra-lightweight Korean recognition model based on PP-OCRv3, supporting Korean and numeric recognition |

| japan_PP-OCRv3_mobile_rec | Inference Model/Training Model | 45.69 | 3.86 / 1.01 | 8.62 / 2.92 | 9.8 | Ultra-lightweight Japanese recognition model based on PP-OCRv3, supporting Japanese and numeric recognition |

| chinese_cht_PP-OCRv3_mobile_rec | Inference Model/Training Model | 82.06 | 3.90 / 1.16 | 9.24 / 3.18 | 10.8 | Ultra-lightweight Traditional Chinese recognition model based on PP-OCRv3, supporting Traditional Chinese and numeric recognition |

| te_PP-OCRv3_mobile_rec | Inference Model/Training Model | 95.88 | 3.59 / 0.81 | 8.28 / 6.21 | 8.7 | Ultra-lightweight Telugu recognition model based on PP-OCRv3, supporting Telugu and numeric recognition |

| ka_PP-OCRv3_mobile_rec | Inference Model/Training Model | 96.96 | 3.49 / 0.89 | 8.63 / 2.77 | 17.4 | Ultra-lightweight Kannada recognition model based on PP-OCRv3, supporting Kannada and numeric recognition |

| ta_PP-OCRv3_mobile_rec | Inference Model/Training Model | 76.83 | 3.49 / 0.86 | 8.35 / 3.41 | 8.7 | Ultra-lightweight Tamil recognition model based on PP-OCRv3, supporting Tamil and numeric recognition |

| latin_PP-OCRv3_mobile_rec | Inference Model/Training Model | 76.93 | 3.53 / 0.78 | 8.50 / 6.83 | 8.7 | Ultra-lightweight Latin recognition model based on PP-OCRv3, supporting Latin and numeric recognition |

| arabic_PP-OCRv3_mobile_rec | Inference Model/Training Model | 73.55 | 3.60 / 0.83 | 8.44 / 4.69 | 17.3 | Ultra-lightweight Arabic recognition model based on PP-OCRv3, supporting Arabic and numeric recognition |

| cyrillic_PP-OCRv3_mobile_rec | Inference Model/Training Model | 94.28 | 3.56 / 0.79 | 8.22 / 2.76 | 8.7 | Ultra-lightweight Cyrillic recognition model based on PP-OCRv3, supporting Cyrillic and numeric recognition |

| devanagari_PP-OCRv3_mobile_rec | Inference Model/Training Model | 96.44 | 3.60 / 0.78 | 6.95 / 2.87 | 8.7 | Ultra-lightweight Devanagari recognition model based on PP-OCRv3, supporting Devanagari and numeric recognition |

Test Environment Details:

- Performance Test Environment
  - Test Datasets:
    - Document Image Orientation Classification Model: PaddleX in-house dataset covering ID cards and documents, with 1,000 images.
    - Text Image Correction Model: DocUNet.
    - Text Detection Model: PaddleOCR in-house Chinese dataset covering street views, web images, documents, and handwriting, with 500 images for detection.
    - Chinese Recognition Model: PaddleOCR in-house Chinese dataset covering street views, web images, documents, and handwriting, with 11,000 images for recognition.
    - ch_SVTRv2_rec: PaddleOCR Algorithm Challenge - Task 1: OCR End-to-End Recognition A-set evaluation data.
    - ch_RepSVTR_rec: PaddleOCR Algorithm Challenge - Task 1: OCR End-to-End Recognition B-set evaluation data.
    - English Recognition Model: PaddleX in-house English dataset.
    - Multilingual Recognition Model: PaddleX in-house multilingual dataset.
    - Text Line Orientation Classification Model: PaddleX in-house dataset covering ID cards and documents, with 1,000 images.
  - Hardware Configuration:
    - GPU: NVIDIA Tesla T4
    - CPU: Intel Xeon Gold 6271C @ 2.60GHz
  - Software Environment:
    - Ubuntu 20.04 / CUDA 11.8 / cuDNN 8.9 / TensorRT 8.6.1.6
    - paddlepaddle 3.0.0 / paddleocr 3.0.3

- Inference Mode Description

| Mode | GPU Configuration | CPU Configuration | Acceleration Techniques |
|---|---|---|---|
| Standard Mode | FP32 Precision / No TRT Acceleration | FP32 Precision / 8 Threads | PaddleInference |
| High-Performance Mode | Optimal combination of precision types and acceleration strategies | FP32 Precision / 8 Threads | Optimal backend selection (Paddle/OpenVINO/TRT, etc.) |

If you prioritize model accuracy, choose models with higher accuracy; if inference speed is critical, select faster models; if model size matters, opt for smaller models.
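As a rough illustration of this trade-off, the sketch below picks the most accurate text detection model that fits an optional latency or size budget. The numbers are copied from the Text Detection Module table above (GPU time in Standard Mode); the `DetModel` class and the `pick_model` helper are hypothetical names used only for this example and are not part of PaddleOCR.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DetModel:
    name: str
    hmean: float    # Detection Hmean (%)
    gpu_ms: float   # GPU inference time in Standard Mode (ms)
    size_mb: float  # Model size (MB)


# Values copied from the Text Detection Module benchmark table.
DET_MODELS = [
    DetModel("PP-OCRv5_server_det", 83.8, 89.55, 84.3),
    DetModel("PP-OCRv5_mobile_det", 79.0, 10.67, 4.7),
    DetModel("PP-OCRv4_server_det", 69.2, 127.82, 109.0),
    DetModel("PP-OCRv4_mobile_det", 63.8, 9.87, 4.7),
]


def pick_model(models, max_gpu_ms=None, max_size_mb=None):
    """Return the most accurate model within the optional limits, or None."""
    candidates = [
        m for m in models
        if (max_gpu_ms is None or m.gpu_ms <= max_gpu_ms)
        and (max_size_mb is None or m.size_mb <= max_size_mb)
    ]
    return max(candidates, key=lambda m: m.hmean) if candidates else None


best_overall = pick_model(DET_MODELS)                # accuracy first
best_fast = pick_model(DET_MODELS, max_gpu_ms=20.0)  # latency budget of 20 ms
print(best_overall.name, best_fast.name)
```

The selected name can then be passed as text_detection_model_name when configuring the pipeline.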

2. Quick Start

Before using the general OCR pipeline locally, ensure you have installed the wheel package by following the Installation Guide. Once installed, you can experience OCR via the command line or Python integration.

Please note: If you encounter issues such as the program becoming unresponsive, unexpected program termination, running out of memory resources, or extremely slow inference during execution, please try adjusting the configuration according to the documentation, such as disabling unnecessary features or using lighter-weight models.

2.1 Command Line

Run a single command to quickly test the OCR pipeline. Before running the code below, please download the example image locally:

```bash
# Default: uses the PP-OCRv5 models
paddleocr ocr -i https://paddle-model-ecology.bj.bcebos.com/paddlex/imgs/demo_image/general_ocr_002.png \
    --use_doc_orientation_classify False \
    --use_doc_unwarping False \
    --use_textline_orientation False \
    --save_path ./output \
    --device gpu:0

# Use the PP-OCRv4 models via --ocr_version PP-OCRv4
paddleocr ocr -i ./general_ocr_002.png --ocr_version PP-OCRv4
```
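When the command is launched from a script, it can help to assemble the argument list programmatically. The sketch below is only an illustration: `build_ocr_command` is a hypothetical helper, not part of PaddleOCR, and it only uses flags that appear in the commands above.

```python
import shlex


def build_ocr_command(image, **options):
    """Build the argv list for `paddleocr ocr` from keyword options."""
    argv = ["paddleocr", "ocr", "-i", image]
    for name, value in options.items():
        # Each keyword becomes a `--name value` pair, as in the commands above.
        argv += [f"--{name}", str(value)]
    return argv


cmd = build_ocr_command(
    "./general_ocr_002.png",
    use_doc_orientation_classify=False,
    use_doc_unwarping=False,
    use_textline_orientation=False,
    save_path="./output",
    ocr_version="PP-OCRv4",
)
print(shlex.join(cmd))
# To execute it (requires paddleocr to be installed):
#   import subprocess; subprocess.run(cmd, check=True)
```

Passing the list form to subprocess avoids shell quoting problems with paths that contain spaces.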

The command line supports additional parameters, which are described in the table below.

| Parameter | Parameter Description | Parameter Type | Default Value |
|---|---|---|---|
| input | Data to be predicted, required. Accepts the local path of an image or PDF file, such as /root/data/img.jpg; the URL of an image or PDF file; or a local directory containing the images to be predicted, such as /root/data/ (predicting PDFs in a directory is not currently supported; a PDF must be given by its exact file path). | str | |
| save_path | Path to save inference result files. If not set, inference results will not be saved locally. | str | |
| doc_orientation_classify_model_name | Name of the document orientation classification model. If not set, the pipeline default model will be used. | str | |
| doc_orientation_classify_model_dir | Directory path of the document orientation classification model. If not set, the official model will be downloaded. | str | |
| doc_unwarping_model_name | Name of the text image unwarping model. If not set, the pipeline default model will be used. | str | |
| doc_unwarping_model_dir | Directory path of the text image unwarping model. If not set, the official model will be downloaded. | str | |
| text_detection_model_name | Name of the text detection model. If not set, the pipeline default model will be used. | str | |
| text_detection_model_dir | Directory path of the text detection model. If not set, the official model will be downloaded. | str | |
| textline_orientation_model_name | Name of the text line orientation model. If not set, the pipeline default model will be used. | str | |
| textline_orientation_model_dir | Directory path of the text line orientation model. If not set, the official model will be downloaded. | str | |
| textline_orientation_batch_size | Batch size for the text line orientation model. If not set, the default batch size will be 1. | int | |
| text_recognition_model_name | Name of the text recognition model. If not set, the pipeline default model will be used. | str | |
| text_recognition_model_dir | Directory path of the text recognition model. If not set, the official model will be downloaded. | str | |
| text_recognition_batch_size | Batch size for the text recognition model. If not set, the default batch size will be 1. | int | |
| use_doc_orientation_classify | Whether to load and use the document orientation classification module. If not set, the pipeline's initialized value for this parameter (defaults to True) will be used. | bool | |
| use_doc_unwarping | Whether to load and use the text image unwarping module. If not set, the pipeline's initialized value for this parameter (defaults to True) will be used. | bool | |
| use_textline_orientation | Whether to load and use the text line orientation module. If not set, the pipeline's initialized value for this parameter (defaults to True) will be used. | bool | |
| text_det_limit_side_len | Image side length limitation for text detection. Any integer greater than 0. If not set, the pipeline's initialized value for this parameter (defaults to 64) will be used. | int | |
| text_det_limit_type | Type of side length limit for text detection. Supports min and max. min ensures that the shortest side of the image is not smaller than text_det_limit_side_len, and max ensures that the longest side of the image is not larger than text_det_limit_side_len. If not set, the pipeline's initialized value for this parameter (defaults to min) will be used. | str | |
| text_det_thresh | Pixel threshold for text detection. In the output probability map, pixels with scores higher than this threshold are considered text pixels. Any floating-point number greater than 0. If not set, the pipeline's initialized value for this parameter (defaults to 0.3) will be used. | float | |
| text_det_box_thresh | Text detection box threshold. If the average score of all pixels within the detected result boundary is higher than this threshold, the result is considered a text region. Any floating-point number greater than 0. If not set, the pipeline's initialized value for this parameter (defaults to 0.6) will be used. | float | |
| text_det_unclip_ratio | Text detection expansion coefficient, used to expand the text region; the larger the value, the larger the expanded area. Any floating-point number greater than 0. If not set, the pipeline's initialized value for this parameter (defaults to 2.0) will be used. | float | |
| text_det_input_shape | Input shape for text detection; set three values to represent C, H, and W. | int | |
| text_rec_score_thresh | Text recognition threshold. Text results with scores higher than this threshold are retained. Any floating-point number greater than 0. If not set, the pipeline's initialized value for this parameter (defaults to 0.0, i.e., no threshold) will be used. | float | |
| text_rec_input_shape | Input shape for text recognition. | tuple | |
| lang | OCR model language to use. The table in the appendix lists all the supported languages. | str | |
| ocr_version | Version of OCR models. Not every ocr_version supports every lang option; refer to the correspondence table in the appendix for details. | str | |
| det_model_dir | Deprecated. Use text_detection_model_dir instead; the two cannot be specified at the same time. | str | |
| det_limit_side_len | Deprecated. Use text_det_limit_side_len instead; the two cannot be specified at the same time. | int | |
| det_limit_type | Deprecated. Use text_det_limit_type instead; the two cannot be specified at the same time. | str | |
| det_db_thresh | Deprecated. Use text_det_thresh instead; the two cannot be specified at the same time. | float | |
| det_db_box_thresh | Deprecated. Use text_det_box_thresh instead; the two cannot be specified at the same time. | float | |
| det_db_unclip_ratio | Deprecated. Use text_det_unclip_ratio instead; the two cannot be specified at the same time. | float | |
| rec_model_dir | Deprecated. Use text_recognition_model_dir instead; the two cannot be specified at the same time. | str | |
| rec_batch_num | Deprecated. Use text_recognition_batch_size instead; the two cannot be specified at the same time. | int | |
| use_angle_cls | Deprecated. Use use_textline_orientation instead; the two cannot be specified at the same time. | bool | |
| cls_model_dir | Deprecated. Use textline_orientation_model_dir instead; the two cannot be specified at the same time. | str | |
| cls_batch_num | Deprecated. Use textline_orientation_batch_size instead; the two cannot be specified at the same time. | int | |
| device | Device for inference. A specific card number can be given (for example, gpu:0). | str | |
| enable_hpi | Whether to enable high-performance inference. | bool | False |
| use_tensorrt | Whether to use the Paddle Inference TensorRT subgraph engine. If the model does not support acceleration through TensorRT, setting this flag will not enable acceleration. For Paddle with CUDA version 11.8, the compatible TensorRT version is 8.x (x>=6), and it is recommended to install TensorRT 8.6.1.6. | bool | False |
| precision | Computational precision, such as fp32, fp16. | str | fp32 |
| enable_mkldnn | Whether to enable MKL-DNN acceleration for inference. If MKL-DNN is unavailable or the model does not support it, acceleration will not be used even if this flag is set. | bool | True |
| mkldnn_cache_capacity | MKL-DNN cache capacity. | int | 10 |
| cpu_threads | Number of threads used for inference on CPU. | int | 8 |
| paddlex_config | Path to the PaddleX pipeline configuration file. | str | |
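To make the min and max semantics of text_det_limit_type concrete, here is a minimal sketch of the resize factor they imply. It follows only the wording in the table above; the function names are hypothetical, and the actual pipeline may apply further steps (such as rounding to a stride or an upper size cap) that are not modeled here.

```python
def scale_for_limit(height, width, limit_side_len, limit_type):
    """Return the resize factor implied by the side-length limit."""
    if limit_type == "min":
        shortest = min(height, width)
        # Upscale only when the shortest side is below the limit.
        return limit_side_len / shortest if shortest < limit_side_len else 1.0
    if limit_type == "max":
        longest = max(height, width)
        # Downscale only when the longest side exceeds the limit.
        return limit_side_len / longest if longest > limit_side_len else 1.0
    raise ValueError(f"unsupported limit_type: {limit_type!r}")


def resized_shape(height, width, limit_side_len, limit_type):
    """Apply the factor to both sides, keeping the aspect ratio."""
    scale = scale_for_limit(height, width, limit_side_len, limit_type)
    return round(height * scale), round(width * scale)


print(resized_shape(480, 640, 736, "min"))    # shortest side raised to the limit
print(resized_shape(3000, 4000, 960, "max"))  # longest side reduced to the limit
```

With min, small images are enlarged so that thin text stays detectable; with max, large images are reduced to bound memory use and latency.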

Results are printed to the terminal:

```
{'res': {'input_path': './general_ocr_002.png', 'page_index': None, 'model_settings': {'use_doc_preprocessor': True, 'use_textline_orientation': False}, 'doc_preprocessor_res': {'input_path': None, 'page_index': None, 'model_settings': {'use_doc_orientation_classify': False, 'use_doc_unwarping': False}, 'angle': -1}, 'dt_polys': array([[[ 3, 10],
...,
[ 4, 30]],
...,
[[ 99, 456],
...,
[ 99, 479]]], dtype=int16), 'text_det_params': {'limit_side_len': 736, 'limit_type': 'min', 'thresh': 0.3, 'max_side_limit': 4000, 'box_thresh': 0.6, 'unclip_ratio': 1.5}, 'text_type': 'general', 'textline_orientation_angles': array([-1, ..., -1]), 'text_rec_score_thresh': 0.0, 'rec_texts': ['www.997700', '', 'Cm', '登机牌', 'BOARDING', 'PASS', 'CLASS', '序号SERIAL NO.', '座位号', 'SEAT NO.', '航班FLIGHT', '日期DATE', '舱位', '', 'W', '035', '12F', 'MU2379', '03DEc', '始发地', 'FROM', '登机口', 'GATE', '登机时间BDT', '目的地TO', '福州', 'TAIYUAN', 'G11', 'FUZHOU', '身份识别IDNO.', '姓名NAME', 'ZHANGQIWEI', '票号TKT NO.', '张祺伟', '票价FARE', 'ETKT7813699238489/1', '登机口于起飞前10分钟关闭 GATESCL0SE10MINUTESBEFOREDEPARTURETIME'], 'rec_scores': array([0.67634439, ..., 0.97416091]), 'rec_polys': array([[[ 3, 10],
...,
[ 4, 30]],
...,
[[ 99, 456],
...,
[ 99, 479]]], dtype=int16), 'rec_boxes': array([[ 3, ..., 30],
...,
[ 99, ..., 479]], dtype=int16)}}
```
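The rec_texts and rec_scores entries in this output are parallel sequences, so downstream code typically pairs them up and drops empty or low-confidence entries. The sketch below assumes you already hold the inner result as a plain dict with those two keys; `confident_texts` is a hypothetical helper, and the sample values are illustrative rather than real pipeline output.

```python
def confident_texts(res, min_score=0.5):
    """Return (text, score) pairs with non-empty text and score >= min_score."""
    pairs = zip(res["rec_texts"], res["rec_scores"])
    return [(text, float(score)) for text, score in pairs if text and score >= min_score]


# Hand-made sample using the same keys as the printed result (scores are illustrative).
sample = {
    "rec_texts": ["www.997700", "", "登机牌", "BOARDING"],
    "rec_scores": [0.68, 0.0, 0.99, 0.98],
}

for text, score in confident_texts(sample, min_score=0.9):
    print(f"{score:.2f}  {text}")
```

Filtering after the fact like this is an alternative to raising text_rec_score_thresh, and lets you keep the raw output for inspection.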

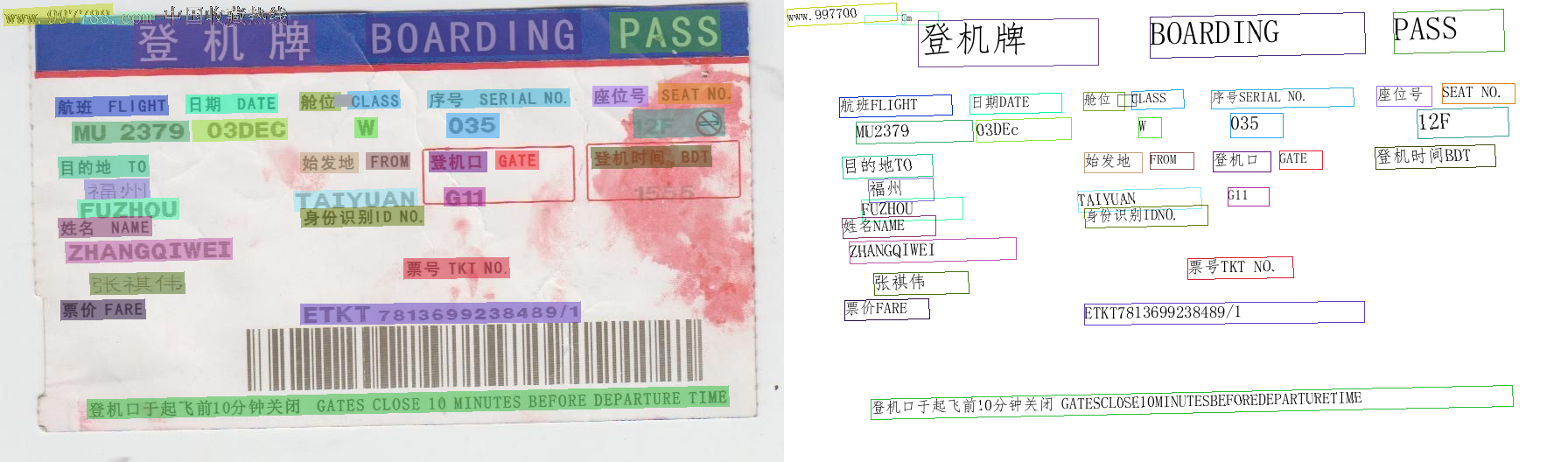

If save_path is specified, the visualization results will be saved under save_path. The visualization output is shown below:

2.2 Python Script Integration

The command-line method is for quick testing. For project integration, you can achieve OCR inference with just a few lines of code:

```python
from paddleocr import PaddleOCR

ocr = PaddleOCR(
    use_doc_orientation_classify=False,  # Disable the document orientation classification model
    use_doc_unwarping=False,  # Disable the text image unwarping model
    use_textline_orientation=False,  # Disable the text line orientation classification model
)
# ocr = PaddleOCR(lang="en")  # Use the English model by specifying the language
# ocr = PaddleOCR(ocr_version="PP-OCRv4")  # Use another PP-OCR version
# ocr = PaddleOCR(device="gpu")  # Enable GPU acceleration for model inference
# ocr = PaddleOCR(
#     text_detection_model_name="PP-OCRv5_mobile_det",
#     text_recognition_model_name="PP-OCRv5_mobile_rec",
#     use_doc_orientation_classify=False,
#     use_doc_unwarping=False,
#     use_textline_orientation=False,
# )  # Switch to the PP-OCRv5_mobile models

result = ocr.predict("./general_ocr_002.png")
for res in result:
    res.print()  # Print the result to the terminal
    res.save_to_img("output")  # Save the visualization under ./output
    res.save_to_json("output")  # Save the result as JSON under ./output
```

In the above Python script, the following steps are performed:

(1) Instantiate the OCR pipeline object via PaddleOCR(), with specific parameter descriptions as follows:

| Parameter | Parameter Description | Parameter Type | Default Value |

|---|---|---|---|

doc_orientation_classify_model_name |

Name of the document orientation classification model. If set to None, the pipeline's default model will be used. |

str|None |

None |

doc_orientation_classify_model_dir |

Directory path of the document orientation classification model. If set to None, the official model will be downloaded. |

str|None |

None |

doc_unwarping_model_name |

Name of the text image unwarping model. If set to None, the pipeline's default model will be used. |

str|None |

None |

doc_unwarping_model_dir |

Directory path of the text image unwarping model. If set to None, the official model will be downloaded. |

str|None |

None |

text_detection_model_name |

Name of the text detection model. If set to None, the pipeline's default model will be used. |

str|None |

None |

text_detection_model_dir |

Directory path of the text detection model. If set to None, the official model will be downloaded. |

str|None |

None |

textline_orientation_model_name |

Name of the text line orientation model. If set to None, the pipeline's default model will be used. |

str|None |

None |

textline_orientation_model_dir |

Directory path of the text line orientation model. If set to None, the official model will be downloaded. |

str|None |

None |

textline_orientation_batch_size |

Batch size for the text line orientation model. If set to None, the default batch size will be 1. |

int|None |

None |

text_recognition_model_name |

Name of the text recognition model. If set to None, the pipeline's default model will be used. |

str|None |

None |

text_recognition_model_dir |

Directory path of the text recognition model. If set to None, the official model will be downloaded. |

str|None |

None |

text_recognition_batch_size |

Batch size for the text recognition model. If set to None, the default batch size will be 1. |

int|None |

None |

use_doc_orientation_classify |

Whether to load and use the document orientation classification module. If set to None, the pipeline's initialized value for this parameter (defaults to True) will be used. |

bool|None |

None |

use_doc_unwarping |

Whether to load and use the text image unwarping module. If set to None, the pipeline's initialized value for this parameter (defaults to True) will be used. |

bool|None |

None |

use_textline_orientation |

Whether to load and use the text line orientation module. If set to None, the pipeline's initialized value for this parameter (defaults to True) will be used. |

bool|None |

None |

text_det_limit_side_len |

Image side length limitation for text detection.

|

int|None |

None |

text_det_limit_type |

Type of side length limit for text detection.

|

str|None |

None |

text_det_thresh |

Pixel threshold for text detection. Pixels with scores higher than this threshold in the output probability map will be considered text pixels.

|

float|None |

None |

text_det_box_thresh |

Box threshold for text detection. A detection result will be considered a text region if the average score of all pixels within the bounding box is higher than this threshold.

|

float|None |

None |

text_det_unclip_ratio |

Dilation coefficient for text detection. This method is used to dilate the text region, and the larger this value, the larger the dilated area.

|

float|None |

None |

text_det_input_shape |

Input shape for text detection. | tuple |

None |

text_rec_score_thresh |

Recognition score threshold for text. Text results with scores higher than this threshold will be retained.

|

float|None |

None |

text_rec_input_shape |

Input shape for text recognition. | tuple |

None |

lang |

OCR model language to use. The table in the appendix lists all the supported languages. | str|None |

None |

ocr_version |

Version of OCR models.

ocr_version supports all lang options. Please refer to the correspondence table in the appendix for details.

|

str|None |

None |

device |

Device for inference. Supports specifying a specific card number:

|

str|None |

None |

enable_hpi |

Whether to enable high-performance inference. | bool |

False |

use_tensorrt |

Whether to use the Paddle Inference TensorRT subgraph engine. If the model does not support acceleration through TensorRT, setting this flag will not enable acceleration. For Paddle with CUDA version 11.8, the compatible TensorRT version is 8.x (x>=6), and it is recommended to install TensorRT 8.6.1.6. |

bool |

False |

precision |

Computational precision, such as fp32, fp16. | str |

"fp32" |

enable_mkldnn |

Whether to enable MKL-DNN acceleration for inference. If MKL-DNN is unavailable or the model does not support it, acceleration will not be used even if this flag is set. | bool |

True |

mkldnn_cache_capacity |

MKL-DNN cache capacity. | int |

10 |

cpu_threads |

Number of threads used for CPU inference. | int |

8 |

paddlex_config |

Path to the PaddleX pipeline configuration file. | str|None |

None |
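The text_det_thresh and text_det_box_thresh descriptions in the table above can be illustrated with a small pure-Python sketch. It is not the pipeline's actual post-processing code: the probability map, the axis-aligned box, and the helper names (binarize, box_score, keep_box) are hypothetical and only mirror the wording of the two parameter descriptions.

```python
def binarize(prob_map, thresh):
    """Mark pixels whose score is higher than thresh as text (1), all others as 0."""
    return [[1 if p > thresh else 0 for p in row] for row in prob_map]


def box_score(prob_map, x_min, y_min, x_max, y_max):
    """Average score of all pixels inside an axis-aligned box (inclusive bounds)."""
    values = [
        prob_map[y][x]
        for y in range(y_min, y_max + 1)
        for x in range(x_min, x_max + 1)
    ]
    return sum(values) / len(values)


def keep_box(prob_map, box, box_thresh):
    """Keep the box only if its average score is higher than box_thresh."""
    return box_score(prob_map, *box) > box_thresh


# Hypothetical 3x4 probability map, for illustration only.
prob_map = [
    [0.1, 0.2, 0.1, 0.0],
    [0.2, 0.9, 0.8, 0.1],
    [0.1, 0.7, 0.6, 0.0],
]

mask = binarize(prob_map, thresh=0.3)                    # role of text_det_thresh
kept = keep_box(prob_map, (1, 1, 2, 2), box_thresh=0.6)  # role of text_det_box_thresh
```

Raising the pixel threshold shrinks the mask, while raising the box threshold discards candidate regions whose average score is low.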

(2) Invoke the predict() method of the OCR pipeline object for inference prediction, which returns a results list. Additionally, the pipeline provides the predict_iter() method. Both methods are completely consistent in parameter acceptance and result return, except that predict_iter() returns a generator, which can process and obtain prediction results incrementally, suitable for handling large datasets or scenarios where memory saving is desired. You can choose to use either of these two methods according to actual needs. The following are the parameters and descriptions of the predict() method:

| Parameter | Parameter Description | Parameter Type | Default Value |

|---|---|---|---|

input |

Data to be predicted, supporting multiple input types, required.

|

Python Var|str|list |

|

use_doc_orientation_classify |

Whether to use the document orientation classification module during inference. | bool|None |

None |

use_doc_unwarping |

Whether to use the text image unwarping module during inference. | bool|None |

None |

use_textline_orientation |

Whether to use the text line orientation classification module during inference. | bool|None |

None |

text_det_limit_side_len |

Same meaning as the instantiation parameters. If set to None, the instantiation value is used; otherwise, this parameter takes precedence. |

int|None |

None |

text_det_limit_type |

Same meaning as the instantiation parameters. If set to None, the instantiation value is used; otherwise, this parameter takes precedence. |

str|None |

None |

text_det_thresh |

Same meaning as the instantiation parameters. If set to None, the instantiation value is used; otherwise, this parameter takes precedence. |

float|None |

None |

text_det_box_thresh |

Same meaning as the instantiation parameters. If set to None, the instantiation value is used; otherwise, this parameter takes precedence. |

float|None |

None |

text_det_unclip_ratio |

Same meaning as the instantiation parameters. If set to None, the instantiation value is used; otherwise, this parameter takes precedence. |

float|None |

None |

text_rec_score_thresh |

Same meaning as the instantiation parameters. If set to None, the instantiation value is used; otherwise, this parameter takes precedence. |

float|None |

None |
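The precedence rule stated in the table above (None falls back to the instantiation value, anything else overrides it) can be sketched as follows. The helper names resolve and effective_settings are hypothetical and are not part of the PaddleOCR API; the parameter values are made up for illustration.

```python
def resolve(call_value, init_value):
    """Return the predict()-time value unless it is None, else the instantiation value."""
    return init_value if call_value is None else call_value


def effective_settings(init_params, call_params):
    """Combine instantiation-time and predict()-time parameters."""
    return {
        name: resolve(call_params.get(name), init_value)
        for name, init_value in init_params.items()
    }


# Hypothetical values, for illustration only.
init_params = {
    "text_det_thresh": 0.3,
    "text_det_box_thresh": 0.6,
    "text_rec_score_thresh": 0.5,
}
call_params = {
    "text_det_thresh": 0.5,         # overrides the instantiation value
    "text_det_box_thresh": None,    # falls back to the instantiation value
    "text_rec_score_thresh": 0.0,   # 0.0 is not None, so it still takes precedence
}

settings = effective_settings(init_params, call_params)
```

The check is deliberately `is None` rather than a truthiness test, so that explicit values such as 0.0 or False are not mistaken for "unset".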

(3) Process the prediction results. The prediction result of each sample is a corresponding Result object, which supports operations of printing, saving as an image, and saving as a json file:

| Method | Method Description | Parameter | Parameter Type | Parameter Description | Default Value |
|---|---|---|---|---|---|
| print() | Print the results to the terminal | format_json | bool | Whether to format the output content with JSON indentation. | True |
| | | indent | int | Specify the indentation level to beautify the output JSON data and make it more readable, only valid when format_json is True. | 4 |
| | | ensure_ascii | bool | Control whether to escape non-ASCII characters as Unicode. When set to True, all non-ASCII characters will be escaped; False retains the original characters, only valid when format_json is True. | False |
| save_to_json() | Save the results as a json-formatted file. | save_path | str | File path to save. When it is a directory, the saved file name will be consistent with the input file type name. | No default |
| | | indent | int | Specify the indentation level to beautify the output JSON data and make it more readable, only valid when format_json is True. | 4 |
| | | ensure_ascii | bool | Control whether to escape non-ASCII characters as Unicode. When set to True, all non-ASCII characters will be escaped; False retains the original characters, only valid when format_json is True. | False |
| save_to_img() | Save the results as an image-formatted file | save_path | str | File path to save, supporting directory or file path. | No default |

- Calling the print() method will print the results to the terminal. The content printed to the terminal is explained as follows:
    - input_path: (str) Input path of the image to be predicted
    - page_index: (Union[int, None]) If the input is a PDF file, it indicates which page of the PDF it is; otherwise, it is None
    - model_settings: (Dict[str, bool]) Model parameters configured for the pipeline
        - use_doc_preprocessor: (bool) Control whether to enable the document preprocessing sub-pipeline
        - use_textline_orientation: (bool) Control whether to enable the text line orientation classification function
    - doc_preprocessor_res: (Dict[str, Union[str, Dict[str, bool], int]]) Output results of the document preprocessing sub-pipeline. Only exists when use_doc_preprocessor=True
        - input_path: (Union[str, None]) Image path accepted by the image preprocessing sub-pipeline. When the input is numpy.ndarray, it is saved as None
        - model_settings: (Dict) Model configuration parameters of the preprocessing sub-pipeline
            - use_doc_orientation_classify: (bool) Control whether to enable document orientation classification
            - use_doc_unwarping: (bool) Control whether to enable text image unwarping
        - angle: (int) Prediction result of document orientation classification. When enabled, the values are [0,1,2,3], corresponding to [0°,90°,180°,270°]; when disabled, it is -1
    - dt_polys: (List[numpy.ndarray]) List of text detection polygon boxes. Each detection box is represented by a numpy array of 4 vertex coordinates, with the array shape being (4, 2) and the data type being int16
    - dt_scores: (List[float]) List of confidence scores for text detection boxes
    - text_det_params: (Dict[str, Dict[str, int, float]]) Configuration parameters for the text detection module
        - limit_side_len: (int) Side length limit value during image preprocessing
        - limit_type: (str) Processing method for side length limits
        - thresh: (float) Confidence threshold for text pixel classification
        - box_thresh: (float) Confidence threshold for text detection boxes
        - unclip_ratio: (float) Dilation coefficient for text detection boxes
    - text_type: (str) Type of text detection, currently fixed as "general"
    - textline_orientation_angles: (List[int]) Prediction results of text line orientation classification. When enabled, actual angle values are returned (e.g., [0,0,1]); when disabled, [-1,-1,-1] is returned
    - text_rec_score_thresh: (float) Filtering threshold for text recognition results
    - rec_texts: (List[str]) List of text recognition results, containing only texts with confidence scores exceeding text_rec_score_thresh
    - rec_scores: (List[float]) List of text recognition confidence scores, filtered by text_rec_score_thresh
    - rec_polys: (List[numpy.ndarray]) List of text detection boxes filtered by confidence, in the same format as dt_polys
    - rec_boxes: (numpy.ndarray) Array of rectangular bounding boxes for detection boxes, with shape (n, 4) and dtype int16. Each row represents the [x_min, y_min, x_max, y_max] coordinates of a rectangular box, where (x_min, y_min) is the top-left coordinate and (x_max, y_max) is the bottom-right coordinate

- Calling the save_to_json() method will save the above content to the specified save_path. If a directory is specified, the save path will be save_path/{your_img_basename}_res.json. If a file is specified, it will be saved directly to that file. Since json files do not support saving numpy arrays, numpy.array types will be converted to list form.

- Calling the save_to_img() method will save the visualization results to the specified save_path. If a directory is specified, the save path will be save_path/{your_img_basename}_ocr_res_img.{your_img_extension}. If a file is specified, it will be saved directly to that file. (The pipeline usually generates many result images, so it is not recommended to directly specify a specific file path, as multiple images will be overwritten, leaving only the last one.)
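The output naming rules described above for save_to_json() and save_to_img() can be sketched with pathlib. This is only an illustration of the documented naming pattern, not the library's implementation. In particular, deciding "file or directory" by the presence of a file suffix is an assumption made here, and the function names are hypothetical.

```python
from pathlib import Path


def json_output_path(save_path, input_path):
    """Where a JSON result would land, following the naming rule described above."""
    save_path = Path(save_path)
    if save_path.suffix:  # assumption: a suffix means save_path names a file
        return save_path
    return save_path / f"{Path(input_path).stem}_res.json"


def img_output_path(save_path, input_path):
    """Where a visualization image would land, following the naming rule described above."""
    save_path = Path(save_path)
    if save_path.suffix:  # assumption: a suffix means save_path names a file
        return save_path
    source = Path(input_path)
    return save_path / f"{source.stem}_ocr_res_img{source.suffix}"


json_path = json_output_path("output", "./general_ocr_002.png")
img_path = img_output_path("output", "./general_ocr_002.png")
```

Passing a directory keeps one output file per input image, which avoids the overwriting problem that arises when a single fixed file path is used for many results.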

Additionally, you can also obtain the visualized image with results and prediction results through attributes, as follows:

| Attribute | Attribute Description |
|---|---|
| json | Get the prediction results in json format |
| img | Get the visualized image in dict format |

- The prediction results obtained by the json attribute are in dict format, and the content is consistent with that saved by calling the save_to_json() method.

- The img attribute returns a dictionary-type result. The keys are ocr_res_img and preprocessed_img, with corresponding values being two Image.Image objects: one for displaying the visualized image of OCR results and the other for displaying the visualized image of image preprocessing. If the image preprocessing submodule is not used, only ocr_res_img will be included in the dictionary.
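As a rough illustration of how the result fields described above fit together, the sketch below pairs each recognized text with its score and rectangular box, and maps the orientation class to degrees. The sample dictionary is hand-written for illustration (it is not real pipeline output), and the helper names summarize and doc_rotation_degrees are hypothetical.

```python
# Orientation classes [0, 1, 2, 3] correspond to [0°, 90°, 180°, 270°]; -1 means disabled.
ANGLE_TO_DEGREES = {0: 0, 1: 90, 2: 180, 3: 270}


def summarize(res, min_score=0.0):
    """Pair rec_texts, rec_scores and rec_boxes, keeping entries with score >= min_score."""
    lines = []
    for text, score, box in zip(res["rec_texts"], res["rec_scores"], res["rec_boxes"]):
        if score >= min_score:
            x_min, y_min, x_max, y_max = box
            lines.append(
                {
                    "text": text,
                    "score": score,
                    "width": x_max - x_min,
                    "height": y_max - y_min,
                }
            )
    return lines


def doc_rotation_degrees(res):
    """Return the document rotation in degrees, or None if unavailable or disabled."""
    pre = res.get("doc_preprocessor_res")
    if pre is None:
        return None
    return ANGLE_TO_DEGREES.get(pre["angle"])


# Hand-written sample in the documented layout, for illustration only.
sample = {
    "doc_preprocessor_res": {"angle": 1},
    "rec_texts": ["Hello", "World"],
    "rec_scores": [0.98, 0.42],
    "rec_boxes": [[10, 20, 110, 50], [10, 60, 90, 95]],
}

confident_lines = summarize(sample, min_score=0.5)
rotation = doc_rotation_degrees(sample)
```

Because numpy arrays are converted to lists in the JSON form, plain list unpacking as used here works on the saved JSON content.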

3. Development Integration/Deployment¶

If the general OCR pipeline meets your requirements for inference speed and accuracy, you can proceed directly with development integration/deployment.

If you need to apply the general OCR pipeline directly in your Python project, you can refer to the sample code in 2.2 Python Script Integration.

Additionally, PaddleOCR provides two other deployment methods, detailed as follows:

🚀 High-Performance Inference: In real-world production environments, many applications have stringent performance requirements (especially for response speed) to ensure system efficiency and smooth user experience. To address this, PaddleOCR offers high-performance inference capabilities, which deeply optimize model inference and pre/post-processing to achieve significant end-to-end speed improvements. For detailed high-performance inference workflows, refer to the High-Performance Inference Guide.

☁️ Service Deployment: Service deployment is a common form of deployment in production environments. By encapsulating inference functionality as a service, clients can access these services via network requests to obtain inference results. For detailed pipeline service deployment workflows, refer to the Service Deployment Guide.

Below are the API reference for basic service deployment and examples of multi-language service calls:

API Reference

For the main operations provided by the service:

- The HTTP request method is POST.

- Both the request body and response body are JSON data (JSON objects).

- When the request is processed successfully, the response status code is 200, and the response body has the following attributes:

| Name | Type | Description |
|---|---|---|
| logId | string | UUID of the request. |
| errorCode | integer | Error code. Fixed as 0. |
| errorMsg | string | Error message. Fixed as "Success". |
| result | object | Operation result. |

- When the request fails, the response body has the following attributes:

| Name | Type | Description |
|---|---|---|
| logId | string | UUID of the request. |
| errorCode | integer | Error code. Same as the response status code. |
| errorMsg | string | Error message. |
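The success and failure envelopes described above can be handled with one small helper. The following is a sketch only: the OcrServiceError class and the unwrap function are hypothetical names introduced here, and the sample bodies are hand-written rather than captured from a real service.

```python
import json


class OcrServiceError(Exception):
    """Raised when the response body reports a non-zero errorCode."""

    def __init__(self, error_code, error_msg, log_id):
        super().__init__(f"[{error_code}] {error_msg} (logId={log_id})")
        self.error_code = error_code
        self.error_msg = error_msg
        self.log_id = log_id


def unwrap(body_text):
    """Parse a response body and return its result, or raise on failure."""
    body = json.loads(body_text)
    if body.get("errorCode") != 0:
        raise OcrServiceError(body.get("errorCode"), body.get("errorMsg"), body.get("logId"))
    return body["result"]


# Hand-written sample bodies, for illustration only.
ok_body = json.dumps(
    {"logId": "abc", "errorCode": 0, "errorMsg": "Success", "result": {"ocrResults": []}}
)
failed_body = json.dumps({"logId": "def", "errorCode": 422, "errorMsg": "Invalid input"})

result = unwrap(ok_body)
try:
    unwrap(failed_body)
    failure = None
except OcrServiceError as exc:
    failure = exc
```

Keeping logId on the exception makes it easier to correlate a client-side failure with the server logs.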

The main operations provided by the service are as follows:

infer

Obtain OCR results for an image.

POST /ocr

- The request body has the following attributes:

| Name | Type | Description | Required |

|---|---|---|---|

file |

string |

A server-accessible URL to an image or PDF file, or the Base64-encoded content of such a file. By default, for PDF files with more than 10 pages, only the first 10 pages are processed. To remove the page limit, add the following configuration to the pipeline config file: |

Yes |

fileType |

integer | null |

File type. 0 for PDF, 1 for image. If omitted, the type is inferred from the URL. |

No |

useDocOrientationClassify |

boolean | null |

Refer to the use_doc_orientation_classify parameter in the pipeline object's predict method. |

No |

useDocUnwarping |

boolean | null |

Refer to the use_doc_unwarping parameter in the pipeline object's predict method. |

No |

useTextlineOrientation |

boolean | null |

Refer to the use_textline_orientation parameter in the pipeline object's predict method. |

No |

textDetLimitSideLen |

integer | null |

Refer to the text_det_limit_side_len parameter in the pipeline object's predict method. |

No |

textDetLimitType |

string | null |

Refer to the text_det_limit_type parameter in the pipeline object's predict method. |

No |

textDetThresh |

number | null |

Refer to the text_det_thresh parameter in the pipeline object's predict method. |

No |

textDetBoxThresh |

number | null |

Refer to the text_det_box_thresh parameter in the pipeline object's predict method. |

No |

textDetUnclipRatio |

number | null |

Refer to the text_det_unclip_ratio parameter in the pipeline object's predict method. |

No |

textRecScoreThresh |

number | null |

Refer to the text_rec_score_thresh parameter in the pipeline object's predict method. |

No |

visualize |

boolean | null |

Whether to return the final visualization image and intermediate images during the processing.

The default behavior can be set in the pipeline config file, and it can be overridden by explicitly setting the visualize parameter in the request. If visualize is null or omitted in the request and is not defined in the configuration file, the images are returned by default.

|

No |

- When the request is successful, the result in the response body has the following attributes:

| Name | Type | Description |
|---|---|---|
| ocrResults | array | OCR results. The array length is 1 (for image input) or the number of processed document pages (for PDF input). For PDF input, each element represents the result for a corresponding page. |
| dataInfo | object | Input data information. |

Each element in ocrResults is an object with the following attributes:

| Name | Type | Description |

|---|---|---|

prunedResult |

object |

A simplified version of the res field in the JSON output of the pipeline object's predict method, excluding input_path and page_index. |

ocrImage |

string | null |

OCR result image with detected text regions highlighted. JPEG format, Base64-encoded. |

docPreprocessingImage |

string | null |

Visualization of preprocessing results. JPEG format, Base64-encoded. |

inputImage |

string | null |

Input image. JPEG format, Base64-encoded. |
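The request attributes listed above are the camelCase counterparts of the predict() parameters, so a request body can be assembled from snake_case options. The sketch below assumes exactly that naming correspondence, as shown in the request table. The helper names to_camel and build_payload are hypothetical, and options left as None are simply omitted from the body.

```python
import base64

# Optional predict()-style parameters that the request table above exposes.
ALLOWED_OPTIONS = {
    "use_doc_orientation_classify",
    "use_doc_unwarping",
    "use_textline_orientation",
    "text_det_limit_side_len",
    "text_det_limit_type",
    "text_det_thresh",
    "text_det_box_thresh",
    "text_det_unclip_ratio",
    "text_rec_score_thresh",
    "visualize",
}


def to_camel(name):
    """Convert snake_case to camelCase, e.g. text_det_thresh -> textDetThresh."""
    head, *rest = name.split("_")
    return head + "".join(part.capitalize() for part in rest)


def build_payload(file_bytes, file_type=None, **options):
    """Build the JSON-serializable request body for POST /ocr."""
    unknown = set(options) - ALLOWED_OPTIONS
    if unknown:
        raise ValueError(f"Unsupported options: {sorted(unknown)}")
    payload = {"file": base64.b64encode(file_bytes).decode("ascii")}
    if file_type is not None:
        payload["fileType"] = file_type  # 0 for PDF, 1 for image
    for name, value in options.items():
        if value is not None:  # omitted fields fall back to the service defaults
            payload[to_camel(name)] = value
    return payload


payload = build_payload(b"abc", file_type=1, text_det_thresh=0.3, use_doc_unwarping=None)
```

The resulting dictionary can be passed as the JSON body of a POST request, for example with the json= argument of requests.post.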

Multi-Language Service Call Examples

Python

import base64
import requests

API_URL = "http://localhost:8080/ocr"
file_path = "./demo.jpg"

with open(file_path, "rb") as file:
    file_bytes = file.read()
    file_data = base64.b64encode(file_bytes).decode("ascii")

payload = {"file": file_data, "fileType": 1}

response = requests.post(API_URL, json=payload)

assert response.status_code == 200
result = response.json()["result"]
for i, res in enumerate(result["ocrResults"]):
    print(res["prunedResult"])
    ocr_img_path = f"ocr_{i}.jpg"
    with open(ocr_img_path, "wb") as f:
        f.write(base64.b64decode(res["ocrImage"]))
    print(f"Output image saved at {ocr_img_path}")
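Since ocrImage is typed as string | null in the API reference, a client may want to skip entries without an image (for instance when image return is disabled). The following is a minimal sketch of that handling. The function name save_ocr_images is hypothetical, and the sample result is hand-written with placeholder bytes rather than a real JPEG.

```python
import base64
import tempfile
from pathlib import Path


def save_ocr_images(result, out_dir):
    """Write each non-null ocrImage to out_dir and return the saved paths."""
    out_dir = Path(out_dir)
    out_dir.mkdir(parents=True, exist_ok=True)
    saved = []
    for i, item in enumerate(result["ocrResults"]):
        encoded = item.get("ocrImage")
        if encoded is None:  # no image returned for this page
            continue
        path = out_dir / f"ocr_{i}.jpg"
        path.write_bytes(base64.b64decode(encoded))
        saved.append(path)
    return saved


# Hand-written response fragment, for illustration only.
sample_result = {
    "ocrResults": [
        {"prunedResult": {}, "ocrImage": base64.b64encode(b"fake-jpeg-bytes").decode("ascii")},
        {"prunedResult": {}, "ocrImage": None},
    ]
}

with tempfile.TemporaryDirectory() as tmp:
    saved = save_ocr_images(sample_result, tmp)
    saved_names = [p.name for p in saved]
    first_bytes = saved[0].read_bytes()
```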

C++

#include <iostream>
#include <fstream>
#include <vector>
#include <string>
#include "cpp-httplib/httplib.h" // https://github.com/Huiyicc/cpp-httplib
#include "nlohmann/json.hpp" // https://github.com/nlohmann/json
#include "base64.hpp" // https://github.com/tobiaslocker/base64

int main() {
    httplib::Client client("localhost", 8080);

    const std::string filePath = "./demo.jpg";

    std::ifstream file(filePath, std::ios::binary | std::ios::ate);
    if (!file) {
        std::cerr << "Error opening file." << std::endl;
        return 1;
    }

    std::streamsize size = file.tellg();
    file.seekg(0, std::ios::beg);
    std::vector<char> buffer(size);
    if (!file.read(buffer.data(), size)) {
        std::cerr << "Error reading file." << std::endl;
        return 1;
    }

    std::string bufferStr(buffer.data(), static_cast<size_t>(size));
    std::string encodedFile = base64::to_base64(bufferStr);

    nlohmann::json jsonObj;
    jsonObj["file"] = encodedFile;
    jsonObj["fileType"] = 1;

    auto response = client.Post("/ocr", jsonObj.dump(), "application/json");

    if (response && response->status == 200) {
        nlohmann::json jsonResponse = nlohmann::json::parse(response->body);
        auto result = jsonResponse["result"];

        if (!result.is_object() || !result["ocrResults"].is_array()) {
            std::cerr << "Unexpected response structure." << std::endl;
            return 1;
        }

        for (size_t i = 0; i < result["ocrResults"].size(); ++i) {
            auto ocrResult = result["ocrResults"][i];
            std::cout << ocrResult["prunedResult"] << std::endl;

            std::string ocrImgPath = "ocr_" + std::to_string(i) + ".jpg";
            std::string encodedImage = ocrResult["ocrImage"];
            std::string decodedImage = base64::from_base64(encodedImage);

            std::ofstream outputImage(ocrImgPath, std::ios::binary);
            if (outputImage.is_open()) {
                outputImage.write(decodedImage.c_str(), static_cast<std::streamsize>(decodedImage.size()));
                outputImage.close();
                std::cout << "Output image saved at " << ocrImgPath << std::endl;
            } else {
                std::cerr << "Unable to open file for writing: " << ocrImgPath << std::endl;
            }
        }
    } else {
        std::cerr << "Failed to send HTTP request." << std::endl;
        if (response) {
            std::cerr << "HTTP status code: " << response->status << std::endl;
            std::cerr << "Response body: " << response->body << std::endl;
        }
        return 1;
    }

    return 0;
}

Java

import okhttp3.*;
import com.fasterxml.jackson.databind.ObjectMapper;
import com.fasterxml.jackson.databind.JsonNode;
import com.fasterxml.jackson.databind.node.ObjectNode;

import java.io.File;
import java.io.FileOutputStream;
import java.io.IOException;
import java.util.Base64;

public class Main {
    public static void main(String[] args) throws IOException {
        String API_URL = "http://localhost:8080/ocr";
        String imagePath = "./demo.jpg";

        File file = new File(imagePath);
        byte[] fileContent = java.nio.file.Files.readAllBytes(file.toPath());
        String base64Image = Base64.getEncoder().encodeToString(fileContent);

        ObjectMapper objectMapper = new ObjectMapper();
        ObjectNode payload = objectMapper.createObjectNode();
        payload.put("file", base64Image);
        payload.put("fileType", 1);

        OkHttpClient client = new OkHttpClient();
        MediaType JSON = MediaType.get("application/json; charset=utf-8");
        RequestBody body = RequestBody.create(JSON, payload.toString());

        Request request = new Request.Builder()
                .url(API_URL)
                .post(body)
                .build();

        try (Response response = client.newCall(request).execute()) {
            if (response.isSuccessful()) {
                String responseBody = response.body().string();
                JsonNode root = objectMapper.readTree(responseBody);
                JsonNode result = root.get("result");

                JsonNode ocrResults = result.get("ocrResults");
                for (int i = 0; i < ocrResults.size(); i++) {
                    JsonNode item = ocrResults.get(i);

                    JsonNode prunedResult = item.get("prunedResult");
                    System.out.println("Pruned Result [" + i + "]: " + prunedResult.toString());

                    String ocrImageBase64 = item.get("ocrImage").asText();
                    byte[] ocrImageBytes = Base64.getDecoder().decode(ocrImageBase64);
                    String ocrImgPath = "ocr_result_" + i + ".jpg";
                    try (FileOutputStream fos = new FileOutputStream(ocrImgPath)) {
                        fos.write(ocrImageBytes);
                        System.out.println("Saved OCR image to: " + ocrImgPath);
                    }
                }
            } else {
                System.err.println("Request failed with HTTP code: " + response.code());
            }
        }
    }
}

Go

package main

import (
    "bytes"
    "encoding/base64"
    "encoding/json"
    "fmt"
    "io/ioutil"
    "net/http"
)

func main() {
    API_URL := "http://localhost:8080/ocr"
    filePath := "./demo.jpg"

    fileBytes, err := ioutil.ReadFile(filePath)
    if err != nil {
        fmt.Printf("Error reading file: %v\n", err)
        return
    }
    fileData := base64.StdEncoding.EncodeToString(fileBytes)

    payload := map[string]interface{}{
        "file":     fileData,
        "fileType": 1,
    }
    payloadBytes, err := json.Marshal(payload)
    if err != nil {
        fmt.Printf("Error marshaling payload: %v\n", err)
        return
    }

    client := &http.Client{}
    req, err := http.NewRequest("POST", API_URL, bytes.NewBuffer(payloadBytes))
    if err != nil {
        fmt.Printf("Error creating request: %v\n", err)
        return
    }
    req.Header.Set("Content-Type", "application/json")

    res, err := client.Do(req)
    if err != nil {
        fmt.Printf("Error sending request: %v\n", err)
        return
    }
    defer res.Body.Close()

    if res.StatusCode != http.StatusOK {
        fmt.Printf("Unexpected status code: %d\n", res.StatusCode)
        return
    }

    body, err := ioutil.ReadAll(res.Body)
    if err != nil {
        fmt.Printf("Error reading response body: %v\n", err)
        return
    }

    type OcrResult struct {
        PrunedResult map[string]interface{} `json:"prunedResult"`
        OcrImage     *string                `json:"ocrImage"`
    }

    type Response struct {
        Result struct {
            OcrResults []OcrResult `json:"ocrResults"`
            DataInfo   interface{} `json:"dataInfo"`
        } `json:"result"`
    }

    var respData Response
    if err := json.Unmarshal(body, &respData); err != nil {
        fmt.Printf("Error unmarshaling response: %v\n", err)
        return
    }

    for i, res := range respData.Result.OcrResults {
        if res.OcrImage != nil {
            imgBytes, err := base64.StdEncoding.DecodeString(*res.OcrImage)
            if err != nil {
                fmt.Printf("Error decoding image %d: %v\n", i, err)
                continue
            }

            filename := fmt.Sprintf("ocr_%d.jpg", i)
            if err := ioutil.WriteFile(filename, imgBytes, 0644); err != nil {
                fmt.Printf("Error saving image %s: %v\n", filename, err)
                continue
            }
            fmt.Printf("Output image saved at %s\n", filename)
        }
    }
}

C#

using System;
using System.IO;
using System.Net.Http;
using System.Text;
using System.Threading.Tasks;
using Newtonsoft.Json.Linq;

class Program
{
    static readonly string API_URL = "http://localhost:8080/ocr";
    static readonly string inputFilePath = "./demo.jpg";

    static async Task Main(string[] args)
    {
        var httpClient = new HttpClient();

        byte[] fileBytes = File.ReadAllBytes(inputFilePath);
        string fileData = Convert.ToBase64String(fileBytes);

        var payload = new JObject
        {
            { "file", fileData },
            { "fileType", 1 }
        };
        var content = new StringContent(payload.ToString(), Encoding.UTF8, "application/json");

        HttpResponseMessage response = await httpClient.PostAsync(API_URL, content);
        response.EnsureSuccessStatusCode();

        string responseBody = await response.Content.ReadAsStringAsync();
        JObject jsonResponse = JObject.Parse(responseBody);

        JArray ocrResults = (JArray)jsonResponse["result"]["ocrResults"];
        for (int i = 0; i < ocrResults.Count; i++)
        {
            var res = ocrResults[i];
            Console.WriteLine($"[{i}] prunedResult:\n{res["prunedResult"]}");

            string base64Image = res["ocrImage"]?.ToString();
            if (!string.IsNullOrEmpty(base64Image))
            {
                string outputPath = $"ocr_{i}.jpg";
                byte[] imageBytes = Convert.FromBase64String(base64Image);
                File.WriteAllBytes(outputPath, imageBytes);
                Console.WriteLine($"OCR image saved to {outputPath}");
            }
            else
            {
                Console.WriteLine($"OCR image at index {i} is null.");
            }
        }
    }
}

Node.js

const axios = require('axios');
const fs = require('fs');

const API_URL = 'http://localhost:8080/ocr';
const imagePath = './demo.jpg';
const fileType = 1;

function encodeImageToBase64(filePath) {
  const bitmap = fs.readFileSync(filePath);
  return Buffer.from(bitmap).toString('base64');
}

const payload = {
  file: encodeImageToBase64(imagePath),
  fileType: fileType
};

axios.post(API_URL, payload)
  .then(response => {
    const results = response.data.result.ocrResults;
    results.forEach((res, index) => {
      console.log(`\n[${index}] prunedResult:`);
      console.log(res.prunedResult);

      // ocrImage may be null, for example when image return is disabled
      if (res.ocrImage) {
        const imgPath = `ocr_${index}.jpg`;
        fs.writeFileSync(imgPath, Buffer.from(res.ocrImage, 'base64'));
        console.log(`Output image saved at ${imgPath}`);
      } else {
        console.log(`[${index}] No ocrImage.`);
      }
    });
  })
  .catch(error => {
    console.error('Error during API request:', error.message || error);
  });

PHP

<?php

$API_URL = "http://localhost:8080/ocr";
$image_path = "./demo.jpg";

$image_data = base64_encode(file_get_contents($image_path));
$payload = array(
    "file" => $image_data,
    "fileType" => 1
);

$ch = curl_init($API_URL);
curl_setopt($ch, CURLOPT_POST, true);
curl_setopt($ch, CURLOPT_POSTFIELDS, json_encode($payload));
curl_setopt($ch, CURLOPT_HTTPHEADER, array('Content-Type: application/json'));
curl_setopt($ch, CURLOPT_RETURNTRANSFER, true);
$response = curl_exec($ch);
curl_close($ch);

$result = json_decode($response, true)["result"]["ocrResults"];

foreach ($result as $i => $item) {
    echo "[$i] prunedResult:\n";
    print_r($item["prunedResult"]);

    if (!empty($item["ocrImage"])) {
        $output_img_path = "ocr_{$i}.jpg";
        file_put_contents($output_img_path, base64_decode($item["ocrImage"]));
        echo "OCR image saved at $output_img_path\n";
    } else {
        echo "No ocrImage found for item $i\n";
    }
}

?>

4. Custom Development¶

If the default model weights provided by the General OCR Pipeline do not meet your expectations in terms of accuracy or speed for your specific scenario, you can leverage your own domain-specific or application-specific data to further fine-tune the existing models, thereby improving the recognition performance of the General OCR Pipeline in your use case.

4.1 Model Fine-Tuning¶

The general OCR pipeline consists of multiple modules. If the pipeline's performance does not meet expectations, the issue may stem from any of these modules. You can analyze poorly recognized images to identify the problematic module and refer to the corresponding fine-tuning tutorials in the table below for adjustments.

| Scenario | Module to Fine-Tune | Fine-Tuning Reference |

|---|---|---|

| Inaccurate whole-image rotation correction | Document orientation classification module | Link |

| Inaccurate image distortion correction | Text image unwarping module | Fine-tuning not supported |

| Inaccurate textline rotation correction | Textline orientation classification module | Link |

| Text detection misses | Text detection module | Link |

| Incorrect text recognition | Text recognition module | Link |

4.2 Model Deployment¶

After you complete fine-tuning training using a private dataset, you can obtain a local model weight file. You can then use the fine-tuned model weights by specifying the local model save path through parameters or by customizing the pipeline configuration file.

4.2.1 Specify the local model path through parameters¶

When initializing the pipeline object, specify the local model path through parameters. Take the usage of the weights after fine-tuning the text detection model as an example, as follows:

Command line mode:

# Specify the local model path via --text_detection_model_dir

paddleocr ocr -i ./general_ocr_002.png --text_detection_model_dir your_det_model_path

# PP-OCRv5_server_det is the default text detection model. If you fine-tuned a different model, also specify its name via --text_detection_model_name

paddleocr ocr -i ./general_ocr_002.png --text_detection_model_name PP-OCRv5_mobile_det --text_detection_model_dir your_v5_mobile_det_model_path

Script mode:

from paddleocr import PaddleOCR

# Specify the local model path via text_detection_model_dir

pipeline = PaddleOCR(text_detection_model_dir="./your_det_model_path")

# PP-OCRv5_server_det is the default text detection model. If you fine-tuned a different model, also specify its name via text_detection_model_name

# pipeline = PaddleOCR(text_detection_model_name="PP-OCRv5_mobile_det", text_detection_model_dir="./your_v5_mobile_det_model_path")

4.2.2 Specify the local model path through the configuration file¶

1. Obtain the pipeline configuration file

Call the export_paddlex_config_to_yaml method of the General OCR Pipeline object in PaddleOCR to export the current pipeline configuration as a YAML file:

from paddleocr import PaddleOCR

pipeline = PaddleOCR()

pipeline.export_paddlex_config_to_yaml("PaddleOCR.yaml")

2. Modify the Configuration File

After obtaining the default pipeline configuration file, replace the paths of the default model weights with the local paths of your fine-tuned model weights. For example:

......
SubModules:
  TextDetection:
    box_thresh: 0.6
    limit_side_len: 64
    limit_type: min
    max_side_limit: 4000
    model_dir: null # Replace with the path to your fine-tuned text detection model weights
    model_name: PP-OCRv5_server_det # If the name of the fine-tuned model is different from the default model name, please modify it here as well
    module_name: text_detection
    thresh: 0.3
    unclip_ratio: 1.5
  TextLineOrientation:
    batch_size: 6
    model_dir: null # Replace with the path to your fine-tuned text line orientation model weights
    model_name: PP-LCNet_x1_0_textline_ori # If the name of the fine-tuned model is different from the default model name, please modify it here as well
    module_name: textline_orientation
  TextRecognition:
    batch_size: 6
    model_dir: null # Replace with the path to your fine-tuned text recognition model weights
    model_name: PP-OCRv5_server_rec # If the name of the fine-tuned model is different from the default model name, please modify it here as well
    module_name: text_recognition
    score_thresh: 0.0
......

The pipeline configuration file includes not only the parameters supported by the PaddleOCR CLI and Python API but also advanced configurations. For detailed instructions, refer to the PaddleX Pipeline Usage Overview and adjust the configurations as needed.

3. Load the Configuration File in CLI

After modifying the configuration file, specify its path using the --paddlex_config parameter in the command line. PaddleOCR will read the file and apply the configurations. Example:

4. Load the Configuration File in Python API

When initializing the pipeline object, pass the path of the PaddleX pipeline configuration file or a configuration dictionary via the paddlex_config parameter. PaddleOCR will read and apply the configurations. Example:
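Because paddlex_config is described as accepting a configuration dictionary as well as a file path, the model path substitution can also be done on a dictionary. The sketch below only shows the dictionary manipulation in plain Python. The excerpt is hand-copied from the configuration shown earlier and is not a complete configuration, the with_model_dir helper is a hypothetical name, and the model path is a placeholder.

```python
import copy


def with_model_dir(config, module, model_dir, model_name=None):
    """Return a copy of config with the given sub-module pointing at local weights."""
    updated = copy.deepcopy(config)  # leave the original configuration untouched
    sub_module = updated["SubModules"][module]
    sub_module["model_dir"] = model_dir
    if model_name is not None:
        sub_module["model_name"] = model_name
    return updated


# Hand-copied excerpt of an exported configuration, for illustration only.
base_config = {
    "SubModules": {
        "TextDetection": {
            "model_dir": None,
            "model_name": "PP-OCRv5_server_det",
            "module_name": "text_detection",
        },
        "TextRecognition": {
            "model_dir": None,
            "model_name": "PP-OCRv5_server_rec",
            "module_name": "text_recognition",
        },
    }
}

custom_config = with_model_dir(
    base_config,
    "TextDetection",
    "./your_v5_mobile_det_model_path",  # placeholder path
    model_name="PP-OCRv5_mobile_det",
)

# With PaddleOCR installed and a complete configuration, the result would be passed as:
# pipeline = PaddleOCR(paddlex_config=custom_config)
```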

5. Appendix¶

Supported Languages

lang |

Language Name |

|---|---|

abq | Abaza |

af | Afrikaans |

ang | Old English |

ar | Arabic |

ava | Avaric |

az | Azerbaijani |

be | Belarusian |

bg | Bulgarian |

bgc | Haryanvi |

bh | Bihari |

bho | Bhojpuri |

bs | Bosnian |

ch | Chinese (Simplified) |

che | Chechen |

chinese_cht | Chinese (Traditional) |

cs | Czech |

cy | Welsh |

da | Danish |

dar | Dargwa |

de or german | German |

en | English |

es | Spanish |

et | Estonian |

fa | Persian |

fr or french | French |

ga | Irish |

gom | Konkani |

hi | Hindi |

hr | Croatian |

hu | Hungarian |

id | Indonesian |

inh | Ingush |

is | Icelandic |

it | Italian |

japan | Japanese |

ka | Georgian |

kbd | Kabardian |

korean | Korean |

ku | Kurdish |

la | Latin |

lbe | Lak |

lez | Lezghian |

lt | Lithuanian |

lv | Latvian |

mah | Magahi |

mai | Maithili |

mi | Maori |

mn | Mongolian |

mr | Marathi |

ms | Malay |

mt | Maltese |

ne | Nepali |

new | Newari |

nl | Dutch |

no | Norwegian |

oc | Occitan |

pi | Pali |

pl | Polish |

pt | Portuguese |

ro | Romanian |

rs_cyrillic | Serbian (Cyrillic) |

rs_latin | Serbian (Latin) |

ru | Russian |

sa | Sanskrit |

sck | Sadri |

sk | Slovak |

sl | Slovenian |

sq | Albanian |

sv | Swedish |

sw | Swahili |

tab | Tabassaran |

ta | Tamil |

te | Telugu |

tl | Tagalog |

tr | Turkish |

ug | Uyghur |

uk | Ukrainian |

ur | Urdu |

uz | Uzbek |

vi | Vietnamese |

Correspondence Between OCR Model Versions and Supported Languages

| ocr_version | Supported lang |
|---|---|
| PP-OCRv5 | ch, en, fr, de, japan, korean, chinese_cht, af, it, es, bs, pt, cs, cy, da, et, ga, hr, hu, rs_latin, id, oc, is, lt, mi, ms, nl, no, pl, sk, sl, sq, sv, sw, tl, tr, uz, la, ru, be, uk |
| PP-OCRv4 | ch, en |
| PP-OCRv3 | abq, af, ady, ang, ar, ava, az, be, bg, bgc, bh, bho, bs, ch, che, chinese_cht, cs, cy, da, dar, de, german, en, es, et, fa, fr, french, ga, gom, hi, hr, hu, id, inh, is, it, japan, ka, kbd, korean, ku, la, lbe, lez, lt, lv, mah, mai, mi, mn, mr, ms, mt, ne, new, nl, no, oc, pi, pl, pt, ro, rs_cyrillic, rs_latin, ru, sa, sck, sk, sl, sq, sv, sw, ta, tab, te, tl, tr, ug, uk, ur, uz, vi |
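A correspondence table like the one above lends itself to a simple lookup before constructing the pipeline. The sketch below is not part of PaddleOCR: the helper names are hypothetical, the sets are copied by hand from the table, PP-OCRv3 is omitted for brevity, and Serbian (Latin) is spelled rs_latin as in the Supported Languages table.

```python
SUPPORTED_LANGS = {
    "PP-OCRv5": frozenset(
        "ch en fr de japan korean chinese_cht af it es bs pt cs cy da et ga hr hu "
        "rs_latin id oc is lt mi ms nl no pl sk sl sq sv sw tl tr uz la ru be uk".split()
    ),
    "PP-OCRv4": frozenset({"ch", "en"}),
    # PP-OCRv3 omitted here for brevity; see the table for its full list.
}


def is_supported(ocr_version, lang):
    """Return True if the table lists lang for ocr_version."""
    return lang in SUPPORTED_LANGS.get(ocr_version, frozenset())


def versions_for(lang):
    """List the (included) model versions that the table associates with lang."""
    return sorted(v for v, langs in SUPPORTED_LANGS.items() if lang in langs)


french_on_v4 = is_supported("PP-OCRv4", "fr")
french_on_v5 = is_supported("PP-OCRv5", "fr")
russian_versions = versions_for("ru")
```

Checking the combination up front gives a clearer error than discovering an unsupported ocr_version and lang pair at model loading time.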