PP-ChatOCRv4-doc Pipeline Usage Tutorial¶

1. Introduction to PP-ChatOCRv4-doc Pipeline¶

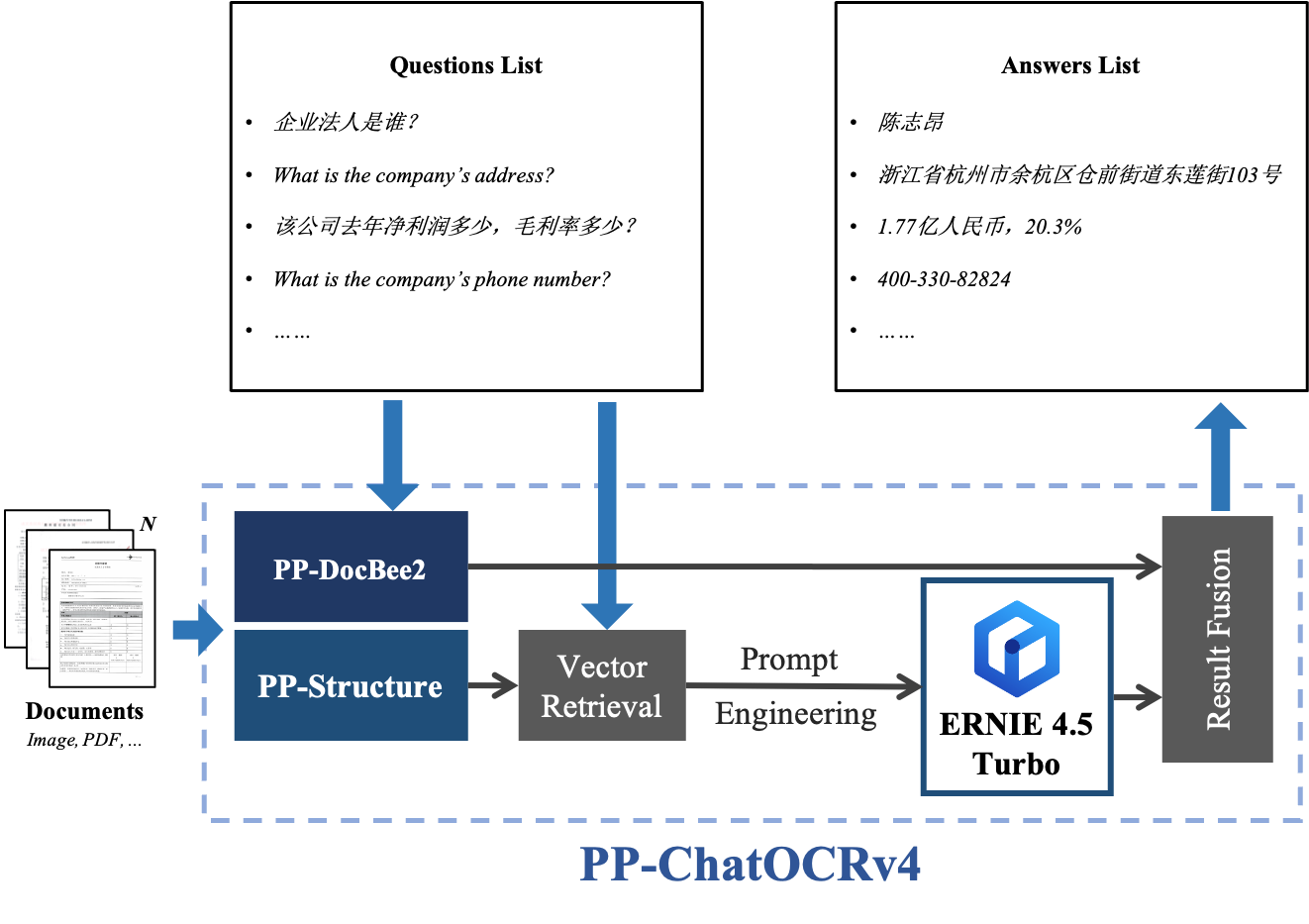

PP-ChatOCRv4-doc is a unique document and image intelligent analysis solution from PaddlePaddle, combining LLM, MLLM, and OCR technologies to address complex document information extraction challenges such as layout analysis, rare characters, multi-page PDFs, tables, and seal text recognition. Integrated with ERNIE Bot, it fuses massive data and knowledge, achieving high accuracy and wide applicability. This pipeline also provides flexible service deployment options, supporting deployment on various hardware. Furthermore, it offers custom development capabilities, allowing you to train and fine-tune models on your own datasets, with seamless integration of trained models.

The PP-ChatOCRv4 pipeline includes the following 9 modules. Each module can be trained and inferred independently and includes multiple models. For more details, please click on the respective module to view the documentation.

- Document Image Orientation Classification Module (Optional)

- Text Image Unwarping Module (Optional)

- Layout Detection Module

- Table Structure Recognition Module (Optional)

- Text Detection Module

- Text Recognition Module

- Text Line Orientation Classification Module(Optional)

- Formula Recognition Module (Optional)

- Seal Text Detection Module (Optional)

In this pipeline, you can choose the model to use based on the benchmark data below.

Document Image Orientation Classification Module (Optional):

| Model | Model Download Link | Top-1 Acc (%) | GPU Inference Time (ms) [Standard Mode / High-Performance Mode] |

CPU Inference Time (ms) [Standard Mode / High-Performance Mode] |

Model Size (MB) | Description |

|---|---|---|---|---|---|---|

| PP-LCNet_x1_0_doc_ori | Inference Model/Training Model | 99.06 | 2.62 / 0.59 | 3.24 / 1.19 | 7 | Document image classification model based on PP-LCNet_x1_0, with four categories: 0°, 90°, 180°, and 270°. |

Text Image Unwarp Module (Optional):

| Model | Model Download Link | CER | GPU Inference Time (ms) [Normal Mode / High-Performance Mode] |

CPU Inference Time (ms) [Normal Mode / High-Performance Mode] |

Model Storage Size (MB) | Description |

|---|---|---|---|---|---|---|

| UVDoc | Inference Model/Training Model | 0.179 | 19.05 / 19.05 | - / 869.82 | 30.3 | High-precision Text Image Unwarping model. |

Layout Detection Module Model:

* The layout detection model includes 20 common categories: document title, paragraph title, text, page number, abstract, table, references, footnotes, header, footer, algorithm, formula, formula number, image, table, seal, figure_table title, chart, and sidebar text and lists of references| Model | Model Download Link | mAP(0.5) (%) | GPU Inference Time (ms) [Normal Mode / High-Performance Mode] |

CPU Inference Time (ms) [Normal Mode / High-Performance Mode] |

Model Storage Size (MB) | Introduction |

|---|---|---|---|---|---|---|

| PP-DocLayout_plus-L | Inference Model/Training Model | 83.2 | 53.03 / 17.23 | 634.62 / 378.32 | 126.01 | A higher-precision layout area localization model trained on a self-built dataset containing Chinese and English papers, PPT, multi-layout magazines, contracts, books, exams, ancient books and research reports using RT-DETR-L |

| Model | Model Download Link | mAP(0.5) (%) | GPU Inference Time (ms) [Normal Mode / High-Performance Mode] |

CPU Inference Time (ms) [Normal Mode / High-Performance Mode] |

Model Storage Size (MB) | Introduction |

|---|---|---|---|---|---|---|

| PP-DocBlockLayout | Inference Model/Training Model | 95.9 | 34.60 / 28.54 | 506.43 / 256.83 | 123.92 | A layout block localization model trained on a self-built dataset containing Chinese and English papers, PPT, multi-layout magazines, contracts, books, exams, ancient books and research reports using RT-DETR-L |

| Model | Download Link | mAP(0.5) (%) | GPU Inference Time (ms) [Standard Mode / High Performance Mode] |

CPU Inference Time (ms) [Standard Mode / High Performance Mode] |

Model Storage Size (MB) | Description |

|---|---|---|---|---|---|---|

| PP-DocLayout-L | Inference Model/Pretrained Model | 90.4 | 33.59 / 33.59 | 503.01 / 251.08 | 123.76 | A high-precision layout area localization model trained on a self-built dataset containing Chinese and English papers, magazines, contracts, books, exams, and research reports using RT-DETR-L. |

| PP-DocLayout-M | Inference Model/Pretrained Model | 75.2 | 13.03 / 4.72 | 43.39 / 24.44 | 22.578 | A layout area localization model with balanced precision and efficiency, trained on a self-built dataset containing Chinese and English papers, magazines, contracts, books, exams, and research reports using PicoDet-L. |

| PP-DocLayout-S | Inference Model/Pretrained Model | 70.9 | 11.54 / 3.86 | 18.53 / 6.29 | 4.834 | A high-efficiency layout area localization model trained on a self-built dataset containing Chinese and English papers, magazines, contracts, books, exams, and research reports using PicoDet-S. |

👉 Details of Model List

* Table Layout Detection Model| Model | Model Download Link | mAP(0.5) (%) | GPU Inference Time (ms) [Normal Mode / High-Performance Mode] |

CPU Inference Time (ms) [Normal Mode / High-Performance Mode] |

Model Storage Size (MB) | Introduction |

|---|---|---|---|---|---|---|

| PicoDet_layout_1x_table | Inference Model/Training Model | 97.5 | 9.57 / 6.63 | 27.66 / 16.75 | 7.4 | A high-efficiency layout area localization model trained on a self-built dataset using PicoDet-1x, capable of detecting table regions. |

| Model | Model Download Link | mAP(0.5) (%) | GPU Inference Time (ms) [Normal Mode / High-Performance Mode] |

CPU Inference Time (ms) [Normal Mode / High-Performance Mode] |

Model Storage Size (MB) | Introduction |

|---|---|---|---|---|---|---|

| PicoDet-S_layout_3cls | Inference Model/Training Model | 88.2 | 8.43 / 3.44 | 17.60 / 6.51 | 4.8 | A high-efficiency layout area localization model trained on a self-built dataset of Chinese and English papers, magazines, and research reports using PicoDet-S. |

| PicoDet-L_layout_3cls | Inference Model/Training Model | 89.0 | 12.80 / 9.57 | 45.04 / 23.86 | 22.6 | A balanced efficiency and precision layout area localization model trained on a self-built dataset of Chinese and English papers, magazines, and research reports using PicoDet-L. |

Table Classification Module Models:

| RT-DETR-H_layout_3cls | Inference Model/Training Model | 95.8 | 114.80 / 25.65 | 924.38 / 924.38 | 470.1 | A high-precision layout area localization model trained on a self-built dataset of Chinese and English papers, magazines, and research reports using RT-DETR-H. |

| Model | Model Download Link | mAP(0.5) (%) | GPU Inference Time (ms) [Normal Mode / High-Performance Mode] |

CPU Inference Time (ms) [Normal Mode / High-Performance Mode] |

Model Storage Size (MB) | Introduction |

|---|---|---|---|---|---|---|

| PicoDet_layout_1x | Inference Model/Training Model | 97.8 | 9.62 / 6.75 | 26.96 / 12.77 | 7.4 | A high-efficiency English document layout area localization model trained on the PubLayNet dataset using PicoDet-1x. |

| Model | Model Download Link | mAP(0.5) (%) | GPU Inference Time (ms) [Normal Mode / High-Performance Mode] |

CPU Inference Time (ms) [Normal Mode / High-Performance Mode] |

Model Storage Size (MB) | Introduction |

|---|---|---|---|---|---|---|

| PicoDet-S_layout_17cls | Inference Model/Training Model | 87.4 | 8.80 / 3.62 | 17.51 / 6.35 | 4.8 | A high-efficiency layout area localization model trained on a self-built dataset of Chinese and English papers, magazines, and research reports using PicoDet-S. |

| PicoDet-L_layout_17cls | Inference Model/Training Model | 89.0 | 12.60 / 10.27 | 43.70 / 24.42 | 22.6 | A balanced efficiency and precision layout area localization model trained on a self-built dataset of Chinese and English papers, magazines, and research reports using PicoDet-L. |

| RT-DETR-H_layout_17cls | Inference Model/Training Model | 98.3 | 115.29 / 101.18 | 964.75 / 964.75 | 470.2 | A high-precision layout area localization model trained on a self-built dataset of Chinese and English papers, magazines, and research reports using RT-DETR-H. |

Table Structure Recognition Module Models (Optional):

| Model | Model Download Link | Accuracy (%) | GPU Inference Time (ms) [Normal Mode / High-Performance Mode] |

CPU Inference Time (ms) [Normal Mode / High-Performance Mode] |

Model Storage Size (MB) | Description |

|---|---|---|---|---|---|---|

| SLANet | Inference Model/Training Model | 59.52 | 23.96 / 21.75 | - / 43.12 | 6.9 | SLANet is a table structure recognition model developed by Baidu PaddleX Team. The model significantly improves the accuracy and inference speed of table structure recognition by adopting a CPU-friendly lightweight backbone network PP-LCNet, a high-low-level feature fusion module CSP-PAN, and a feature decoding module SLA Head that aligns structural and positional information. |

| SLANet_plus | Inference Model/Training Model | 63.69 | 23.43 / 22.16 | - / 41.80 | 6.9 | SLANet_plus is an enhanced version of SLANet, the table structure recognition model developed by Baidu PaddleX Team. Compared to SLANet, SLANet_plus significantly improves the recognition ability for wireless and complex tables and reduces the model's sensitivity to the accuracy of table positioning, enabling more accurate recognition even with offset table positioning. |

Text Detection Module Models

| Model | Model Download Link | Detection Hmean (%) | GPU Inference Time (ms) [Normal Mode / High-Performance Mode] |

CPU Inference Time (ms) [Normal Mode / High-Performance Mode] |

Model Storage Size (MB) | Description |

|---|---|---|---|---|---|---|

| PP-OCRv5_server_det | Inference Model/Training Model | 83.8 | 89.55 / 70.19 | 383.15 / 383.15 | 84.3 | PP-OCRv5 server-side text detection model with higher accuracy, suitable for deployment on high-performance servers |

| PP-OCRv5_mobile_det | Inference Model/Training Model | 79.0 | 10.67 / 6.36 | 57.77 / 28.15 | 4.7 | PP-OCRv5 mobile-side text detection model with higher efficiency, suitable for deployment on edge devices |

| PP-OCRv4_server_det | Inference Model/Training Model | 69.2 | 127.82 / 98.87 | 585.95 / 489.77 | 109 | PP-OCRv4 server-side text detection model with higher accuracy, suitable for deployment on high-performance servers |

| PP-OCRv4_mobile_det | Inference Model/Training Model | 63.8 | 9.87 / 4.17 | 56.60 / 20.79 | 4.7 | PP-OCRv4 mobile-side text detection model with higher efficiency, suitable for deployment on edge devices |

Text Recognition Module Models

| Model | Model Download Links | Recognition Avg Accuracy(%) | GPU Inference Time (ms) [Normal Mode / High-Performance Mode] |

CPU Inference Time (ms) [Normal Mode / High-Performance Mode] |

Model Storage Size (MB) | Introduction |

|---|---|---|---|---|---|---|

| PP-OCRv5_server_rec | Inference Model/Pretrained Model | 86.38 | 8.46 / 2.36 | 31.21 / 31.21 | 81 | PP-OCRv5_rec is a next-generation text recognition model. It aims to efficiently and accurately support the recognition of four major languages—Simplified Chinese, Traditional Chinese, English, and Japanese—as well as complex text scenarios such as handwriting, vertical text, pinyin, and rare characters using a single model. While maintaining recognition performance, it balances inference speed and model robustness, providing efficient and accurate technical support for document understanding in various scenarios. |

| PP-OCRv5_mobile_rec | Inference Model/Pretrained Model | 81.29 | 5.43 / 1.46 | 21.20 / 5.32 | 16 | |

| PP-OCRv4_server_rec_doc | Inference Model/Pretrained Model | 86.58 | 8.69 / 2.78 | 37.93 / 37.93 | 182 | PP-OCRv4_server_rec_doc is trained on a mixed dataset of more Chinese document data and PP-OCR training data, building upon PP-OCRv4_server_rec. It enhances the recognition capabilities for some Traditional Chinese characters, Japanese characters, and special symbols, supporting over 15,000 characters. In addition to improving document-related text recognition, it also enhances general text recognition capabilities. |

| PP-OCRv4_mobile_rec | Inference Model/Pretrained Model | 78.74 | 5.26 / 1.12 | 17.48 / 3.61 | 10.5 | A lightweight recognition model of PP-OCRv4 with high inference efficiency, suitable for deployment on various hardware devices, including edge devices. |

| PP-OCRv4_server_rec | Inference Model/Pretrained Model | 85.19 | 8.75 / 2.49 | 36.93 / 36.93 | 173 | The server-side model of PP-OCRv4, offering high inference accuracy and deployable on various servers. |

| en_PP-OCRv4_mobile_rec | Inference Model/Pretrained Model | 70.39 | 4.81 / 1.23 | 17.20 / 4.18 | 7.5 | An ultra-lightweight English recognition model trained based on the PP-OCRv4 recognition model, supporting English and numeric character recognition. |

| Model | Download Link | Chinese Avg Accuracy (%) | English Avg Accuracy (%) | Traditional Chinese Avg Accuracy (%) | Japanese Avg Accuracy (%) | GPU Inference Time (ms) [Standard Mode / High Performance Mode] |

CPU Inference Time (ms) [Standard Mode / High Performance Mode] |

Model Storage Size (MB) | Description |

|---|---|---|---|---|---|---|---|---|---|

| PP-OCRv5_server_rec | Inference Model/Pretrained Model | 86.38 | 64.70 | 93.29 | 60.35 | 8.46 / 2.36 | 31.21 / 31.21 | 81 | PP-OCRv5_server_rec is a new-generation text recognition model. It efficiently and accurately supports four major languages: Simplified Chinese, Traditional Chinese, English, and Japanese, as well as handwriting, vertical text, pinyin, and rare characters, offering robust and efficient support for document understanding. |

| PP-OCRv5_mobile_rec | Inference Model/Pretrained Model | 81.29 | 66.00 | 83.55 | 54.65 | 5.43 / 1.46 | 21.20 / 5.32 | 136 | PP-OCRv5_mobile_rec is a new-generation text recognition model. It efficiently and accurately supports four major languages: Simplified Chinese, Traditional Chinese, English, and Japanese, as well as handwriting, vertical text, pinyin, and rare characters, offering robust and efficient support for document understanding. |

| Model | Download Link | Avg Accuracy (%) | GPU Inference Time (ms) [Standard Mode / High Performance Mode] |

CPU Inference Time (ms) [Standard Mode / High Performance Mode] |

Model Storage Size (MB) | Description |

|---|---|---|---|---|---|---|

| PP-OCRv4_server_rec_doc | Inference Model/Pretrained Model | 86.58 | 8.69 / 2.78 | 37.93 / 37.93 | 182 | Based on PP-OCRv4_server_rec, trained on additional Chinese documents and PP-OCR mixed data. It supports over 15,000 characters including Traditional Chinese, Japanese, and special symbols, enhancing both document-specific and general text recognition accuracy. |

| PP-OCRv4_mobile_rec | Inference Model/Pretrained Model | 78.74 | 5.26 / 1.12 | 17.48 / 3.61 | 10.5 | Lightweight model of PP-OCRv4 with high inference efficiency, suitable for deployment on various edge devices. |

| PP-OCRv4_server_rec | Inference Model/Pretrained Model | 85.19 | 8.75 / 2.49 | 36.93 / 36.93 | 173 | Server-side model of PP-OCRv4 with high recognition accuracy, suitable for deployment on various servers. |

| PP-OCRv3_mobile_rec | Inference Model/Pretrained Model | 72.96 | 3.89 / 1.16 | 8.72 / 3.56 | 10.3 | Lightweight model of PP-OCRv3 with high inference efficiency, suitable for deployment on various edge devices. |

| Model | Model Download Link | Recognition Avg Accuracy (%) | GPU Inference Time (ms) [Normal Mode / High-Performance Mode] |

CPU Inference Time (ms) [Normal Mode / High-Performance Mode] |

Model Storage Size (MB) | Description |

|---|---|---|---|---|---|---|

| ch_SVTRv2_rec | Inference Model/Training Model | 68.81 | 10.38 / 8.31 | 66.52 / 30.83 | 80.5 | SVTRv2 is a server-side text recognition model developed by the OpenOCR team at the Vision and Learning Lab (FVL) of Fudan University. It won the first prize in the OCR End-to-End Recognition Task of the PaddleOCR Algorithm Model Challenge, with a 6% improvement in end-to-end recognition accuracy compared to PP-OCRv4 on the A-list. |

| Model | Model Download Link | Recognition Avg Accuracy (%) | GPU Inference Time (ms) [Normal Mode / High-Performance Mode] |

CPU Inference Time (ms) [Normal Mode / High-Performance Mode] |

Model Storage Size (MB) | Description |

|---|---|---|---|---|---|---|

| ch_RepSVTR_rec | Inference Model/Training Model | 65.07 | 6.29 / 1.57 | 20.64 / 5.40 | 48.8 | The RepSVTR text recognition model is a mobile-oriented text recognition model based on SVTRv2. It won the first prize in the OCR End-to-End Recognition Task of the PaddleOCR Algorithm Model Challenge, with a 2.5% improvement in end-to-end recognition accuracy compared to PP-OCRv4 on the B-list, while maintaining similar inference speed. |

| Model | Download Link | Avg Accuracy (%) | GPU Inference Time (ms) [Standard Mode / High Performance Mode] |

CPU Inference Time (ms) [Standard Mode / High Performance Mode] |

Model Storage Size (MB) | Description |

|---|---|---|---|---|---|---|

| en_PP-OCRv4_mobile_rec | Inference Model/Pretrained Model | 70.39 | 4.81 / 1.23 | 17.20 / 4.18 | 7.5 | Ultra-lightweight English recognition model trained on PP-OCRv4, supporting English and number recognition. |

| en_PP-OCRv3_mobile_rec | Inference Model/Pretrained Model | 70.69 | 3.56 / 0.78 | 8.44 / 5.78 | 17.3 | Ultra-lightweight English recognition model trained on PP-OCRv3, supporting English and number recognition. |

| Model | Model Download Link | Recognition Avg Accuracy(%) | GPU Inference Time (ms) [Normal / High Performance] |

CPU Inference Time (ms) [Normal / High Performance] |

Model Storage Size (MB) | Description |

|---|---|---|---|---|---|---|

| korean_PP-OCRv3_mobile_rec | Inference Model/Pretrained Model | 60.21 | 3.73 / 0.98 | 8.76 / 2.91 | 9.6 | An ultra-lightweight Korean text recognition model trained based on PP-OCRv3, supporting Korean and digits recognition |

| japan_PP-OCRv3_mobile_rec | Inference Model/Pretrained Model | 45.69 | 3.86 / 1.01 | 8.62 / 2.92 | 9.8 | An ultra-lightweight Japanese text recognition model trained based on PP-OCRv3, supporting Japanese and digits recognition |

| chinese_cht_PP-OCRv3_mobile_rec | Inference Model/Pretrained Model | 82.06 | 3.90 / 1.16 | 9.24 / 3.18 | 10.8 | An ultra-lightweight Traditional Chinese text recognition model trained based on PP-OCRv3, supporting Traditional Chinese and digits recognition |

| te_PP-OCRv3_mobile_rec | Inference Model/Pretrained Model | 95.88 | 3.59 / 0.81 | 8.28 / 6.21 | 8.7 | An ultra-lightweight Telugu text recognition model trained based on PP-OCRv3, supporting Telugu and digits recognition |

| ka_PP-OCRv3_mobile_rec | Inference Model/Pretrained Model | 96.96 | 3.49 / 0.89 | 8.63 / 2.77 | 17.4 | An ultra-lightweight Kannada text recognition model trained based on PP-OCRv3, supporting Kannada and digits recognition |

| ta_PP-OCRv3_mobile_rec | Inference Model/Pretrained Model | 76.83 | 3.49 / 0.86 | 8.35 / 3.41 | 8.7 | An ultra-lightweight Tamil text recognition model trained based on PP-OCRv3, supporting Tamil and digits recognition |

| latin_PP-OCRv3_mobile_rec | Inference Model/Pretrained Model | 76.93 | 3.53 / 0.78 | 8.50 / 6.83 | 8.7 | An ultra-lightweight Latin text recognition model trained based on PP-OCRv3, supporting Latin and digits recognition |

| arabic_PP-OCRv3_mobile_rec | Inference Model/Pretrained Model | 73.55 | 3.60 / 0.83 | 8.44 / 4.69 | 17.3 | An ultra-lightweight Arabic script recognition model trained based on PP-OCRv3, supporting Arabic script and digits recognition |

| cyrillic_PP-OCRv3_mobile_rec | Inference Model/Pretrained Model | 94.28 | 3.56 / 0.79 | 8.22 / 2.76 | 8.7 | An ultra-lightweight Cyrillic script recognition model trained based on PP-OCRv3, supporting Cyrillic script and digits recognition |

| devanagari_PP-OCRv3_mobile_rec | Inference Model/Pretrained Model | 96.44 | 3.60 / 0.78 | 6.95 / 2.87 | 8.7 | An ultra-lightweight Devanagari script recognition model trained based on PP-OCRv3, supporting Devanagari script and digits recognition |

Text Line Orientation Classification Module (Optional):

| Model | Model Download Link | Top-1 Accuracy (%) | GPU Inference Time (ms) [Normal Mode / High-Performance Mode] |

CPU Inference Time (ms) | Model Storage Size (MB) | Description |

|---|---|---|---|---|---|---|

| PP-LCNet_x0_25_textline_ori | Inference Model/Training Model | 98.85 | 2.16 / 0.41 | 2.37 / 0.73 | 0.96 | Text line classification model based on PP-LCNet_x0_25, with two classes: 0 degrees and 180 degrees |

Formula Recognition Module Models (Optional):

| Model | Model Download Link | En-BLEU(%) | Zh-BLEU(%) | GPU Inference Time (ms) [Normal Mode / High-Performance Mode] |

CPU Inference Time (ms) [Normal Mode / High-Performance Mode] |

Model Storage Size (MB) | Introduction |

|---|---|---|---|---|---|---|---|

| UniMERNet | Inference Model/Training Model | 85.91 | 43.50 | 1311.84 / 1311.84 | - / 8288.07 | 1530 | UniMERNet is a formula recognition model developed by Shanghai AI Lab. It uses Donut Swin as the encoder and MBartDecoder as the decoder. The model is trained on a dataset of one million samples, including simple formulas, complex formulas, scanned formulas, and handwritten formulas, significantly improving the recognition accuracy of real-world formulas. | PP-FormulaNet-S | Inference Model/Training Model | 87.00 | 45.71 | 182.25 / 182.25 | - / 254.39 | 224 | PP-FormulaNet is an advanced formula recognition model developed by the Baidu PaddlePaddle Vision Team. The PP-FormulaNet-S version uses PP-HGNetV2-B4 as its backbone network. Through parallel masking and model distillation techniques, it significantly improves inference speed while maintaining high recognition accuracy, making it suitable for applications requiring fast inference. The PP-FormulaNet-L version, on the other hand, uses Vary_VIT_B as its backbone network and is trained on a large-scale formula dataset, showing significant improvements in recognizing complex formulas compared to PP-FormulaNet-S. | PP-FormulaNet-L | Inference Model/Training Model | 90.36 | 45.78 | 1482.03 / 1482.03 | - / 3131.54 | 695 | PP-FormulaNet_plus-S | Inference Model/Training Model | 88.71 | 53.32 | 179.20 / 179.20 | - / 260.99 | 248 | PP-FormulaNet_plus is an enhanced version of the formula recognition model developed by the Baidu PaddlePaddle Vision Team, building upon the original PP-FormulaNet. Compared to the original version, PP-FormulaNet_plus utilizes a more diverse formula dataset during training, including sources such as Chinese dissertations, professional books, textbooks, exam papers, and mathematics journals. This expansion significantly improves the model’s recognition capabilities. Among the models, PP-FormulaNet_plus-M and PP-FormulaNet_plus-L have added support for Chinese formulas and increased the maximum number of predicted tokens for formulas from 1,024 to 2,560, greatly enhancing the recognition performance for complex formulas. Meanwhile, the PP-FormulaNet_plus-S model focuses on improving the recognition of English formulas. With these improvements, the PP-FormulaNet_plus series models perform exceptionally well in handling complex and diverse formula recognition tasks. |

| PP-FormulaNet_plus-M | Inference Model/Training Model | 91.45 | 89.76 | 1040.27 / 1040.27 | - / 1615.80 | 592 | |

| PP-FormulaNet_plus-L | Inference Model/Training Model | 92.22 | 90.64 | 1476.07 / 1476.07 | - / 3125.58 | 698 | |

| LaTeX_OCR_rec | Inference Model/Training Model | 74.55 | 39.96 | 1088.89 / 1088.89 | - / - | 99 | LaTeX-OCR is a formula recognition algorithm based on an autoregressive large model. It uses Hybrid ViT as the backbone network and a transformer as the decoder, significantly improving the accuracy of formula recognition. |

Seal Text Detection Module Models (Optional):

| Model | Model Download Link | Detection Hmean (%) | GPU Inference Time (ms) [Normal Mode / High-Performance Mode] |

CPU Inference Time (ms) [Normal Mode / High-Performance Mode] |

Model Storage Size (MB) | Description |

|---|---|---|---|---|---|---|

| PP-OCRv4_server_seal_det | Inference Model/Training Model | 98.40 | 124.64 / 91.57 | 545.68 / 439.86 | 109 | PP-OCRv4's server-side seal text detection model, featuring higher accuracy, suitable for deployment on better-equipped servers |

| PP-OCRv4_mobile_seal_det | Inference Model/Training Model | 96.36 | 9.70 / 3.56 | 50.38 / 19.64 | 4.7 | PP-OCRv4's mobile seal text detection model, offering higher efficiency, suitable for deployment on edge devices |

Test Environment Description:

- Performance Test Environment

- Test Dataset:

- Text Image Rectification Model: DocUNet

- Layout Region Detection Model: A self-built layout analysis dataset using PaddleOCR, containing 10,000 images of common document types such as Chinese and English papers, magazines, and research reports.

- Table Structure Recognition Model: A self-built English table recognition dataset using PaddleX.

- Text Detection Model: A self-built Chinese dataset using PaddleOCR, covering multiple scenarios such as street scenes, web images, documents, and handwriting, with 500 images for detection.

- Chinese Recognition Model: A self-built Chinese dataset using PaddleOCR, covering multiple scenarios such as street scenes, web images, documents, and handwriting, with 11,000 images for text recognition.

- ch_SVTRv2_rec: Evaluation set A for "OCR End-to-End Recognition Task" in the PaddleOCR Algorithm Model Challenge

- ch_RepSVTR_rec: Evaluation set B for "OCR End-to-End Recognition Task" in the PaddleOCR Algorithm Model Challenge

- English Recognition Model: A self-built English dataset using PaddleX.

- Multilingual Recognition Model: A self-built multilingual dataset using PaddleX.

- Text Line Orientation Classification Model: A self-built dataset using PaddleOCR, covering various scenarios such as ID cards and documents, containing 1000 images.

- Seal Text Detection Model: A self-built dataset using PaddleOCR, containing 500 images of circular seal textures.

- Hardware Configuration:

- GPU: NVIDIA Tesla T4

- CPU: Intel Xeon Gold 6271C @ 2.60GHz

- Software Environment:

- Ubuntu 20.04 / CUDA 11.8 / cuDNN 8.9 / TensorRT 8.6.1.6

- paddlepaddle 3.0.0 / paddleocr 3.0.3

- Test Dataset:

- Inference Mode Description

| Mode | GPU Configuration | CPU Configuration | Acceleration Technology Combination |

|---|---|---|---|

| Normal Mode | FP32 Precision / No TRT Acceleration | FP32 Precision / 8 Threads | PaddleInference |

| High-Performance Mode | Optimal combination of pre-selected precision types and acceleration strategies | FP32 Precision / 8 Threads | Pre-selected optimal backend (Paddle/OpenVINO/TRT, etc.) |

If you prioritize model accuracy, choose a model with higher accuracy. If you prioritize inference speed, select a model with faster inference. If you prioritize model storage size, choose a model with a smaller storage size.

2. Quick Start¶

The pre-trained pipelines provided by PaddleOCR allow for quick experience of their effects. You can locally use Python to experience the effects of the PP-ChatOCRv4-doc pipeline.

Please note: If you encounter issues such as the program becoming unresponsive, unexpected program termination, running out of memory resources, or extremely slow inference during execution, please try adjusting the configuration according to the documentation, such as disabling unnecessary features or using lighter-weight models.

Before using the PP-ChatOCRv4-doc pipeline locally, ensure you have completed the installation of the PaddleOCR wheel package according to the PaddleOCR Local Installation Tutorial. If you wish to selectively install dependencies, please refer to the relevant instructions in the installation guide. The dependency group corresponding to this pipeline is ie.

Before performing model inference, you first need to prepare the API key for the large language model. PP-ChatOCRv4 supports large model services on the Baidu Cloud Qianfan Platform or the locally deployed standard OpenAI interface. If using the Baidu Cloud Qianfan Platform, refer to Authentication and Authorization to obtain the API key. If using a locally deployed large model service, refer to the PaddleNLP Large Model Deployment Documentation for deployment of the dialogue interface and vectorization interface for large models, and fill in the corresponding base_url and api_key. If you need to use a multimodal large model for data fusion, refer to the OpenAI service deployment in the PaddleMIX Model Documentation for multimodal large model deployment, and fill in the corresponding base_url and api_key.

Note: If local deployment of a multimodal large model is restricted due to the local environment, you can comment out the lines containing the mllm variable in the code and only use the large language model for information extraction.

2.1 Command Line Experience¶

After updating the configuration file, you can complete quick inference using just a few lines of Python code. You can use the test file for testing:

paddleocr pp_chatocrv4_doc -i vehicle_certificate-1.png -k 驾驶室准乘人数 --qianfan_api_key your_api_key

# 通过 --invoke_mllm 和 --pp_docbee_base_url 使用多模态大模型

paddleocr pp_chatocrv4_doc -i vehicle_certificate-1.png -k 驾驶室准乘人数 --qianfan_api_key your_api_key --invoke_mllm True --pp_docbee_base_url http://127.0.0.1:8080/

The command line supports more parameter configurations. Click to expand for a detailed explanation of the command line parameters.

| Parameter | Description | Type | Default |

|---|---|---|---|

input |

Data to be predicted, required. Such as the local path of an image file or PDF file: /root/data/img.jpg; URL link, such as the network URL of an image file or PDF file: Example; Local directory, which should contain images to be predicted, such as the local path: /root/data/ (currently does not support prediction of PDF files in directories, PDF files need to be specified to the specific file path).

|

str |

|

keys |

Keys for information extraction. | str |

|

save_path |

Specify the path to save the inference results file. If not set, the inference results will not be saved locally. | str |

|

invoke_mllm |

Whether to load and use a multimodal large model. If not set, the default is False. |

bool |

|

layout_detection_model_name |

The name of the layout detection model. If not set, the default model in pipeline will be used. | str |

|

layout_detection_model_dir |

The directory path of the layout detection model. If not set, the official model will be downloaded. | str |

|

doc_orientation_classify_model_name |

The name of the document orientation classification model. If not set, the default model in pipeline will be used. | str |

|

doc_orientation_classify_model_dir |

The directory path of the document orientation classification model. If not set, the official model will be downloaded. | str |

|

doc_unwarping_model_name |

The name of the text image unwarping model. If not set, the default model in pipeline will be used. | str |

|

doc_unwarping_model_dir |

The directory path of the text image unwarping model. If not set, the official model will be downloaded. | str |

|

text_detection_model_name |

Name of the text detection model. If not set, the pipeline's default model will be used. | str |

|

text_detection_model_dir |

Directory path of the text detection model. If not set, the official model will be downloaded. | str |

|

text_recognition_model_name |

Name of the text recognition model. If not set, the pipeline's default model will be used. | str |

|

text_recognition_model_dir |

Directory path of the text recognition model. If not set, the official model will be downloaded. | str |

|

text_recognition_batch_size |

Batch size for the text recognition model. If not set, the default batch size will be 1. |

int |

|

table_structure_recognition_model_name |

Name of the table structure recognition model. If not set, the official model will be downloaded. | str |

|

table_structure_recognition_model_dir |

Directory path of the table structure recognition model. If not set, the official model will be downloaded. | str |

|

seal_text_detection_model_name |

The name of the seal text detection model. If not set, the pipeline's default model will be used. | str |

|

seal_text_detection_model_dir |

The directory path of the seal text detection model. If not set, the official model will be downloaded. | str |

|

seal_text_recognition_model_name |

The name of the seal text recognition model. If not set, the default model of the pipeline will be used. | str |

|

seal_text_recognition_model_dir |

The directory path of the seal text recognition model. If not set, the official model will be downloaded. | str |

|

seal_text_recognition_batch_size |

The batch size for the seal text recognition model. If not set, the batch size will default to 1. |

int |

|

use_doc_orientation_classify |

Whether to load and use the document orientation classification module. If not set, the parameter value initialized by the pipeline will be used, which defaults to True. |

bool |

|

use_doc_unwarping |

Whether to load and use the text image unwarping module. If not set, the parameter value initialized by the pipeline will be used, which defaults to True. |

bool |

|

use_textline_orientation |

Whether to load and use the text line orientation classification module. If not set, the parameter value initialized by the pipeline will be used, which defaults to True. |

bool |

|

use_seal_recognition |

Whether to load and use the seal text recognition sub-pipeline. If not set, the parameter's value initialized during pipeline setup will be used, defaulting to True. |

bool |

|

use_table_recognition |

Whether to load and use the table recognition sub-pipeline. If not set, the parameter's value initialized during pipeline setup will be used, defaulting to True. |

bool |

|

layout_threshold |

Score threshold for the layout model. Any value between 0-1. If not set, the default value is used, which is 0.5.

|

float |

|

layout_nms |

Whether to use Non-Maximum Suppression (NMS) as post-processing for layout detection. If not set, the parameter will be set to the value initialized in the pipeline, which defaults to True by default.

|

bool |

|

layout_unclip_ratio |

Unclip ratio for detected boxes in layout detection model. Any float > 0. If not set, the default is 1.0.

|

float |

|

layout_merge_bboxes_mode |

The merging mode for the detection boxes output by the model in layout region detection.

large.

|

str |

|

text_det_limit_side_len |

Image side length limitation for text detection.

Any integer greater than 0. If not set, the pipeline's initialized value for this parameter (initialized to 960) will be used.

|

int |

|

text_det_limit_type |

Type of side length limit for text detection.

Supports min and max. min means ensuring the shortest side of the image is not smaller than det_limit_side_len, and max means ensuring the longest side of the image is not larger than limit_side_len. If not set, the pipeline's initialized value for this parameter (initialized to max) will be used.

|

str |

|

text_det_thresh |

Pixel threshold for text detection. In the output probability map, pixels with scores higher than this threshold will be considered text pixels.

Any floating-point number greater than 0

. If not set, the pipeline's initialized value for this parameter (0.3) will be used.

|

float |

|

text_det_box_thresh |

Text detection box threshold. If the average score of all pixels within the detected result boundary is higher than this threshold, the result will be considered a text region.

Any floating-point number greater than 0. If not set, the pipeline's initialized value for this parameter (0.6) will be used.

|

float |

|

text_det_unclip_ratio |

Text detection expansion coefficient. This method is used to expand the text region—the larger the value, the larger the expanded area.

Any floating-point number greater than 0

. If not set, the pipeline's initialized value for this parameter (2.0) will be used.

|

float |

|

text_rec_score_thresh |

Text recognition threshold. Text results with scores higher than this threshold will be retained.

Any floating-point number greater than 0

. If not set, the pipeline's initialized value for this parameter (0.0, i.e., no threshold) will be used.

|

float |

|

seal_det_limit_side_len |

Image side length limit for seal text detection.

Any integer > 0. If not set, the default is 736.

|

int | don’t

|

seal_det_limit_type |

Limit type for image side in seal text detection.

supports min and max; min ensures shortest side ≥ det_limit_side_len, max ensures longest side ≤ limit_side_len. If not set, the default is min.

|

str |

|

seal_det_thresh |

Pixel threshold. Pixels with scores above this value in the probability map are considered text.

Any float > 0

If not set, the default is 0.2.

|

float |

|

seal_det_box_thresh |

Box threshold. Boxes with average pixel scores above this value are considered text regions.Any float > 0. If not set, the default is 0.6.

|

float |

|

seal_det_unclip_ratio |

Expansion ratio for seal text detection. Higher value means larger expansion area.

any float > 0. If not set, the default is 0.5.

|

float |

|

seal_rec_score_thresh |

Recognition score threshold. Text results above this value will be kept.

Any float > 0

If not set, the default is 0.0 (no threshold).

|

float |

qianfan_api_key |

API key for the Qianfan Platform. | str |

pp_docbee_base_url |

URL for the multimodal large language model service. | str |

device |

The device used for inference. You can specify a particular card number:

|

str |

|

enable_hpi |

Whether to enable the high-performance inference plugin. | bool |

False |

use_tensorrt |

Whether to use the Paddle Inference TensorRT subgraph engine. If the model does not support acceleration through TensorRT, setting this flag will not enable acceleration. For Paddle with CUDA version 11.8, the compatible TensorRT version is 8.x (x>=6), and it is recommended to install TensorRT 8.6.1.6. |

bool |

False |

precision |

Compute precision, such as FP32 or FP16. | str |

fp32 |

enable_mkldnn |

Whether to enable MKL-DNN acceleration for inference. If MKL-DNN is unavailable or the model does not support it, acceleration will not be used even if this flag is set. | bool |

True |

mkldnn_cache_capacity |

MKL-DNN cache capacity. | int |

10 |

cpu_threads |

The number of threads to use when performing inference on the CPU. | int |

8 |

paddlex_config |

Path to PaddleX pipeline configuration file. | str |

This method will print the results to the terminal. The content printed to the terminal is explained as follows:

2.2 Python Script Experience¶

The command-line method is for a quick experience and to view results. Generally, in projects, integration via code is often required. You can download the Test File and use the following example code for inference:

from paddleocr import PPChatOCRv4Doc

chat_bot_config = {

"module_name": "chat_bot",

"model_name": "ernie-3.5-8k",

"base_url": "https://qianfan.baidubce.com/v2",

"api_type": "openai",

"api_key": "api_key", # your api_key

}

retriever_config = {

"module_name": "retriever",

"model_name": "embedding-v1",

"base_url": "https://qianfan.baidubce.com/v2",

"api_type": "qianfan",

"api_key": "api_key", # your api_key

}

mllm_chat_bot_config = {

"module_name": "chat_bot",

"model_name": "PP-DocBee2",

"base_url": "http://127.0.0.1:8080/", # your local mllm service url

"api_type": "openai",

"api_key": "api_key", # your api_key

}

pipeline = PPChatOCRv4Doc()

visual_predict_res = pipeline.visual_predict(

input="vehicle_certificate-1.png",

use_doc_orientation_classify=False,

use_doc_unwarping=False,

use_common_ocr=True,

use_seal_recognition=True,

use_table_recognition=True,

)

visual_info_list = []

for res in visual_predict_res:

visual_info_list.append(res["visual_info"])

layout_parsing_result = res["layout_parsing_result"]

vector_info = pipeline.build_vector(

visual_info_list, flag_save_bytes_vector=True, retriever_config=retriever_config

)

mllm_predict_res = pipeline.mllm_pred(

input="vehicle_certificate-1.png",

key_list=["Cab Seating Capacity"], # Translated: 驾驶室准乘人数

mllm_chat_bot_config=mllm_chat_bot_config,

)

mllm_predict_info = mllm_predict_res["mllm_res"]

chat_result = pipeline.chat(

key_list=["Cab Seating Capacity"], # Translated: 驾驶室准乘人数

visual_info=visual_info_list,

vector_info=vector_info,

mllm_predict_info=mllm_predict_info,

chat_bot_config=chat_bot_config,

retriever_config=retriever_config,

)

print(chat_result)

After running, the output is as follows:

The prediction process, API description, and output description for PP-ChatOCRv4 are as follows:

(1) Call PPChatOCRv4Doc to instantiate the PP-ChatOCRv4 pipeline object.

The relevant parameter descriptions are as follows:

| Parameter | Parameter Description | Parameter Type | Default Value |

|---|---|---|---|

layout_detection_model_name |

The name of the model used for layout region detection. If set toNone, the pipeline's default model will be used. |

str|None |

None |

layout_detection_model_dir |

The directory path of the layout region detection model. If set toNone, the official model will be downloaded. |

str|None |

None |

doc_orientation_classify_model_name |

The name of the document orientation classification model. If set toNone, the pipeline's default model will be used. |

str|None |

None |

doc_orientation_classify_model_dir |

The directory path of the document orientation classification model. If set toNone, the official model will be downloaded. |

str|None |

None |

doc_unwarping_model_name |

The name of the document unwarping model. If set toNone, the pipeline's default model will be used. |

str|None |

None |

doc_unwarping_model_dir |

The directory path of the document unwarping model. If set toNone, the official model will be downloaded. |

str|None |

None |

text_detection_model_name |

The name of the text detection model. If set toNone, the pipeline's default model will be used. |

str|None |

None |

text_detection_model_dir |

The directory path of the text detection model. If set toNone, the official model will be downloaded. |

str|None |

None |

text_recognition_model_name |

The name of the text recognition model. If set toNone, the pipeline's default model will be used. |

str|None |

None |

text_recognition_model_dir |

The directory path of the text recognition model. If set toNone, the official model will be downloaded. |

str|None |

None |

text_recognition_batch_size |

The batch size for the text recognition model. If set toNone, the batch size will default to 1. |

int|None |

None |

table_structure_recognition_model_name |

The name of the table structure recognition model. If set toNone, the pipeline's default model will be used. |

str|None |

None |

table_structure_recognition_model_dir |

The directory path of the table structure recognition model. If set toNone, the official model will be downloaded. |

str|None |

None |

seal_text_detection_model_name |

The name of the seal text detection model. If set toNone, the pipeline's default model will be used. |

str|None |

None |

seal_text_detection_model_dir |

The directory path of the seal text detection model. If set toNone, the official model will be downloaded. |

str|None |

None |

seal_text_recognition_model_name |

The name of the seal text recognition model. If set toNone, the pipeline's default model will be used. |

str|None |

None |

seal_text_recognition_model_dir |

The directory path of the seal text recognition model. If set toNone, the official model will be downloaded. |

str|None |

None |

seal_text_recognition_batch_size |

The batch size for the seal text recognition model. If set toNone, the batch size will default to 1. |

int|None |

None |

use_doc_orientation_classify |

Whether to load and use the document orientation classification module. If set toNone, the value initialized by the pipeline for this parameter will be used (defaults to True). |

bool|None |

None |

use_doc_unwarping |

Whether to load and use the document unwarping module. If set toNone, the value initialized by the pipeline for this parameter will be used (defaults to True). |

bool|None |

None |

use_textline_orientation |

Whether to load and use the text line orientation classification function. If set toNone, the value initialized by the pipeline for this parameter will be used (defaults to True). |

bool|None |

None |

use_seal_recognition |

Whether to load and use the seal text recognition sub-pipeline. If set toNone, the value initialized by the pipeline for this parameter will be used (defaults to True). |

bool|None |

None |

use_table_recognition |

Whether to load and use the table recognition sub-pipeline. If set toNone, the value initialized by the pipeline for this parameter will be used (defaults to True). |

bool|None |

None |

layout_threshold |

Layout model score threshold.

|

float|dict|None |

None |

layout_nms |

Whether to use Non-Maximum Suppression (NMS) as post-processing for layout detection. If set to None, the parameter will be set to the value initialized in the pipeline, which is set to True by default. |

bool|None |

None |

layout_unclip_ratio |

Expansion factor for the detection boxes of the layout region detection model.

|

float|Tuple[float,float]|dict|None |

None |

layout_merge_bboxes_mode |

Method for filtering overlapping boxes in layout region detection.

|

str|dict|None |

None |

text_det_limit_side_len |

Image side length limitation for text detection.

|

int|None |

None |

text_det_limit_type |

Type of side length limit for text detection.

|

str|None |

None |

text_det_thresh |

Detection pixel threshold. In the output probability map, pixels with scores greater than this threshold are considered text pixels.

|

float|None |

None |

text_det_box_thresh |

Detection box threshold. If the average score of all pixels within a detection result's bounding box is greater than this threshold, the result is considered a text region.

|

float|None |

None |

text_det_unclip_ratio |

Text detection expansion factor. This method is used to expand text regions; the larger the value, the larger the expanded area.

|

float|None |

None |

text_rec_score_thresh |

Text recognition threshold. Text results with scores greater than this threshold will be kept.

|

float|None |

None |

seal_det_limit_side_len |

Image side length limit for seal text detection.

|

int|None |

None |

seal_det_limit_type |

Type of image side length limit for seal text detection.

|

str|None |

None |

seal_det_thresh |

Detection pixel threshold. In the output probability map, pixels with scores greater than this threshold are considered text pixels.

|

float|None |

None |

seal_det_box_thresh |

Detection box threshold. If the average score of all pixels within a detection result's bounding box is greater than this threshold, the result is considered a text region.

|

float|None |

None |

seal_det_unclip_ratio |

Seal text detection expansion factor. This method is used to expand text regions; the larger the value, the larger the expanded area.

|

float|None |

None |

seal_rec_score_thresh |

Seal text recognition threshold. Text results with scores greater than this threshold will be kept.

|

float|None |

None |

retriever_config |

Configuration parameters for the vector retrieval large model. The configuration content is the following dictionary:

|

dict|None |

None |

mllm_chat_bot_config |

Configuration parameters for the multimodal large model. The configuration content is the following dictionary:

|

dict|None |

None |

chat_bot_config |

Configuration information for the large language model. The configuration content is the following dictionary:

|

dict|None |

None |

device |

Device used for inference. Supports specifying a specific card number:

|

str|None |

None |

enable_hpi |

Whether to enable high-performance inference. | bool |

False |

use_tensorrt |

Whether to use the Paddle Inference TensorRT subgraph engine. If the model does not support acceleration through TensorRT, setting this flag will not enable acceleration. For Paddle with CUDA version 11.8, the compatible TensorRT version is 8.x (x>=6), and it is recommended to install TensorRT 8.6.1.6. |

bool |

False |

precision |

Computation precision, e.g., fp32, fp16. | str |

"fp32" |

enable_mkldnn |

Whether to enable MKL-DNN acceleration for inference. If MKL-DNN is unavailable or the model does not support it, acceleration will not be used even if this flag is set. | bool |

True |

mkldnn_cache_capacity |

MKL-DNN cache capacity. | int |

10 |

cpu_threads |

Number of threads used when performing inference on CPU. | int |

8 |

paddlex_config |

PaddleX pipeline configuration file path. | str|None |

None |

(2) Call the visual_predict() method of the PP-ChatOCRv4 pipeline object to obtain visual prediction results. This method returns a list of results. Additionally, the pipeline also provides the visual_predict_iter() method. Both are identical in terms of parameter acceptance and result return, with the difference being that visual_predict_iter() returns a generator, allowing for step-by-step processing and retrieval of prediction results, suitable for handling large datasets or scenarios where memory saving is desired. You can choose either of these two methods based on your actual needs. The following are the parameters and their descriptions for the visual_predict() method:

| Parameter | Parameter Description | Parameter Type | Default Value |

|---|---|---|---|

input |

Data to be predicted, supports multiple input types, required.

|

Python Var|str|list |

|

use_doc_orientation_classify |

Whether to use the document orientation classification module during inference. | bool|None |

None |

use_doc_unwarping |

Whether to use the document image unwarping module during inference. | bool|None |

None |

use_textline_orientation |

Whether to use the text line orientation classification module during inference. | bool|None |

None |

use_seal_recognition |

Whether to use the seal text recognition sub-pipeline during inference. | bool|None |

None |

use_table_recognition |

Whether to use the table recognition sub-pipeline during inference. | bool|None |

None |

layout_threshold |

Same meaning as the instantiation parameters. If set to None, the instantiation value is used; otherwise, this parameter takes precedence. |

float|dict|None |

None |

layout_nms |

Same meaning as the instantiation parameters. If set to None, the instantiation value is used; otherwise, this parameter takes precedence. |

bool|None |

None |

layout_unclip_ratio |

Same meaning as the instantiation parameters. If set to None, the instantiation value is used; otherwise, this parameter takes precedence. |

float|Tuple[float,float]|dict|None |

None |

layout_merge_bboxes_mode |

Same meaning as the instantiation parameters. If set to None, the instantiation value is used; otherwise, this parameter takes precedence. |

str|dict|None |

None |

text_det_limit_side_len |

Same meaning as the instantiation parameters. If set to None, the instantiation value is used; otherwise, this parameter takes precedence. |

int|None |

None |

text_det_limit_type |

Same meaning as the instantiation parameters. If set to None, the instantiation value is used; otherwise, this parameter takes precedence. |

str|None |

None |

text_det_thresh |

Same meaning as the instantiation parameters. If set to None, the instantiation value is used; otherwise, this parameter takes precedence. |

float|None |

None |

text_det_box_thresh |

Same meaning as the instantiation parameters. If set to None, the instantiation value is used; otherwise, this parameter takes precedence. |

float|None |

None |

text_det_unclip_ratio |

Same meaning as the instantiation parameters. If set to None, the instantiation value is used; otherwise, this parameter takes precedence. |

float|None |

None |

text_rec_score_thresh |

Same meaning as the instantiation parameters. If set to None, the instantiation value is used; otherwise, this parameter takes precedence. |

float|None |

None |

seal_det_limit_side_len |

Same meaning as the instantiation parameters. If set to None, the instantiation value is used; otherwise, this parameter takes precedence. |

int|None |

None |

seal_det_limit_type |

Same meaning as the instantiation parameters. If set to None, the instantiation value is used; otherwise, this parameter takes precedence. |

str|None |

None |

seal_det_thresh |

Same meaning as the instantiation parameters. If set to None, the instantiation value is used; otherwise, this parameter takes precedence. |

float|None |

None |

seal_det_box_thresh |

Same meaning as the instantiation parameters. If set to None, the instantiation value is used; otherwise, this parameter takes precedence. |

float|None |

None |

seal_det_unclip_ratio |

Same meaning as the instantiation parameters. If set to None, the instantiation value is used; otherwise, this parameter takes precedence. |

float|None |

None |

seal_rec_score_thresh |

Same meaning as the instantiation parameters. If set to None, the instantiation value is used; otherwise, this parameter takes precedence. |

float|None |

None |

(3) Process the visual prediction results.

The prediction result for each sample is of `dict` type, containing two fields: `visual_info` and `layout_parsing_result`. Visual information (including `normal_text_dict`, `table_text_list`, `table_html_list`, etc.) is obtained through `visual_info`, and the information for each sample is placed in the `visual_info_list` list. The content of this list will later be fed into the large language model. Of course, you can also obtain the layout parsing results through `layout_parsing_result`. This result contains content such as tables, text, and images found in the file or image, and supports operations like printing, saving as an image, and saving as a `json` file:......

for res in visual_predict_res:

visual_info_list.append(res["visual_info"])

layout_parsing_result = res["layout_parsing_result"]

layout_parsing_result.print()

layout_parsing_result.save_to_img("./output")

layout_parsing_result.save_to_json("./output")

layout_parsing_result.save_to_xlsx("./output")

layout_parsing_result.save_to_html("./output")

......

| Method | Method Description | Parameter | Parameter Type | Parameter Description | Default Value |

|---|---|---|---|---|---|

print() |

Prints the result to the terminal | format_json |

bool |

Whether to format the output content using JSON indentation. |

True |

indent |

int |

Specifies the indentation level to beautify the output JSON data for better readability, effective only when format_json is True. |

4 | ||

ensure_ascii |

bool |

Controls whether to escape non-ASCII characters to Unicode. Set to True to escape all non-ASCII characters; False to preserve original characters, effective only when format_json is True. |

False |

||

save_to_json() |

Saves the result as a JSON format file | save_path |

str |

Save file path. When it's a directory, the saved file name will be consistent with the input file name. | None |

indent |

int |

Specifies the indentation level to beautify the output JSON data for better readability, effective only when format_json is True. |

4 | ||

ensure_ascii |

bool |

Controls whether to escape non-ASCII characters to Unicode. Set to True to escape all non-ASCII characters; False to preserve original characters, effective only when format_json is True. |

False |

||

save_to_img() |

Saves the visualization images of various intermediate modules as PNG format images. | save_path |

str |

Save file path, supports directory or file path. | None |

save_to_html() |

Saves the tables in the file as HTML format files. | save_path |

str |

Save file path, supports directory or file path. | None |

save_to_xlsx() |

Saves the tables in the file as XLSX format files. | save_path |

str |

Save file path, supports directory or file path. | None |

| Property | Property Description |

|---|---|

json |

Gets the prediction results in json format. |

img |

Gets the visualization images in dict format. |

(4) Call the build_vector() method of the PP-ChatOCRv4 pipeline object to build vectors for the text content.

The following are the parameters and their descriptions for the `build_vector()` method:

| Parameter | Parameter Description | Parameter Type | Default Value |

|---|---|---|---|

visual_info |

Visual information, can be a dictionary containing visual information, or a list of such dictionaries. | list|dict |

|

min_characters |

Minimum number of characters. A positive integer greater than 0, can be determined based on the token length supported by the large language model. | int |

3500 |

block_size |

Block size when building a vector library for long text. A positive integer greater than 0, can be determined based on the token length supported by the large language model. | int |

300 |

flag_save_bytes_vector |

Whether to save text as a binary file. | bool |

False |

retriever_config |

Configuration parameters for the vector retrieval large model, same as the parameter during instantiation. If set to None, uses instantiation parameters; otherwise, this parameter takes precedence. |

dict|None |

None |

(5) Call the mllm_pred() method of the PP-ChatOCRv4 pipeline object to get the extraction results from the multimodal large model.

The following are the parameters and their descriptions for the `mllm_pred()` method:

| Parameter | Parameter Description | Parameter Type | Default Value |

|---|---|---|---|

input |

Data to be predicted, supports multiple input types, required.

|

Python Var|str |

|

key_list |

A single key or a list of keys used for extracting information. | Union[str, List[str]] |

None |

mllm_chat_bot_config |

Configuration parameters for the multimodal large model, same as the parameter during instantiation. If set to None, uses instantiation parameters; otherwise, this parameter takes precedence. |

dict|None |

None |

(6) Call the chat() method of the PP-ChatOCRv4 pipeline object to extract key information.

The following are the parameters and their descriptions for the `chat()` method:

| Parameter | Parameter Description | Parameter Type | Default Value |

|---|---|---|---|

key_list |

A single key or a list of keys used for extracting information. | Union[str, List[str]] |

|

visual_info |

Visual information result. | List[dict] |

|

use_vector_retrieval |

Whether to use vector retrieval. | bool |

True |

vector_info |

Vector information used for retrieval. | dict|None |

None |

min_characters |

Required minimum number of characters. A positive integer greater than 0. | int |

3500 |

text_task_description |

Description of the text task. | str|None |

None |

text_output_format |

Output format for text results. | str|None |

None |

text_rules_str |

Rules for generating text results. | str|None |

None |

text_few_shot_demo_text_content |

Text content for few-shot demonstration. | str|None |

None |

text_few_shot_demo_key_value_list |

Key-value list for few-shot demonstration. | str|None |

None |

table_task_description |

Description of the table task. | str|None |

None |

table_output_format |

Output format for table results. | str|None |

None |

table_rules_str |

Rules for generating table results. | str|None |

None |

table_few_shot_demo_text_content |

Text content for table few-shot demonstration. | str|None |

None |

table_few_shot_demo_key_value_list |

Key-value list for table few-shot demonstration. | str|None |

None |

mllm_predict_info |

Multimodal large model result. | dict|None |

None

|

mllm_integration_strategy |

Data fusion strategy for multimodal large model and large language model, supports using one of them separately or fusing the results of both. Options: "integration", "llm_only", and "mllm_only". | str |

"integration" |

chat_bot_config |

Configuration information for the large language model, same as the parameter during instantiation. | dict|None |

None |

retriever_config |

Configuration parameters for the vector retrieval large model, same as the parameter during instantiation. If set to None, uses instantiation parameters; otherwise, this parameter takes precedence. |

dict|None |

None |

3. Development Integration/Deployment¶

If the pipeline meets your requirements for inference speed and accuracy in production, you can proceed directly with development integration/deployment.

If you need to apply the pipeline directly in your Python project, you can refer to the sample code in 2.2 Python Script Experience.

Additionally, PaddleX provides two other deployment methods, detailed as follows:

🚀 High-Performance Inference: In actual production environments, many applications have stringent standards for the performance metrics of deployment strategies (especially response speed) to ensure efficient system operation and smooth user experience. To this end, PaddleX provides a high-performance inference plugin aimed at deeply optimizing model inference and pre/post-processing to significantly speed up the end-to-end process. For detailed instructions on high-performance inference, please refer to the High-Performance Inference Guide.

☁️ Serving: Serving is a common deployment form in actual production environments. By encapsulating the inference functionality as a service, clients can access these services through network requests to obtain inference results. PaddleX supports multiple serving solutions for pipelines. For detailed instructions on serving, please refer to the Service Deployment Guide.

Below are the API references for basic serving and multi-language service invocation examples:

API Reference

For the main operations provided by the service:

- The HTTP request method is POST.

- Both the request body and response body are JSON data (JSON objects).

- When the request is successfully processed, the response status code is

200, and the response body has the following properties:

| Name | Type | Meaning |

|---|---|---|

logId |

string |

UUID of the request. |

errorCode |

integer |

Error code. Fixed at 0. |

errorMsg |

string |

Error description. Fixed at "Success". |

result |

object |

Operation result. |

- When the request is not successfully processed, the response body has the following properties:

| Name | Type | Meaning |

|---|---|---|

logId |

string |

UUID of the request. |

errorCode |

integer |

Error code. Same as the response status code. |

errorMsg |

string |

Error description. |

The main operations provided by the service are as follows:

analyzeImages

Uses computer vision models to analyze images, obtain OCR, table recognition results, etc., and extract key information from the images.

POST /chatocr-visual

- Properties of the request body:

| Name | Type | Meaning | Required |

|---|---|---|---|

file |

string |

URL of an image file or PDF file accessible to the server, or Base64 encoded result of the content of the above file types. By default, for PDF files exceeding 10 pages, only the content of the first 10 pages will be processed. To remove the page limit, please add the following configuration to the pipeline configuration file: |

Yes |

fileType |

integer | null |

File type. 0 represents a PDF file, 1 represents an image file. If this property is not present in the request body, the file type will be inferred based on the URL. |

No |

useDocOrientationClassify |

boolean | null |

Please refer to the description of the use_doc_orientation_classify parameter of the pipeline object's visual_predict method. |

No |

useDocUnwarping |

boolean | null |

Please refer to the description of the use_doc_unwarping parameter of the pipeline object's visual_predict method. |

No |

useSealRecognition |

boolean | null |

Please refer to the description of the use_seal_recognition parameter of the pipeline object's visual_predict method. |

No |

useTableRecognition |

boolean | null |

Please refer to the description of the use_table_recognition parameter of the pipeline object's visual_predict method. |

No |

layoutThreshold |

number | null |

Please refer to the description of the layout_threshold parameter of the pipeline object's visual_predict method. |

No |

layoutNms |

boolean | null |

Please refer to the description of the layout_nms parameter of the pipeline object's visual_predict method. |

No |

layoutUnclipRatio |

number | array | object | null |

Please refer to the description of the layout_unclip_ratio parameter of the pipeline object's visual_predict method. |

No |

layoutMergeBboxesMode |

string | object | null |

Please refer to the description of the layout_merge_bboxes_mode parameter of the pipeline object's visual_predict method. |

No |

textDetLimitSideLen |

integer | null |

Please refer to the description of the text_det_limit_side_len parameter of the pipeline object's visual_predict method. |

No |

textDetLimitType |

string | null |

Please refer to the description of the text_det_limit_type parameter of the pipeline object's visual_predict method. |

No |

textDetThresh |

number | null |

Please refer to the description of the text_det_thresh parameter of the pipeline object's visual_predict method. |

No |

textDetBoxThresh |

number | null |

Please refer to the description of the text_det_box_thresh parameter of the pipeline object's visual_predict method. |

No |

textDetUnclipRatio |

number | null |

Please refer to the description of the text_det_unclip_ratio parameter of the pipeline object's visual_predict method. |

No |

textRecScoreThresh |

number | null |

Please refer to the description of the text_rec_score_thresh parameter of the pipeline object's visual_predict method. |

No |

sealDetLimitSideLen |

integer | null |

Please refer to the description of the seal_det_limit_side_len parameter of the pipeline object's visual_predict method. |

No |

sealDetLimitType |

string | null |

Please refer to the description of the seal_det_limit_type parameter of the pipeline object's visual_predict method. |

No |

sealDetThresh |

number | null |

Please refer to the description of the seal_det_thresh parameter of the pipeline object's visual_predict method. |

No |

sealDetBoxThresh |

number | null |

Please refer to the description of the seal_det_box_thresh parameter of the pipeline object's visual_predict method. |

No |

sealDetUnclipRatio |

number | null |

Please refer to the description of the seal_det_unclip_ratio parameter of the pipeline object's visual_predict method. |

No |

sealRecScoreThresh |

number | null |

Please refer to the description of the seal_rec_score_thresh parameter of the pipeline object's visual_predict method. |

No |

visualize |

boolean | null |

Whether to return the final visualization image and intermediate images during the processing.

For example, adding the following setting to the pipeline config file: visualize parameter in the request.If neither the request body nor the configuration file is set (If visualize is set to null in the request and not defined in the configuration file), the image is returned by default.

|

No |

- When the request is successfully processed, the

resultof the response body has the following properties:

| Name | Type | Meaning |

|---|---|---|

layoutParsingResults |

array |

Analysis results obtained using computer vision models. The array length is 1 (for image input) or the actual number of document pages processed (for PDF input). For PDF input, each element in the array represents the result of each page actually processed in the PDF file. |

visualInfo |

array |

Key information in the image, which can be used as input for other operations. |

dataInfo |

object |

Input data information. |

Each element in layoutParsingResults is an object with the following properties:

| Name | Type | Meaning |

|---|---|---|

prunedResult |

object |

A simplified version of the res field in the JSON representation of the layout_parsing_result generated by the pipeline object's visual_predict method, with the input_path and page_index fields removed. |

outputImages |

object | null |

Refer to the description of img attribute of the pipeline's visual prediction result. |

inputImage |

string | null |

Input image. The image is in JPEG format and encoded using Base64. |

buildVectorStore

Builds a vector database.

POST /chatocr-vector

- Properties of the request body:

| Name | Type | Meaning | Required |

|---|---|---|---|

visualInfo |

array |

Key information in the image. Provided by the analyzeImages operation. |

Yes |

minCharacters |

integer |

Please refer to the description of the min_characters parameter of the pipeline object's build_vector method. |

No |

blockSize |

integer |

Please refer to the description of the block_size parameter of the pipeline object's build_vector method. |

No |

retrieverConfig |

object | null |

Please refer to the description of the retriever_config parameter of the pipeline object's build_vector method. |

No |

- When the request is successfully processed, the

resultof the response body has the following properties:

| Name | Type | Meaning |

|---|---|---|

vectorInfo |

object |

Serialized result of the vector database, which can be used as input for other operations. |

invokeMLLMInvoke the MLLM.

POST /chatocr-mllm

- Properties of the request body:

| Name | Type | Meaning | Required |

|---|---|---|---|

image |

string |

URL of an image file accessible by the server or the Base64-encoded content of the image file. | Yes |

keyList |

array |

List of keys. | Yes |

mllmChatBotConfig |

object | null |

Please refer to the description of the mllm_chat_bot_config parameter of the pipeline object's mllm_pred method. |

No |

- When the request is successfully processed, the

resultof the response body has the following property:

| Name | Type | Meaning |

|---|---|---|

mllmPredictInfo |

object |

MLLM invocation result. |

chat

Interacts with large language models to extract key information using them.

POST /chatocr-chat

- Properties of the request body:

| Name | Type | Meaning | Required |

|---|---|---|---|

keyList |

array |

List of keys. | Yes |

visualInfo |

object |

Key information in the image. Provided by the analyzeImages operation. |

Yes |

useVectorRetrieval |

boolean |

Please refer to the description of the use_vector_retrieval parameter of the pipeline object's chat method. |

No |

vectorInfo |

object | null |

Serialized result of the vector database. Provided by the buildVectorStore operation. Please note that the deserialization process involves performing an unpickle operation. To prevent malicious attacks, be sure to use data from trusted sources. |

No |

minCharacters |

integer |

Please refer to the description of the min_characters parameter of the pipeline object's chat method. |

No |

textTaskDescription |

string |

Please refer to the description of the text_task_description parameter of the pipeline object's chat method. |

No |

textOutputFormat |

string | null |

Please refer to the description of the text_output_format parameter of the pipeline object's chat method. |

No |

textRulesStr |

string | null |

Please refer to the description of the text_rules_str parameter of the pipeline object's chat method. |

No |

textFewShotDemoTextContent |

string | null |

Please refer to the description of the text_few_shot_demo_text_content parameter of the pipeline object's chat method. |

No |

textFewShotDemoKeyValueList |

string | null |

Please refer to the description of the text_few_shot_demo_key_value_list parameter of the pipeline object's chat method. |

No |

tableTaskDescription |

string | null |

Please refer to the description of the table_task_description parameter of the pipeline object's chat method. |

No |

tableOutputFormat |

string | null |

Please refer to the description of the table_output_format parameter of the pipeline object's chat method. |

No |

tableRulesStr |

string | null |

Please refer to the description of the table_rules_str parameter of the pipeline object's chat method. |

No |

tableFewShotDemoTextContent |

string | null |

Please refer to the description of the table_few_shot_demo_text_content parameter of the pipeline object's chat method. |

No |

tableFewShotDemoKeyValueList |

string | null |

Please refer to the description of the table_few_shot_demo_key_value_list parameter of the pipeline object's chat method. |

No |

mllmPredictInfo |

object | null |

MLLM invocation result. Provided by the invokeMllm operation. |

No |

mllmIntegrationStrategy |

string |

Please refer to the description of the mllm_integration_strategy parameter of the pipeline object's chat method. |

No |

chatBotConfig |

object | null |

Please refer to the description of the chat_bot_config parameter of the pipeline object's chat method. |

No |

retrieverConfig |

object | null |