Visual Studio 2022 Community CMake Compilation Guide

PaddleOCR has been tested on Windows with Visual Studio 2022 Community. Microsoft added direct support for managing CMake projects in Visual Studio 2017, but that support did not become stable and reliable until Visual Studio 2019. If you want to manage and build the project with CMake, we recommend Visual Studio 2022.

All examples below assume the working directory is D:\projects\cpp.

1. Environment Preparation

1.1 Install Required Dependencies

- Visual Studio 2019 or newer

- CUDA 10.2 and cuDNN 7+ (required only for the GPU version of the prediction library). For the GPU version, install the NVIDIA CUDA Toolkit (installed under NVIDIA GPU Computing Toolkit) and download the NVIDIA cuDNN library separately.

- CMake 3.22+

Make sure the dependencies above are installed before proceeding. This tutorial uses Visual Studio 2022 Community Edition.

1.2 Download PaddlePaddle C++ Prediction Library and OpenCV

1.2.1 Download PaddlePaddle C++ Prediction Library

The PaddlePaddle C++ prediction library is provided as precompiled packages for different CPU and CUDA configurations. Download the package that matches your environment from the C++ Prediction Library Download List.

After extraction, the D:\projects\paddle_inference directory should contain:

paddle_inference
├── paddle          # Core Paddle library and header files
├── third_party     # Third-party dependencies and headers
└── version.txt     # Version and compilation information
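Before pointing CMake at the library, it can help to confirm that the archive was extracted with the layout shown above. The following is a minimal C++17 sketch using std::filesystem; MissingPaddleEntries and ReadVersionInfo are hypothetical helpers for illustration, not part of Paddle Inference or PaddleOCR.

```cpp
#include <cassert>
#include <filesystem>
#include <fstream>
#include <iterator>
#include <string>
#include <utility>
#include <vector>

namespace fs = std::filesystem;

// Returns the names of expected top-level entries that are missing under
// `root` (the extracted paddle_inference folder). Empty means the layout looks complete.
std::vector<std::string> MissingPaddleEntries(const fs::path& root) {
    assert(!root.empty());
    // {name, must_be_directory}, mirroring the directory listing in this guide.
    const std::vector<std::pair<std::string, bool>> expected = {
        {"paddle", true},
        {"third_party", true},
        {"version.txt", false},
    };
    std::vector<std::string> missing;
    for (const auto& [name, must_be_dir] : expected) {
        const fs::path p = root / name;
        const bool ok = must_be_dir ? fs::is_directory(p) : fs::is_regular_file(p);
        if (!ok) missing.push_back(name);
    }
    return missing;
}

// Returns the full contents of version.txt, or an empty string if it cannot be opened.
std::string ReadVersionInfo(const fs::path& root) {
    std::ifstream in(root / "version.txt");
    if (!in) return {};
    return std::string(std::istreambuf_iterator<char>(in),
                       std::istreambuf_iterator<char>());
}
```

For example, MissingPaddleEntries(R"(D:\projects\paddle_inference)") should return an empty vector after a correct extraction, and ReadVersionInfo on the same path returns the build information recorded in version.txt, which is a quick way to confirm you downloaded the CPU or GPU variant you intended.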

1.2.2 Install and Configure OpenCV

- Download OpenCV for Windows from the official release page.

- Run the downloaded executable and extract OpenCV to a directory of your choice, e.g., D:\projects\cpp\opencv.

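1.2.3 Download PaddleOCR Code

Clone the PaddleOCR repository (https://github.com/PaddlePaddle/PaddleOCR) into D:\projects\cpp, so that the C++ inference code is located at D:\projects\cpp\PaddleOCR\deploy\cpp_infer.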

2. Running the Project

Step 1: Create a Visual Studio Project

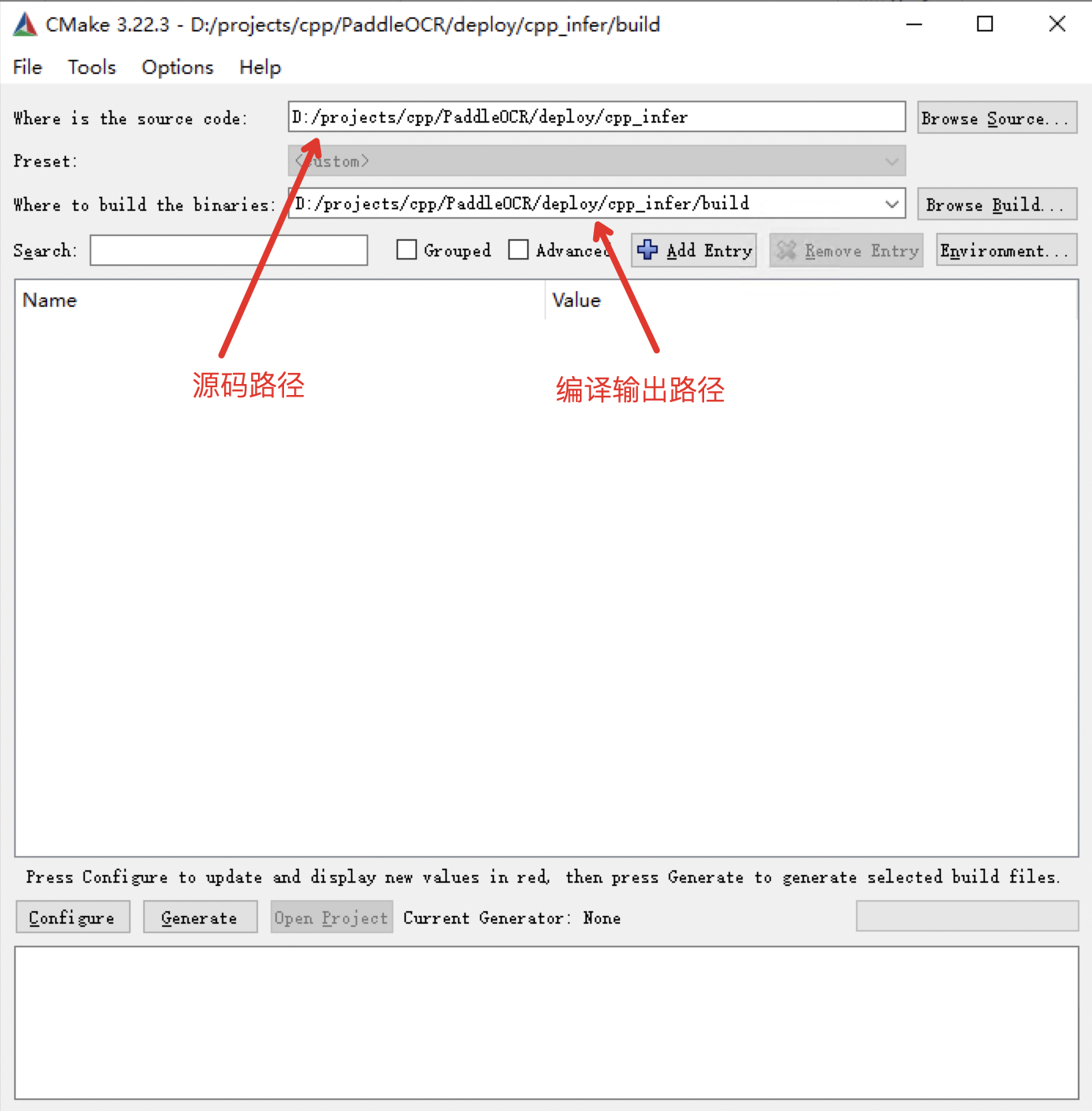

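After installing CMake, open the cmake-gui application. In the first input box (Where is the source code), enter the source directory, D:\projects\cpp\PaddleOCR\deploy\cpp_infer. In the second input box (Where to build the binaries), enter the build output directory, e.g., D:\projects\cpp\PaddleOCR\deploy\cpp_infer\build.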

Step 2: Run CMake Configuration

Click the Configure button at the bottom of the window. On the first run, a dialog asks you to choose the generator for the project: select your Visual Studio version, set the target platform to x64, and click Finish to start the configuration.

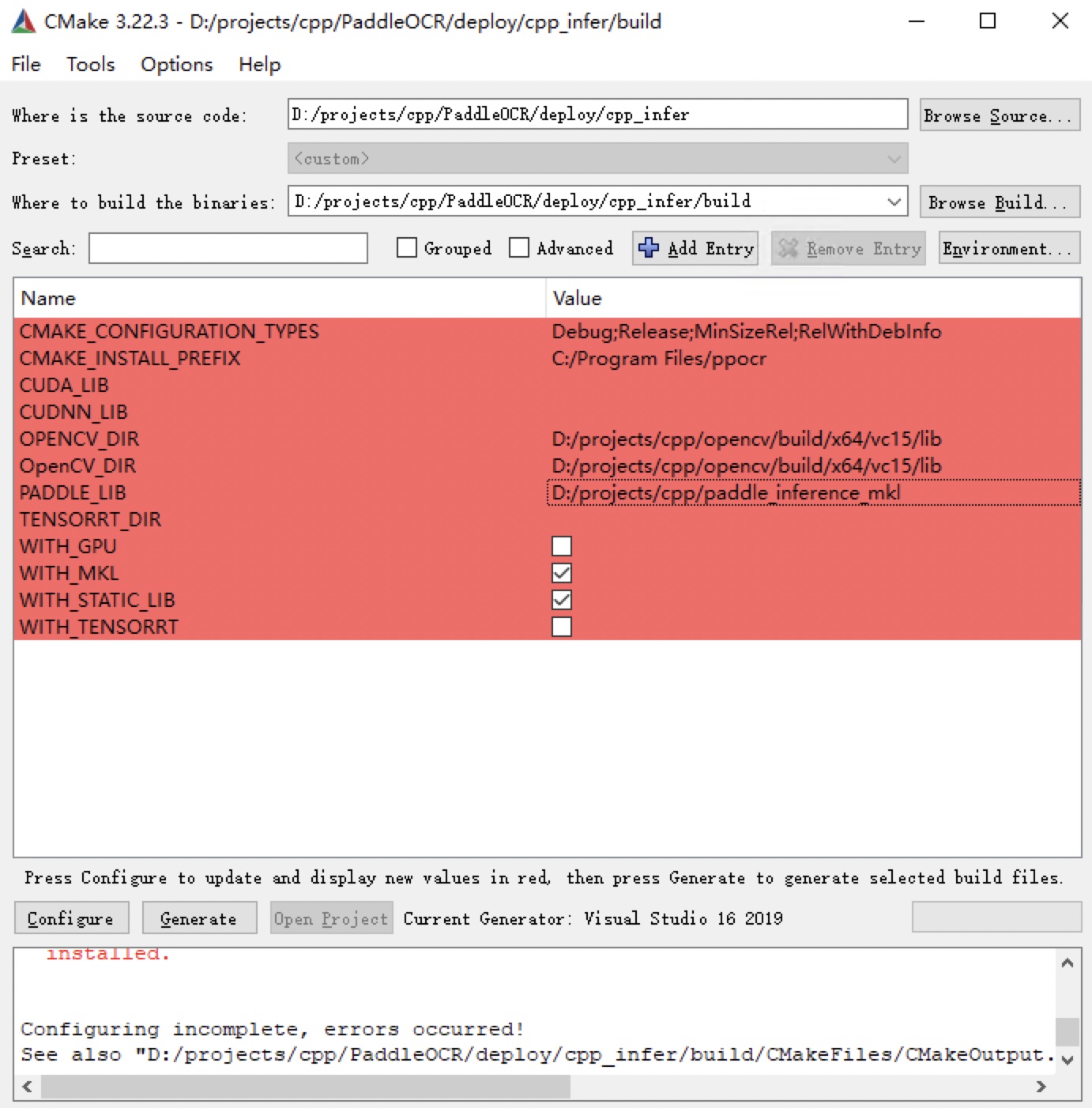

The first run will report errors. This is expected, because OpenCV and the prediction library have not been configured yet. Configure them as follows:

- For the CPU version, configure the following variables:

    - OPENCV_DIR: Path to the OpenCV lib folder
    - OpenCV_DIR: Same as OPENCV_DIR
    - PADDLE_LIB: Path to the paddle_inference folder

- For the GPU version, also configure the following variables:

    - CUDA_LIB: CUDA library path, e.g., C:\Program Files\NVIDIA GPU Computing Toolkit\CUDA\v11.2\lib\x64
    - CUDNN_LIB: Path to the extracted cuDNN library, e.g., D:\CuDNN-8.9.7.29
    - TENSORRT_DIR: Path to the extracted TensorRT, e.g., D:\TensorRT-8.0.1.6
    - WITH_GPU: Check this option
    - WITH_TENSORRT: Check this option

Example configuration:

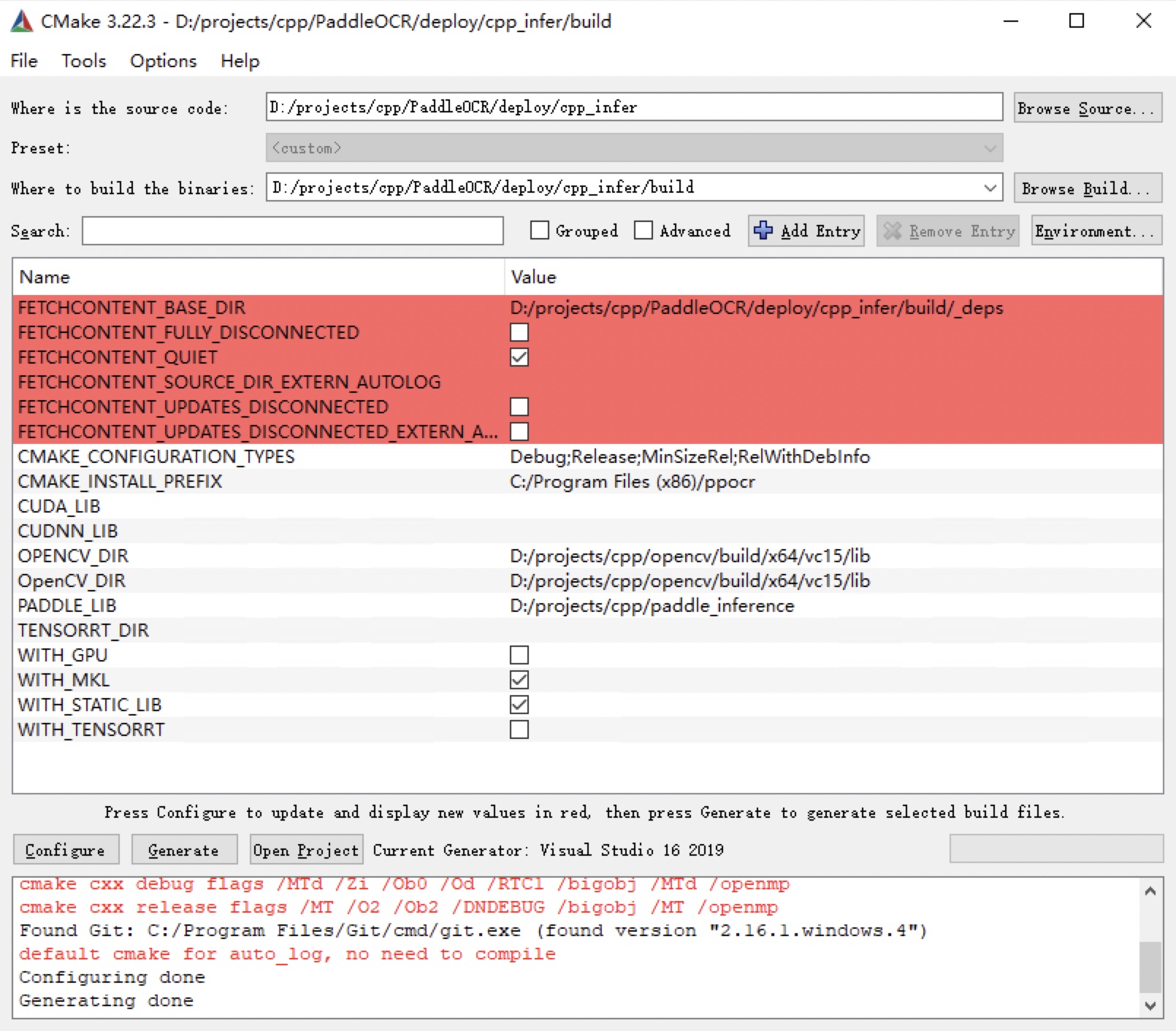

Once configured, click Configure again.

Note:

- If you are using the openblas version of the prediction library, uncheck WITH_MKL.
- If you encounter the error unable to access 'https://github.com/LDOUBLEV/AutoLog.git/': gnutls_handshake() failed, update deploy/cpp_infer/external-cmake/auto-log.cmake to use https://gitee.com/Double_V/AutoLog instead.
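The cmake-gui configuration above can also be run from the command line. The C++17 sketch below assembles an equivalent cmake argument list from the cache variables named in this guide. The OcrBuildConfig struct and the CmakeArgs function are hypothetical, and the generator string "Visual Studio 17 2022" with -A x64 assumes Visual Studio 2022 targeting x64. The -S, -B, -G, -A and -D options themselves are standard CMake command-line options.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical settings struct mirroring the cache variables set in cmake-gui.
struct OcrBuildConfig {
    std::string opencv_dir;      // OPENCV_DIR and OpenCV_DIR (OpenCV lib folder)
    std::string paddle_lib;      // PADDLE_LIB (paddle_inference folder)
    bool with_mkl = true;        // WITH_MKL: set to false for the openblas build
    bool with_gpu = false;       // WITH_GPU
    bool with_tensorrt = false;  // WITH_TENSORRT (only meaningful with WITH_GPU)
    std::string cuda_lib;        // CUDA_LIB (GPU only)
    std::string cudnn_lib;       // CUDNN_LIB (GPU only)
    std::string tensorrt_dir;    // TENSORRT_DIR (TensorRT only)
};

// Builds an argv-style list for a cmake configure run equivalent to the GUI steps.
std::vector<std::string> CmakeArgs(const OcrBuildConfig& c,
                                   const std::string& source_dir,
                                   const std::string& build_dir) {
    assert(!c.opencv_dir.empty() && !c.paddle_lib.empty());
    auto def = [](const std::string& key, const std::string& value) {
        return "-D" + key + "=" + value;
    };
    auto on_off = [](bool b) { return std::string(b ? "ON" : "OFF"); };

    std::vector<std::string> args = {
        "cmake", "-S", source_dir, "-B", build_dir,
        "-G", "Visual Studio 17 2022", "-A", "x64",
        def("OPENCV_DIR", c.opencv_dir),
        def("OpenCV_DIR", c.opencv_dir),
        def("PADDLE_LIB", c.paddle_lib),
        def("WITH_MKL", on_off(c.with_mkl)),
        def("WITH_GPU", on_off(c.with_gpu)),
        def("WITH_TENSORRT", on_off(c.with_tensorrt)),
    };
    // GPU-only variables are emitted only when the corresponding option is on.
    if (c.with_gpu) {
        args.push_back(def("CUDA_LIB", c.cuda_lib));
        args.push_back(def("CUDNN_LIB", c.cudnn_lib));
    }
    if (c.with_tensorrt) {
        args.push_back(def("TENSORRT_DIR", c.tensorrt_dir));
    }
    return args;
}
```

Each element is one argument, so paths containing spaces (such as the CUDA_LIB example under C:\Program Files) need no extra quoting when the list is passed to a process-launching API. If you paste the resulting command into cmd instead, wrap such arguments in double quotes.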

Step 3: Generate Visual Studio Project

Click Generate to create the .sln file for the Visual Studio project.

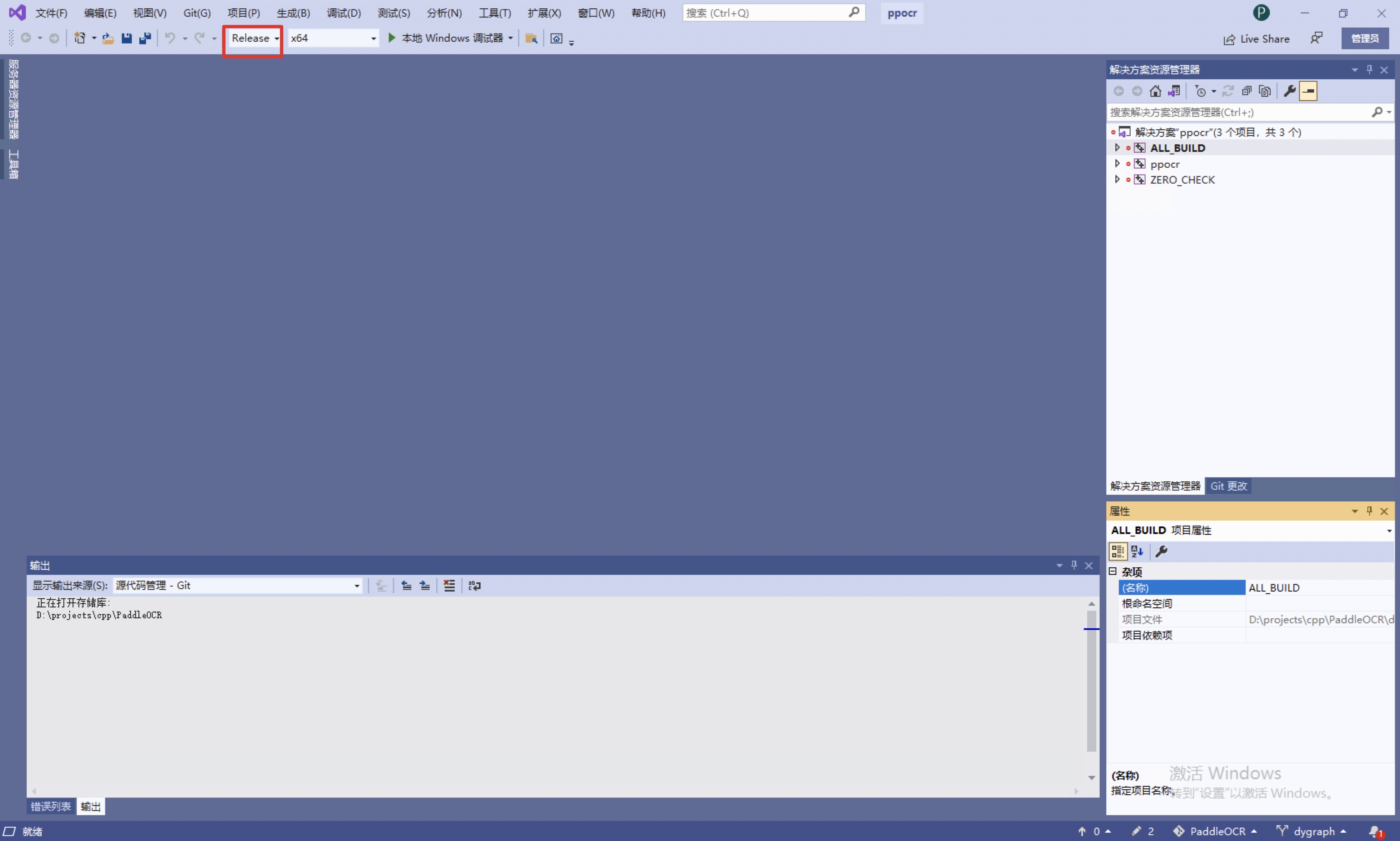

Click Open Project to launch the project in Visual Studio. The interface should look like this:

Before building the solution, perform the following steps:

- Change the build configuration from Debug to Release.
- Download dirent.h and copy it to the Visual Studio include directory, e.g., C:\Program Files\Microsoft Visual Studio\2022\Community\VC\Tools\MSVC\include.
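dirent.h is a POSIX header that MSVC does not provide, which is why it has to be added manually. After copying it, a small program like the following C++ sketch can confirm that the header is found and that its opendir, readdir and closedir functions work. ListDirectory is a hypothetical helper for this check, not a PaddleOCR function.

```cpp
#include <cassert>
#include <dirent.h>  // with MSVC this should resolve to the dirent.h you copied
#include <string>
#include <vector>

// Appends the names of the entries in `dir` to `names`, skipping "." and "..",
// using the POSIX opendir/readdir/closedir API. Returns false if `dir` cannot be opened.
bool ListDirectory(const std::string& dir, std::vector<std::string>& names) {
    DIR* d = opendir(dir.c_str());
    if (d == nullptr) return false;
    while (const dirent* entry = readdir(d)) {
        const std::string name = entry->d_name;
        if (name != "." && name != "..") names.push_back(name);
    }
    closedir(d);
    return true;
}
```

If this compiles and ListDirectory(".", names) returns true in a Release build, the header is visible to the compiler. On Linux or macOS the same code builds against the system dirent.h.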

Click Build -> Build Solution. Once completed, the ppocr.exe file should appear in the build/Release/ folder.

Before running, copy the following files to build/Release/:

- paddle_inference/paddle/lib/paddle_inference.dll
- paddle_inference/paddle/lib/common.dll
- paddle_inference/third_party/install/mklml/lib/mklml.dll
- paddle_inference/third_party/install/mklml/lib/libiomp5md.dll
- paddle_inference/third_party/install/onednn/lib/mkldnn.dll
- opencv/build/x64/vc15/bin/opencv_world455.dll
- If using the openblas version, also copy paddle_inference/third_party/install/openblas/lib/openblas.dll.
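The copy step above can be scripted. The following is a minimal C++17 sketch using std::filesystem. It assumes a base directory that contains both the paddle_inference and opencv folders (adjust the relative paths if yours live elsewhere) and that the OpenCV DLL name matches your OpenCV version and vc folder. kRuntimeDlls and CopyRuntimeDlls are hypothetical names, not part of PaddleOCR.

```cpp
#include <cassert>
#include <filesystem>
#include <string>
#include <vector>

namespace fs = std::filesystem;

// Runtime DLLs from the list in this guide, relative to a base directory that
// is assumed to contain both paddle_inference and opencv.
const std::vector<std::string> kRuntimeDlls = {
    "paddle_inference/paddle/lib/paddle_inference.dll",
    "paddle_inference/paddle/lib/common.dll",
    "paddle_inference/third_party/install/mklml/lib/mklml.dll",
    "paddle_inference/third_party/install/mklml/lib/libiomp5md.dll",
    "paddle_inference/third_party/install/onednn/lib/mkldnn.dll",
    "opencv/build/x64/vc15/bin/opencv_world455.dll",  // depends on OpenCV version
};

// Copies each DLL from `base` into `release_dir` (e.g. build/Release),
// overwriting older copies. Returns the relative paths that were not found.
std::vector<std::string> CopyRuntimeDlls(const fs::path& base,
                                         const fs::path& release_dir,
                                         bool with_openblas = false) {
    assert(!release_dir.empty());
    std::vector<std::string> dlls = kRuntimeDlls;
    if (with_openblas) {
        dlls.push_back("paddle_inference/third_party/install/openblas/lib/openblas.dll");
    }
    fs::create_directories(release_dir);
    std::vector<std::string> missing;
    for (const std::string& rel : dlls) {
        const fs::path src = base / rel;
        if (!fs::is_regular_file(src)) {
            missing.push_back(rel);
            continue;
        }
        fs::copy_file(src, release_dir / src.filename(),
                      fs::copy_options::overwrite_existing);
    }
    return missing;
}
```

Reporting missing files instead of stopping at the first one lets you see every path that needs adjusting in a single run.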

Step 4: Run the Prediction

The compiled executable is located in the build/Release/ directory. Open cmd and navigate to D:\projects\cpp\PaddleOCR\deploy\cpp_infer\:

Run the prediction with ppocr.exe. For more usage details, refer to the Instructions section on running the demo.

# Switch terminal encoding to UTF-8

CHCP 65001

# If using PowerShell, run this command before execution to fix character encoding issues:

$OutputEncoding = [console]::InputEncoding = [console]::OutputEncoding = New-Object System.Text.UTF8Encoding

# Execute prediction

.\build\Release\ppocr.exe system --det_model_dir=D:\projects\cpp\ch_PP-OCRv2_det_slim_quant_infer --rec_model_dir=D:\projects\cpp\ch_PP-OCRv2_rec_slim_quant_infer --image_dir=D:\projects\cpp\PaddleOCR\doc\imgs\11.jpg
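Before running, it is worth checking that --det_model_dir and --rec_model_dir point at folders that actually contain an inference model. This C++17 sketch assumes the common Paddle inference export file names inference.pdmodel and inference.pdiparams; newer model releases may use different file names, so confirm against the contents of your downloaded model folders. MissingModelFiles is a hypothetical helper.

```cpp
#include <cassert>
#include <filesystem>
#include <string>
#include <vector>

namespace fs = std::filesystem;

// Assumed file names of an exported Paddle inference model directory.
const std::vector<std::string> kModelFiles = {"inference.pdmodel", "inference.pdiparams"};

// Returns the full path of every expected model file missing from `model_dir`.
std::vector<std::string> MissingModelFiles(const fs::path& model_dir) {
    assert(!model_dir.empty());
    std::vector<std::string> missing;
    for (const std::string& name : kModelFiles) {
        const fs::path p = model_dir / name;
        if (!fs::is_regular_file(p)) missing.push_back(p.string());
    }
    return missing;
}
```

For example, MissingModelFiles(R"(D:\projects\cpp\ch_PP-OCRv2_det_slim_quant_infer)") should return an empty vector if the detection model was extracted into that folder.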

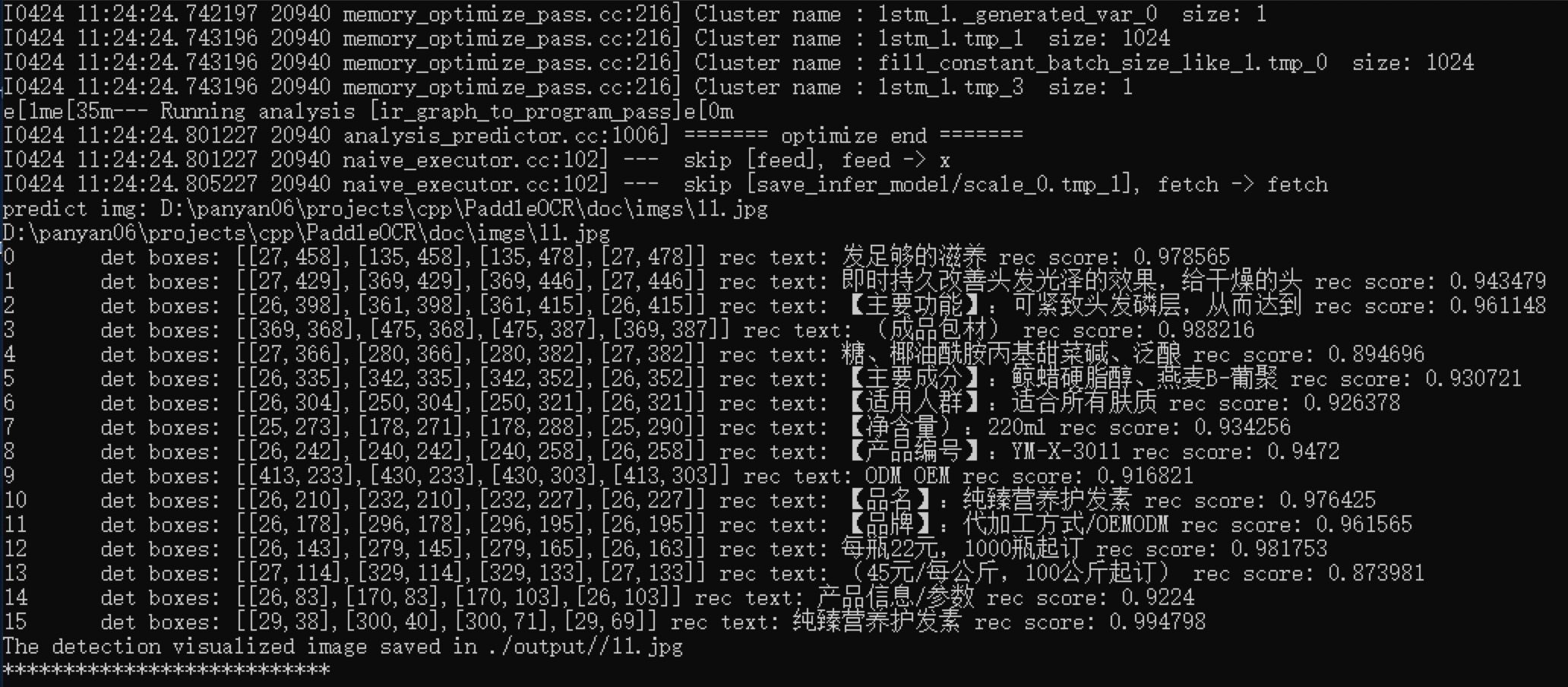

Sample result:

FAQ

- Issue: The application fails to start with error (0xc0000142), and the cmd output shows You are using Paddle compiled with TensorRT, but TensorRT dynamic library is not found.
- Solution: Copy all .dll files from the lib folder of the TensorRT directory into the release directory and run the program again.
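The TensorRT fix above can be automated with a short C++17 sketch. It assumes, as the solution states, that the TensorRT DLLs sit directly in the lib subfolder of the TensorRT directory, and that the release directory is build/Release. CopyTensorRtDlls and IsDll are hypothetical names for illustration.

```cpp
#include <cassert>
#include <cctype>
#include <cstddef>
#include <filesystem>
#include <string>

namespace fs = std::filesystem;

// True if `p` has a ".dll" extension, compared case-insensitively because
// Windows file names such as NVINFER.DLL are not case-sensitive.
bool IsDll(const fs::path& p) {
    std::string ext = p.extension().string();
    for (char& ch : ext) {
        ch = static_cast<char>(std::tolower(static_cast<unsigned char>(ch)));
    }
    return ext == ".dll";
}

// Copies every .dll found directly in <tensorrt_dir>/lib into `release_dir`,
// creating `release_dir` if needed. Returns the number of files copied.
// fs::directory_iterator throws fs::filesystem_error if <tensorrt_dir>/lib is missing.
std::size_t CopyTensorRtDlls(const fs::path& tensorrt_dir, const fs::path& release_dir) {
    assert(!tensorrt_dir.empty());
    fs::create_directories(release_dir);
    std::size_t copied = 0;
    for (const fs::directory_entry& entry : fs::directory_iterator(tensorrt_dir / "lib")) {
        if (entry.is_regular_file() && IsDll(entry.path())) {
            fs::copy_file(entry.path(), release_dir / entry.path().filename(),
                          fs::copy_options::overwrite_existing);
            ++copied;
        }
    }
    return copied;
}
```

For example, CopyTensorRtDlls(R"(D:\TensorRT-8.0.1.6)", R"(D:\projects\cpp\PaddleOCR\deploy\cpp_infer\build\Release)") copies the TensorRT runtime DLLs next to ppocr.exe; a return value of 0 suggests the lib folder path is wrong.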