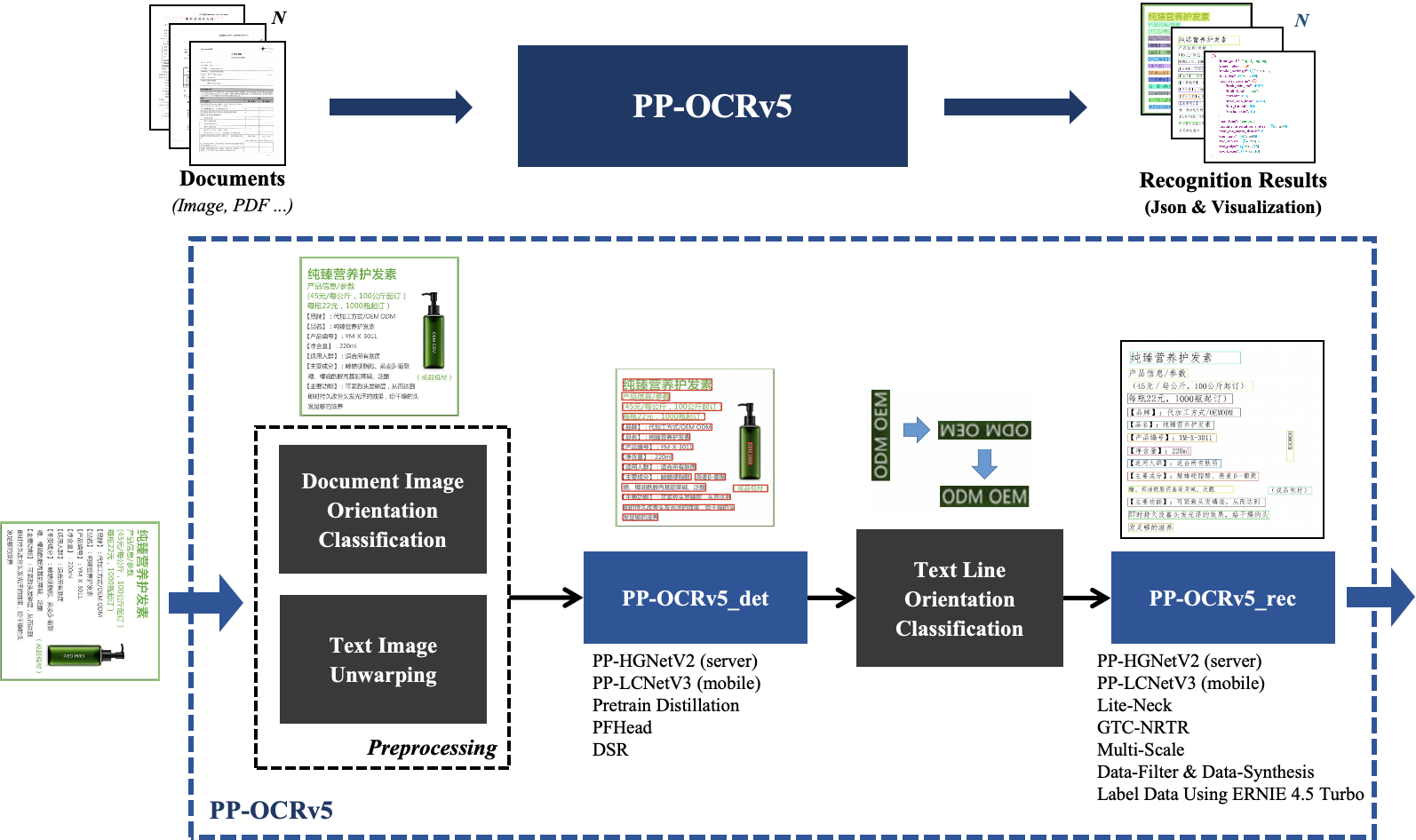

Introduction to PP-OCRv5

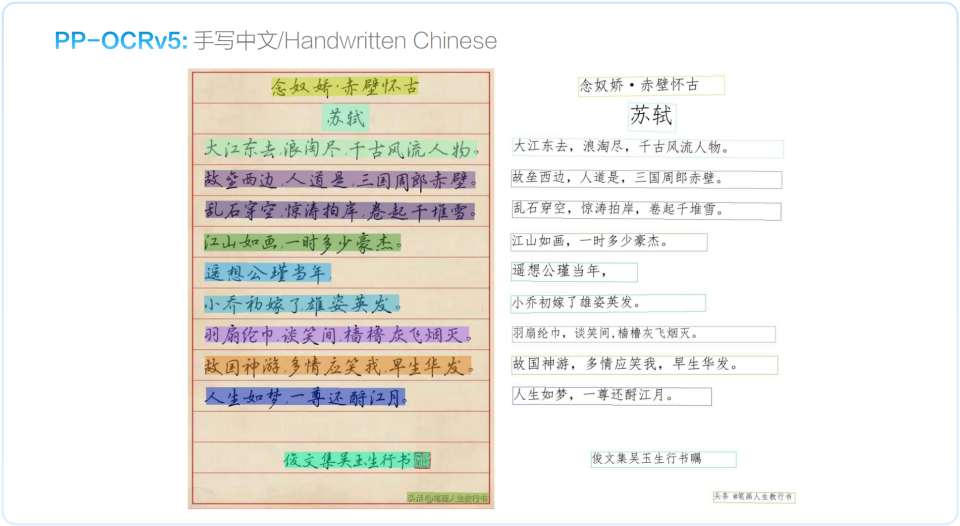

PP-OCRv5 is the new generation of the PP-OCR text recognition solution, focusing on multi-scenario and multi-text-type recognition. In terms of text types, PP-OCRv5 supports five mainstream types: Simplified Chinese, Chinese Pinyin, Traditional Chinese, English, and Japanese. In terms of scenarios, PP-OCRv5 improves recognition in challenging cases such as complex Chinese and English handwriting, vertical text, and uncommon characters. On internal evaluation sets covering multiple complex scenarios, PP-OCRv5 achieves a 13-percentage-point end-to-end improvement over PP-OCRv4.

Key Metrics

1. Text Detection Metrics

| Model | Handwritten Chinese | Handwritten English | Printed Chinese | Printed English | Traditional Chinese | Ancient Text | Japanese | General Scenario | Pinyin | Rotation | Distortion | Artistic Text | Average |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| PP-OCRv5_server_det | 0.803 | 0.841 | 0.945 | 0.917 | 0.815 | 0.676 | 0.772 | 0.797 | 0.671 | 0.8 | 0.876 | 0.673 | 0.827 |
| PP-OCRv4_server_det | 0.706 | 0.249 | 0.888 | 0.690 | 0.759 | 0.473 | 0.685 | 0.715 | 0.542 | 0.366 | 0.775 | 0.583 | 0.662 |
| PP-OCRv5_mobile_det | 0.744 | 0.777 | 0.905 | 0.910 | 0.823 | 0.581 | 0.727 | 0.721 | 0.575 | 0.647 | 0.827 | 0.525 | 0.770 |
| PP-OCRv4_mobile_det | 0.583 | 0.369 | 0.872 | 0.773 | 0.663 | 0.231 | 0.634 | 0.710 | 0.430 | 0.299 | 0.715 | 0.549 | 0.624 |

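Compared with PP-OCRv4, PP-OCRv5 improves detection accuracy in almost every scenario, with the largest gains on handwriting (especially handwritten English), rotated text, and ancient text. The only regression is PP-OCRv5_mobile_det on artistic text (0.525 vs. 0.549 for PP-OCRv4_mobile_det).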
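The per-category comparison can be checked directly against the detection table. The sketch below copies the table's scores (leaving out the "Average" column) and computes the change from PP-OCRv4 to PP-OCRv5 for each category; the helper names `deltas` and `regressions` are illustrative and not part of PaddleOCR.

```python
from __future__ import annotations

CATEGORIES = [
    "Handwritten Chinese", "Handwritten English", "Printed Chinese",
    "Printed English", "Traditional Chinese", "Ancient Text", "Japanese",
    "General Scenario", "Pinyin", "Rotation", "Distortion", "Artistic Text",
]

# Per-category detection scores copied from the table above
# (the "Average" column is left out).
DET_SCORES = {
    "PP-OCRv5_server_det": [0.803, 0.841, 0.945, 0.917, 0.815, 0.676,
                            0.772, 0.797, 0.671, 0.8, 0.876, 0.673],
    "PP-OCRv4_server_det": [0.706, 0.249, 0.888, 0.690, 0.759, 0.473,
                            0.685, 0.715, 0.542, 0.366, 0.775, 0.583],
    "PP-OCRv5_mobile_det": [0.744, 0.777, 0.905, 0.910, 0.823, 0.581,
                            0.727, 0.721, 0.575, 0.647, 0.827, 0.525],
    "PP-OCRv4_mobile_det": [0.583, 0.369, 0.872, 0.773, 0.663, 0.231,
                            0.634, 0.710, 0.430, 0.299, 0.715, 0.549],
}


def deltas(new: str, old: str) -> dict[str, float]:
    """Score change per category (new minus old), rounded to 3 decimals."""
    return {
        cat: round(n - o, 3)
        for cat, n, o in zip(CATEGORIES, DET_SCORES[new], DET_SCORES[old])
    }


def regressions(new: str, old: str) -> list[str]:
    """Categories in which the newer model scores lower than the older one."""
    return [cat for cat, d in deltas(new, old).items() if d < 0]


if __name__ == "__main__":
    for size in ("server", "mobile"):
        new, old = f"PP-OCRv5_{size}_det", f"PP-OCRv4_{size}_det"
        d = deltas(new, old)
        best = max(d, key=d.get)
        print(f"{size}: largest gain in {best} (+{d[best]}), "
              f"regressions: {regressions(new, old)}")
```

For both model sizes the largest gain is on handwritten English, and the only regression is the mobile model on artistic text.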

2. Text Recognition Metrics

| Model | Handwritten Chinese | Handwritten English | Printed Chinese | Printed English | Traditional Chinese | Ancient Text | Japanese | Confusable Characters | General Scenario | Pinyin | Vertical Text | Artistic Text | Weighted Average |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| PP-OCRv5_server_rec | 0.5807 | 0.5806 | 0.9013 | 0.8679 | 0.7472 | 0.6039 | 0.7372 | 0.5946 | 0.8384 | 0.7435 | 0.9314 | 0.6397 | 0.8401 |
| PP-OCRv4_server_rec | 0.3626 | 0.2661 | 0.8486 | 0.6677 | 0.4097 | 0.3080 | 0.4623 | 0.5028 | 0.8362 | 0.2694 | 0.5455 | 0.5892 | 0.5735 |
| PP-OCRv5_mobile_rec | 0.4166 | 0.4944 | 0.8605 | 0.8753 | 0.7199 | 0.5786 | 0.7577 | 0.5570 | 0.7703 | 0.7248 | 0.8089 | 0.5398 | 0.8015 |
| PP-OCRv4_mobile_rec | 0.2980 | 0.2550 | 0.8398 | 0.6598 | 0.3218 | 0.2593 | 0.4724 | 0.4599 | 0.8106 | 0.2593 | 0.5924 | 0.5555 | 0.5301 |

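A single model covers multiple languages and text types. Its weighted-average recognition accuracy is roughly 27 percentage points higher than PP-OCRv4 for both the server variant (0.8401 vs. 0.5735) and the mobile variant (0.8015 vs. 0.5301), and it is significantly ahead of mainstream open-source solutions. The only per-category regressions are PP-OCRv5_mobile_rec on the General Scenario and Artistic Text sets.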
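The recognition figures can be summarized the same way. This sketch copies the table's per-category accuracies and weighted averages, then reports the weighted-average gain and any categories where PP-OCRv5 scores lower than PP-OCRv4; `summarize` is a hypothetical helper for illustration only.

```python
from __future__ import annotations

CATEGORIES = [
    "Handwritten Chinese", "Handwritten English", "Printed Chinese",
    "Printed English", "Traditional Chinese", "Ancient Text", "Japanese",
    "Confusable Characters", "General Scenario", "Pinyin", "Vertical Text",
    "Artistic Text",
]

# (per-category accuracy, weighted average) copied from the table above.
REC_SCORES = {
    "PP-OCRv5_server_rec": ([0.5807, 0.5806, 0.9013, 0.8679, 0.7472, 0.6039,
                             0.7372, 0.5946, 0.8384, 0.7435, 0.9314, 0.6397], 0.8401),
    "PP-OCRv4_server_rec": ([0.3626, 0.2661, 0.8486, 0.6677, 0.4097, 0.3080,
                             0.4623, 0.5028, 0.8362, 0.2694, 0.5455, 0.5892], 0.5735),
    "PP-OCRv5_mobile_rec": ([0.4166, 0.4944, 0.8605, 0.8753, 0.7199, 0.5786,
                             0.7577, 0.5570, 0.7703, 0.7248, 0.8089, 0.5398], 0.8015),
    "PP-OCRv4_mobile_rec": ([0.2980, 0.2550, 0.8398, 0.6598, 0.3218, 0.2593,
                             0.4724, 0.4599, 0.8106, 0.2593, 0.5924, 0.5555], 0.5301),
}


def summarize(new: str, old: str) -> dict[str, object]:
    """Weighted-average gain and the categories where `new` is worse than `old`."""
    new_scores, new_avg = REC_SCORES[new]
    old_scores, old_avg = REC_SCORES[old]
    return {
        "weighted_gain": round(new_avg - old_avg, 4),
        "regressions": [
            cat for cat, n, o in zip(CATEGORIES, new_scores, old_scores) if n < o
        ],
    }


if __name__ == "__main__":
    for size in ("server", "mobile"):
        print(size, summarize(f"PP-OCRv5_{size}_rec", f"PP-OCRv4_{size}_rec"))
```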

PP-OCRv5 Demo Examples

Reference Data for Inference Performance

Test Environment:

- NVIDIA Tesla V100

- Intel Xeon Gold 6271C

- PaddlePaddle 3.0.0

Tests were run on 200 images, including both general and document images. Images are read from disk during testing, so image reading and other associated overhead are included in the total time. If the images are preloaded into memory, the average time per image drops by approximately 25 ms.
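The difference between timing with and without disk reads can be illustrated with a small harness. This is a minimal sketch, not the script used for these benchmarks: `predict` is a hypothetical stand-in for the OCR call and receives raw file bytes; a real pipeline would decode the bytes into an image first.

```python
from __future__ import annotations

import time
from pathlib import Path
from typing import Callable, Sequence


def average_time_per_image(
    paths: Sequence[Path],
    predict: Callable[[bytes], object],
    preload: bool = False,
) -> float:
    """Return the mean wall-clock seconds per image.

    preload=False: each file is read inside the timed loop, so disk I/O
    counts toward the total (as in the methodology described above).
    preload=True: all files are read before timing starts, so only
    `predict` is measured.
    """
    if not paths:
        raise ValueError("need at least one image")
    cached = [p.read_bytes() for p in paths] if preload else None
    start = time.perf_counter()
    for i, path in enumerate(paths):
        data = cached[i] if cached is not None else path.read_bytes()
        predict(data)
    return (time.perf_counter() - start) / len(paths)
```

Running it twice on the same image set, once with `preload=True`, gives an estimate of the per-image I/O share on a particular machine.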

Unless otherwise specified:

- PP-OCRv5_mobile_det and PP-OCRv5_mobile_rec models are used.

- Document orientation classification, image correction, and text line orientation classification are not used.

- `text_det_limit_type` is set to `"min"` and `text_det_limit_side_len` is set to `736`.

1. Comparison of Inference Performance Between PP-OCRv5 and PP-OCRv4

| Config | Description |
|---|---|
| v5_mobile | Uses PP-OCRv5_mobile_det and PP-OCRv5_mobile_rec models. |
| v4_mobile | Uses PP-OCRv4_mobile_det and PP-OCRv4_mobile_rec models. |
| v5_server | Uses PP-OCRv5_server_det and PP-OCRv5_server_rec models. |
| v4_server | Uses PP-OCRv4_server_det and PP-OCRv4_server_rec models. |
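Each configuration differs only in the detection and recognition model names, with the other settings held at the defaults listed above. The sketch below turns a configuration name into keyword arguments. The parameter names (`text_detection_model_name`, `use_doc_unwarping`, `enable_hpi`, and so on) are assumed from the PaddleOCR 3.x `PaddleOCR` constructor and should be checked against the installed version; `pipeline_kwargs` itself is a hypothetical helper.

```python
from __future__ import annotations

# Benchmark configurations from the table above: (detection model, recognition model).
CONFIGS = {
    "v5_mobile": ("PP-OCRv5_mobile_det", "PP-OCRv5_mobile_rec"),
    "v4_mobile": ("PP-OCRv4_mobile_det", "PP-OCRv4_mobile_rec"),
    "v5_server": ("PP-OCRv5_server_det", "PP-OCRv5_server_rec"),
    "v4_server": ("PP-OCRv4_server_det", "PP-OCRv4_server_rec"),
}


def pipeline_kwargs(config: str, enable_hpi: bool = False) -> dict[str, object]:
    """Build keyword arguments for one benchmark configuration.

    Parameter names are an assumption based on the PaddleOCR 3.x API.
    """
    det, rec = CONFIGS[config]
    return {
        "text_detection_model_name": det,
        "text_recognition_model_name": rec,
        # Auxiliary features are disabled in the default benchmark setup.
        "use_doc_orientation_classify": False,
        "use_doc_unwarping": False,
        "use_textline_orientation": False,
        # Default detection input scaling used in these benchmarks.
        "text_det_limit_type": "min",
        "text_det_limit_side_len": 736,
        # Toggles high-performance inference for the "with HPI" tables.
        "enable_hpi": enable_hpi,
    }
```

Under that assumption, a pipeline for one row would be created with `PaddleOCR(**pipeline_kwargs("v5_server"))`.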

GPU, without high-performance inference:

| Config | Avg Time/Image (s) | Avg Chars/sec | Avg CPU Usage (%) | Peak RAM (MB) | Avg RAM (MB) | Peak VRAM (MB) | Avg VRAM (MB) |
|---|---|---|---|---|---|---|---|
| v5_mobile | 0.56 | 1162 | 106.02 | 1576.43 | 1420.83 | 4342.00 | 3258.95 |
| v4_mobile | 0.27 | 2246 | 111.20 | 1392.22 | 1318.76 | 1304.00 | 1166.46 |
| v5_server | 0.70 | 929 | 105.31 | 1634.85 | 1428.55 | 5402.00 | 4685.13 |
| v4_server | 0.44 | 1418 | 106.96 | 1455.34 | 1346.95 | 6760.00 | 5817.46 |

GPU, with high-performance inference:

| Config | Avg Time/Image (s) | Avg Chars/sec | Avg CPU Usage (%) | Peak RAM (MB) | Avg RAM (MB) | Peak VRAM (MB) | Avg VRAM (MB) |
|---|---|---|---|---|---|---|---|
| v5_mobile | 0.50 | 1301 | 106.50 | 1338.12 | 1155.86 | 4112.00 | 3536.36 |
| v4_mobile | 0.21 | 2887 | 114.09 | 1113.27 | 1054.46 | 2072.00 | 1840.59 |
| v5_server | 0.60 | 1084 | 105.73 | 1980.73 | 1776.20 | 12150.00 | 11849.40 |
| v4_server | 0.36 | 1687 | 104.15 | 1186.42 | 1065.67 | 13058.00 | 12679.00 |

CPU, without high-performance inference:

| Config | Avg Time/Image (s) | Avg Chars/sec | Avg CPU Usage (%) | Peak RAM (MB) | Avg RAM (MB) |
|---|---|---|---|---|---|
| v5_mobile | 1.43 | 455 | 798.93 | 11695.40 | 6829.09 |
| v4_mobile | 1.09 | 556 | 813.16 | 11996.30 | 6834.25 |
| v5_server | 3.79 | 172 | 799.24 | 50216.00 | 27902.40 |
| v4_server | 4.22 | 148 | 803.74 | 51428.70 | 28593.60 |

CPU, with high-performance inference:

| Config | Avg Time/Image (s) | Avg Chars/sec | Avg CPU Usage (%) | Peak RAM (MB) | Avg RAM (MB) |
|---|---|---|---|---|---|
| v5_mobile | 1.14 | 571 | 339.68 | 3245.17 | 2560.55 |
| v4_mobile | 0.68 | 892 | 443.00 | 3057.38 | 2329.44 |
| v5_server | 3.56 | 183 | 797.03 | 45664.70 | 26905.90 |
| v4_server | 4.22 | 148 | 803.74 | 51428.70 | 28593.60 |

Note: The PP-OCRv5 recognition models use a larger dictionary, which increases inference time. As a result, PP-OCRv5 is slower than PP-OCRv4 in most configurations; the exception is the server models on CPU, where PP-OCRv5 is faster.
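The size of the slowdown varies by setting. This sketch copies the average time per image from the four tables and divides the PP-OCRv5 figure by the PP-OCRv4 figure, so a ratio above 1 means PP-OCRv5 is slower; the setting keys (`gpu`, `gpu_hpi`, `cpu`, `cpu_hpi`) are labels chosen for illustration.

```python
from __future__ import annotations

# Average seconds per image, copied from the four tables above.
AVG_TIME = {
    "gpu":     {"v5_mobile": 0.56, "v4_mobile": 0.27, "v5_server": 0.70, "v4_server": 0.44},
    "gpu_hpi": {"v5_mobile": 0.50, "v4_mobile": 0.21, "v5_server": 0.60, "v4_server": 0.36},
    "cpu":     {"v5_mobile": 1.43, "v4_mobile": 1.09, "v5_server": 3.79, "v4_server": 4.22},
    "cpu_hpi": {"v5_mobile": 1.14, "v4_mobile": 0.68, "v5_server": 3.56, "v4_server": 4.22},
}


def slowdown(setting: str, size: str) -> float:
    """PP-OCRv5 time per image divided by PP-OCRv4 time (>1 means v5 is slower)."""
    times = AVG_TIME[setting]
    return round(times[f"v5_{size}"] / times[f"v4_{size}"], 2)


if __name__ == "__main__":
    for setting in AVG_TIME:
        for size in ("mobile", "server"):
            print(f"{setting:8s} {size:6s} {slowdown(setting, size):.2f}x")
```

On GPU the mobile pipeline shows the largest ratio, while both CPU server rows come out below 1.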

2. Impact of Auxiliary Features on PP-OCRv5 Inference Performance

| Config | Description |
|---|---|
| base | No document orientation classification, no image correction, no text line orientation classification. |
| with_textline | Includes text line orientation classification only. |
| with_all | Includes document orientation classification, image correction, and text line orientation classification. |
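Each auxiliary configuration corresponds to switching individual pipeline stages on or off. The mapping below assumes the PaddleOCR 3.x flag names `use_doc_orientation_classify`, `use_doc_unwarping` (image correction), and `use_textline_orientation`; verify them against the installed version. `aux_kwargs` and `enabled_stages` are hypothetical helpers.

```python
from __future__ import annotations

# Flag values per configuration in the table above, in FLAG_NAMES order.
AUX_CONFIGS = {
    "base": (False, False, False),
    "with_textline": (False, False, True),
    "with_all": (True, True, True),
}
FLAG_NAMES = (
    "use_doc_orientation_classify",  # document orientation classification
    "use_doc_unwarping",             # image correction (unwarping)
    "use_textline_orientation",      # text line orientation classification
)


def aux_kwargs(config: str) -> dict[str, bool]:
    """Map a configuration name from the table to auxiliary-feature flags."""
    return dict(zip(FLAG_NAMES, AUX_CONFIGS[config]))


def enabled_stages(config: str) -> list[str]:
    """Names of the flags switched on for a configuration."""
    return [name for name, on in aux_kwargs(config).items() if on]
```

Keeping the flags explicit makes it easy to enable only the stages a given input set actually needs.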

GPU, without high-performance inference:

| Config | Avg Time/Image (s) | Avg Chars/sec | Avg CPU Usage (%) | Peak RAM (MB) | Avg RAM (MB) | Peak VRAM (MB) | Avg VRAM (MB) |
|---|---|---|---|---|---|---|---|
| base | 0.56 | 1162 | 106.02 | 1576.43 | 1420.83 | 4342.00 | 3258.95 |
| with_textline | 0.59 | 1104 | 105.58 | 1765.64 | 1478.53 | 19.48 | 4350.00 |
| with_all | 1.02 | 600 | 104.92 | 1924.23 | 1628.50 | 10.96 | 2632.00 |

CPU, without high-performance inference:

| Config | Avg Time/Image (s) | Avg Chars/sec | Avg CPU Usage (%) | Peak RAM (MB) | Avg RAM (MB) |
|---|---|---|---|---|---|
| base | 1.43 | 455 | 798.93 | 11695.40 | 6829.09 |
| with_textline | 1.50 | 434 | 799.47 | 12007.20 | 6882.22 |
| with_all | 1.93 | 316 | 646.49 | 11759.60 | 6940.54 |

Note: Auxiliary features such as image unwarping can affect accuracy, and enabling more of them does not necessarily improve results. They also add overhead: with all three enabled, the average GPU time per image rises from 0.56 s to 1.02 s.

3. Impact of Input Scaling Strategy in Text Detection Module on PP-OCRv5 Inference Performance

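| Config | Description |
|---|---|
| mobile_min_1280 | PP-OCRv5_mobile models; `text_det_limit_type="min"`, `text_det_limit_side_len=1280`. |
| mobile_min_736 | PP-OCRv5_mobile models; `text_det_limit_type="min"`, `text_det_limit_side_len=736` (the default). |
| mobile_max_960 | PP-OCRv5_mobile models; `text_det_limit_type="max"`, `text_det_limit_side_len=960`. |
| mobile_max_640 | PP-OCRv5_mobile models; `text_det_limit_type="max"`, `text_det_limit_side_len=640`. |
| server_min_1280 | PP-OCRv5_server models; `text_det_limit_type="min"`, `text_det_limit_side_len=1280`. |
| server_min_736 | PP-OCRv5_server models; `text_det_limit_type="min"`, `text_det_limit_side_len=736` (the default). |
| server_max_960 | PP-OCRv5_server models; `text_det_limit_type="max"`, `text_det_limit_side_len=960`. |
| server_max_640 | PP-OCRv5_server models; `text_det_limit_type="max"`, `text_det_limit_side_len=640`. |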
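The two limit types scale the detector input in opposite directions: `min` enlarges small images until the shorter side reaches the limit, and `max` shrinks large images until the longer side fits within it. The sketch below is modeled on the resize rule in PaddleOCR's `DetResizeForTest` preprocessing (scale, then round each side to a multiple of 32). It is an approximation, and the pipeline in a given release may apply further constraints.

```python
from __future__ import annotations


def det_input_size(h: int, w: int, limit_type: str, limit_side_len: int) -> tuple[int, int]:
    """Approximate detector input size (height, width) for an h x w image."""
    if limit_type == "min":
        # Upscale only if the shorter side is below the limit.
        short = min(h, w)
        ratio = limit_side_len / short if short < limit_side_len else 1.0
    elif limit_type == "max":
        # Downscale only if the longer side exceeds the limit.
        long = max(h, w)
        ratio = limit_side_len / long if long > limit_side_len else 1.0
    else:
        raise ValueError(f"unsupported limit_type: {limit_type!r}")
    # Round each side to a multiple of 32, never below 32.
    new_h = max(int(round(h * ratio / 32) * 32), 32)
    new_w = max(int(round(w * ratio / 32) * 32), 32)
    return new_h, new_w


if __name__ == "__main__":
    for limit_type, side in (("min", 1280), ("min", 736), ("max", 960), ("max", 640)):
        print(limit_type, side, det_input_size(1080, 1920, limit_type, side))
```

For a 1080 x 1920 image this gives 1088 x 1920 under the default `min`/736 setting but only 352 x 640 under `max`/640, which is consistent with the `max` configurations being the fastest in the tables below.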

GPU, without high-performance inference:

| Config | Avg Time/Image (s) | Avg Chars/sec | Avg CPU Usage (%) | Peak RAM (MB) | Avg RAM (MB) | Peak VRAM (MB) | Avg VRAM (MB) |
|---|---|---|---|---|---|---|---|
| mobile_min_1280 | 0.61 | 1071 | 109.12 | 1663.71 | 1439.72 | 4202.00 | 3550.32 |
| mobile_min_736 | 0.56 | 1162 | 106.02 | 1576.43 | 1420.83 | 4342.00 | 3258.95 |
| mobile_max_960 | 0.48 | 1313 | 103.49 | 1587.25 | 1395.48 | 2642.00 | 2319.03 |
| mobile_max_640 | 0.42 | 1436 | 103.07 | 1651.14 | 1422.62 | 2530.00 | 2149.11 |
| server_min_1280 | 0.82 | 795 | 107.17 | 1678.16 | 1428.94 | 10368.00 | 8320.43 |
| server_min_736 | 0.70 | 929 | 105.31 | 1634.85 | 1428.55 | 5402.00 | 4685.13 |
| server_max_960 | 0.59 | 1073 | 103.03 | 1590.19 | 1383.62 | 2928.00 | 2079.47 |
| server_max_640 | 0.54 | 1099 | 102.63 | 1602.09 | 1416.49 | 3152.00 | 2737.81 |

CPU, without high-performance inference:

| Config | Avg Time/Image (s) | Avg Chars/sec | Avg CPU Usage (%) | Peak RAM (MB) | Avg RAM (MB) |
|---|---|---|---|---|---|
| mobile_min_1280 | 1.64 | 398 | 799.45 | 12344.10 | 7100.60 |
| mobile_min_736 | 1.43 | 455 | 798.93 | 11695.40 | 6829.09 |
| mobile_max_960 | 1.21 | 521 | 800.13 | 11099.10 | 6369.49 |
| mobile_max_640 | 1.01 | 597 | 802.52 | 9585.48 | 5573.52 |
| server_min_1280 | 4.48 | 145 | 800.49 | 50683.10 | 28273.30 |
| server_min_736 | 3.79 | 172 | 799.24 | 50216.00 | 27902.40 |
| server_max_960 | 2.67 | 237 | 797.63 | 49362.50 | 26075.60 |
| server_max_640 | 2.36 | 251 | 795.18 | 45656.10 | 24900.80 |

Deployment and Secondary Development

- Multiple System Support: Compatible with mainstream operating systems, including Windows, Linux, and macOS.

- Multiple Hardware Support: In addition to NVIDIA GPUs, supports inference and deployment on Intel CPUs, Kunlun chips, Ascend, and other new hardware.

- High-Performance Inference Plugin: Combining the pipeline with the high-performance inference plugin is recommended to further improve inference speed. See the High-Performance Inference Guide for details.

- Service Deployment: Supports highly stable service deployment solutions. See the Service Deployment Guide for details.

- Secondary Development Capability: Supports training on custom datasets, dictionary extension, and model fine-tuning. For example, to add Korean recognition, you can extend the dictionary and fine-tune the model, then integrate it seamlessly into existing pipelines. See the Text Detection Module Usage Tutorial and the Text Recognition Module Usage Tutorial for details.
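Dictionary extension is the first step of the Korean example above. The sketch assumes a plain-text character dictionary with one character per line, where each line's position corresponds to a recognition class; `extend_char_dict` and the file names are hypothetical. Existing entries keep their order so their class indices stay unchanged, and new characters are appended once each. The recognition head still has to be resized and fine-tuned for the new classes, as described in the Text Recognition Module Usage Tutorial.

```python
from __future__ import annotations

from pathlib import Path
from typing import Iterable


def extend_char_dict(src: Path, dst: Path, new_chars: Iterable[str]) -> int:
    """Write `dst` as `src` plus any characters it does not yet contain.

    Existing lines keep their order (and therefore their class indices);
    new characters are appended once each. Returns the number added.
    """
    existing = src.read_text(encoding="utf-8").splitlines()
    seen = set(existing)
    added = []
    for ch in new_chars:
        if ch not in seen:
            seen.add(ch)
            added.append(ch)
    # One entry per line, with a trailing newline after the last entry.
    dst.write_text("".join(f"{c}\n" for c in existing + added), encoding="utf-8")
    return len(added)
```

For instance, `extend_char_dict(Path("dict.txt"), Path("dict_ko.txt"), "가나다")` would append whichever of those three Hangul syllables are not already present.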