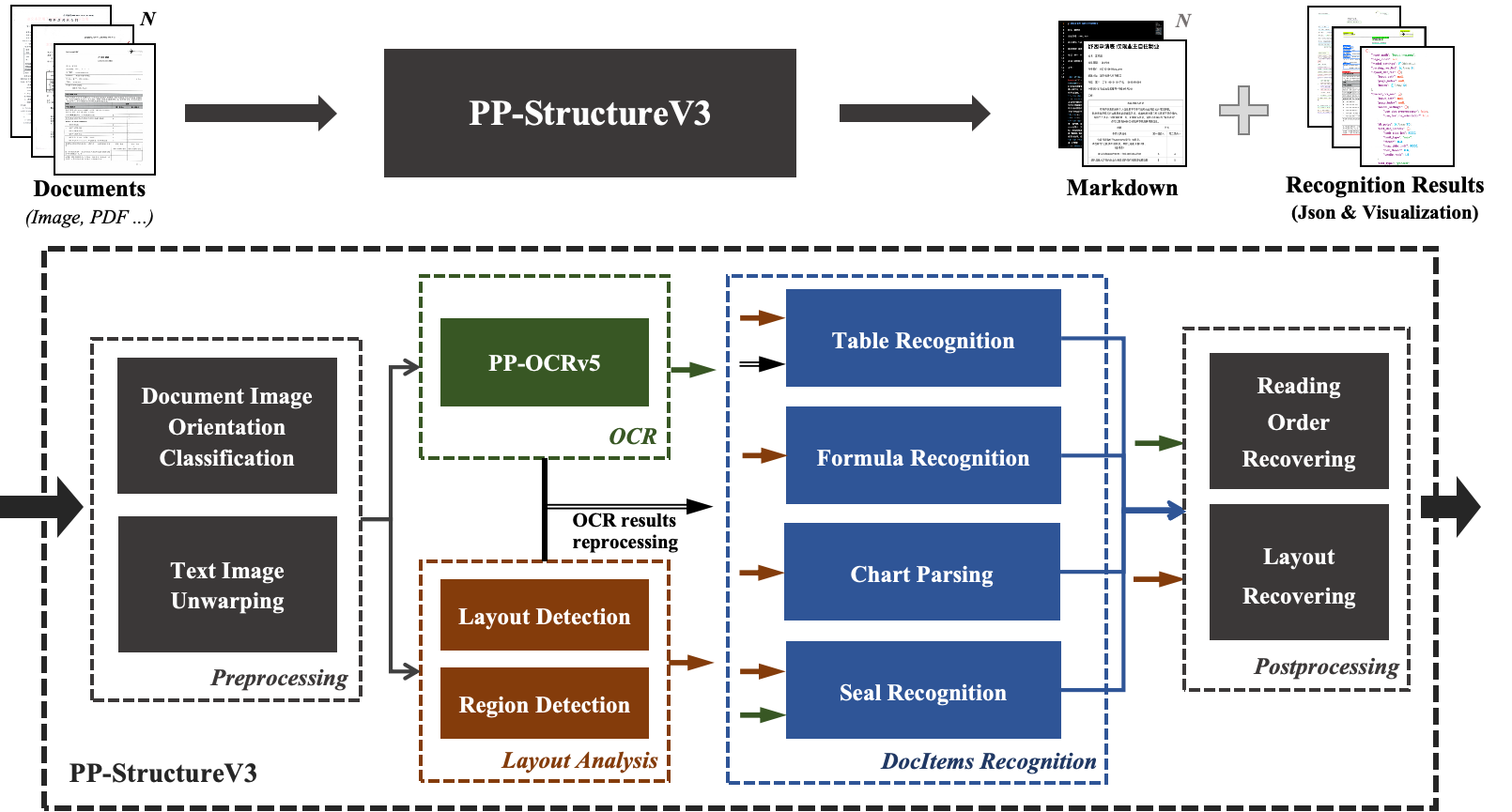

Introduction to PP-StructureV3

The PP-StructureV3 pipeline builds on the Layout Parsing v1 pipeline, with stronger layout detection, table recognition, and formula recognition. It adds chart understanding, reading-order restoration, and conversion of results to Markdown files. It performs well across diverse document data (see Key Metrics) and can handle more complex documents. The pipeline also offers flexible serving deployment that can be called from multiple programming languages on a variety of hardware. It supports secondary development as well: you can train and optimize models on your own dataset, and the trained models integrate seamlessly into the pipeline.

Key Metrics

| Method Type | Methods | Overall Edit↓ (EN) | Overall Edit↓ (ZH) | Text Edit↓ (EN) | Text Edit↓ (ZH) | Formula Edit↓ (EN) | Formula Edit↓ (ZH) | Table Edit↓ (EN) | Table Edit↓ (ZH) | Read Order Edit↓ (EN) | Read Order Edit↓ (ZH) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Pipeline Tools | PP-StructureV3 | 0.145 | 0.206 | 0.058 | 0.088 | 0.295 | 0.535 | 0.159 | 0.109 | 0.069 | 0.091 |
| Pipeline Tools | MinerU-0.9.3 | 0.15 | 0.357 | 0.061 | 0.215 | 0.278 | 0.577 | 0.18 | 0.344 | 0.079 | 0.292 |
| Pipeline Tools | MinerU-1.3.11 | 0.166 | 0.310 | 0.0826 | 0.2000 | 0.3368 | 0.6236 | 0.1613 | 0.1833 | 0.0834 | 0.2316 |
| Pipeline Tools | Marker-1.2.3 | 0.336 | 0.556 | 0.08 | 0.315 | 0.53 | 0.883 | 0.619 | 0.685 | 0.114 | 0.34 |
| Pipeline Tools | Mathpix | 0.191 | 0.365 | 0.105 | 0.384 | 0.306 | 0.454 | 0.243 | 0.32 | 0.108 | 0.304 |
| Pipeline Tools | Docling-2.14.0 | 0.589 | 0.909 | 0.416 | 0.987 | 0.999 | 1 | 0.627 | 0.81 | 0.313 | 0.837 |
| Pipeline Tools | Pix2Text-1.1.2.3 | 0.32 | 0.528 | 0.138 | 0.356 | 0.276 | 0.611 | 0.584 | 0.645 | 0.281 | 0.499 |
| Pipeline Tools | Unstructured-0.17.2 | 0.586 | 0.716 | 0.198 | 0.481 | 0.999 | 1 | 1 | 0.998 | 0.145 | 0.387 |
| Pipeline Tools | OpenParse-0.7.0 | 0.646 | 0.814 | 0.681 | 0.974 | 0.996 | 1 | 0.284 | 0.639 | 0.595 | 0.641 |
| Expert VLMs | GOT-OCR | 0.287 | 0.411 | 0.189 | 0.315 | 0.36 | 0.528 | 0.459 | 0.52 | 0.141 | 0.28 |
| Expert VLMs | Nougat | 0.452 | 0.973 | 0.365 | 0.998 | 0.488 | 0.941 | 0.572 | 1 | 0.382 | 0.954 |
| Expert VLMs | Mistral OCR | 0.268 | 0.439 | 0.072 | 0.325 | 0.318 | 0.495 | 0.6 | 0.65 | 0.083 | 0.284 |
| Expert VLMs | OLMOCR-sglang | 0.326 | 0.469 | 0.097 | 0.293 | 0.455 | 0.655 | 0.608 | 0.652 | 0.145 | 0.277 |
| Expert VLMs | SmolDocling-256M_transformer | 0.493 | 0.816 | 0.262 | 0.838 | 0.753 | 0.997 | 0.729 | 0.907 | 0.227 | 0.522 |
| General VLMs | Gemini2.0-flash | 0.191 | 0.264 | 0.091 | 0.139 | 0.389 | 0.584 | 0.193 | 0.206 | 0.092 | 0.128 |
| General VLMs | Gemini2.5-Pro | 0.148 | 0.212 | 0.055 | 0.168 | 0.356 | 0.439 | 0.13 | 0.119 | 0.049 | 0.121 |
| General VLMs | GPT4o | 0.233 | 0.399 | 0.144 | 0.409 | 0.425 | 0.606 | 0.234 | 0.329 | 0.128 | 0.251 |
| General VLMs | Qwen2-VL-72B | 0.252 | 0.327 | 0.096 | 0.218 | 0.404 | 0.487 | 0.387 | 0.408 | 0.119 | 0.193 |
| General VLMs | Qwen2.5-VL-72B | 0.214 | 0.261 | 0.092 | 0.18 | 0.315 | 0.434 | 0.341 | 0.262 | 0.106 | 0.168 |
| General VLMs | InternVL2-76B | 0.44 | 0.443 | 0.353 | 0.29 | 0.543 | 0.701 | 0.547 | 0.555 | 0.317 | 0.228 |

The data above comes from:

* OmniDocBench
* OmniDocBench: Benchmarking Diverse PDF Document Parsing with Comprehensive Annotations
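The "Edit↓" columns report edit-distance scores, where lower is better. As a rough illustration of how such a score behaves, here is a minimal sketch of a normalized Levenshtein distance. The normalization by the longer string's length is an assumption made for this example; the benchmark's own evaluation code may preprocess and normalize differently.

```python
def levenshtein(a: str, b: str) -> int:
    """Edit distance counting single-character insertions, deletions, substitutions."""
    if len(a) < len(b):
        a, b = b, a  # iterate over the longer string so each row stays short
    previous = list(range(len(b) + 1))  # distances from the empty prefix of a
    for i, ch_a in enumerate(a, start=1):
        current = [i]
        for j, ch_b in enumerate(b, start=1):
            cost = 0 if ch_a == ch_b else 1
            current.append(min(
                previous[j] + 1,         # delete ch_a
                current[j - 1] + 1,      # insert ch_b
                previous[j - 1] + cost,  # substitute, or keep on a match
            ))
        previous = current
    return previous[-1]


def normalized_edit_distance(prediction: str, reference: str) -> float:
    """Scale the distance into [0, 1]; 0 is a perfect match (assumed normalization)."""
    longest = max(len(prediction), len(reference))
    if longest == 0:
        return 0.0  # two empty strings are identical
    return levenshtein(prediction, reference) / longest


if __name__ == "__main__":
    print(normalized_edit_distance("kitten", "sitting"))
```

Under this definition a score of 1 means the prediction shares nothing usable with the reference, which matches how the values of 1 in the table read.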

End to End Benchmark

The performance of PP-StructureV3 and MinerU under different configurations and GPU environments is as follows.

Requirements:

* Paddle 3.0
* PaddleOCR 3.0.0
* MinerU 1.3.10
* CUDA 11.8
* cuDNN 8.9

Local Inference

Local inference was tested on both V100 and A100 GPUs, evaluating PP-StructureV3 under 6 different configurations. The test data consists of 15 PDF files totaling 925 pages and containing elements such as tables, formulas, seals, and charts.

For the PP-StructureV3 configurations below, refer to PP-OCRv5 for OCR model details, to Formula Recognition for formula recognition model details, and to Text Detection for the max_side_limit setting of the text detection module.

Env: NVIDIA Tesla V100 + Intel Xeon Gold 6271C

| Methods | OCR Models | Formula Recognition Model | Chart Recognition Model | Text detection module max_side_limit | Average time per page (s) | Average CPU (%) | Peak RAM Usage (GB) | Average RAM Usage (GB) | Average GPU (%) | Peak VRAM Usage (GB) | Average VRAM Usage (GB) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| PP-StructureV3 | Server | PP-FormulaNet-L | ✗ | 4096 | 1.77 | 111.4 | 6.7 | 5.2 | 38.9 | 17.0 | 16.5 |
| PP-StructureV3 | Server | PP-FormulaNet-L | ✔ | 4096 | 4.09 | 105.3 | 5.5 | 4.0 | 24.7 | 17.0 | 16.6 |
| PP-StructureV3 | Mobile | PP-FormulaNet-L | ✗ | 4096 | 1.56 | 113.7 | 6.6 | 4.9 | 29.1 | 10.7 | 10.6 |
| PP-StructureV3 | Server | PP-FormulaNet-M | ✗ | 4096 | 1.42 | 112.9 | 6.8 | 5.1 | 38 | 16.0 | 15.5 |
| PP-StructureV3 | Mobile | PP-FormulaNet-M | ✗ | 4096 | 1.15 | 114.8 | 6.5 | 5.0 | 26.1 | 8.4 | 8.3 |
| PP-StructureV3 | Mobile | PP-FormulaNet-M | ✗ | 1200 | 0.99 | 113 | 7.0 | 5.6 | 29.2 | 8.6 | 8.5 |
| MinerU | - | - | - | - | 1.57 | 142.9 | 13.3 | 11.8 | 43.3 | 31.6 | 9.7 |

Env: NVIDIA A100 + Intel Xeon Platinum 8350C

| Methods | OCR Models | Formula Recognition Model | Chart Recognition Model | Text detection module max_side_limit | Average time per page (s) | Average CPU (%) | Peak RAM Usage (GB) | Average RAM Usage (GB) | Average GPU (%) | Peak VRAM Usage (GB) | Average VRAM Usage (GB) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| PP-StructureV3 | Server | PP-FormulaNet-L | ✗ | 4096 | 1.12 | 109.8 | 9.2 | 7.8 | 29.8 | 21.8 | 21.1 |
| PP-StructureV3 | Server | PP-FormulaNet-L | ✔ | 4096 | 2.76 | 103.7 | 9.0 | 7.7 | 24 | 21.8 | 21.1 |
| PP-StructureV3 | Mobile | PP-FormulaNet-L | ✗ | 4096 | 1.04 | 110.7 | 9.3 | 7.8 | 22 | 12.2 | 12.1 |
| PP-StructureV3 | Server | PP-FormulaNet-M | ✗ | 4096 | 0.95 | 111.4 | 9.1 | 7.8 | 28.1 | 21.8 | 21.0 |
| PP-StructureV3 | Mobile | PP-FormulaNet-M | ✗ | 4096 | 0.89 | 112.1 | 9.2 | 7.8 | 18.5 | 11.4 | 11.2 |
| PP-StructureV3 | Mobile | PP-FormulaNet-M | ✗ | 1200 | 0.64 | 113.5 | 10.2 | 8.5 | 23.7 | 11.4 | 11.2 |
| MinerU | - | - | - | - | 1.06 | 168.3 | 18.3 | 16.8 | 27.5 | 76.9 | 14.8 |
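To put the per-page times in context, the following sketch converts a few of them into total wall-clock time for the 925-page test set and into a relative speed ratio. The figures are copied from the two tables above; the configuration labels and helper names are made up for this example, and the totals are simple extrapolations from the reported averages rather than separately measured numbers.

```python
PAGES = 925  # size of the local-inference test set

# Average seconds per page, copied from the V100 and A100 tables above.
SECONDS_PER_PAGE = {
    "V100": {
        "PP-StructureV3 Server/FormulaNet-L/4096": 1.77,
        "PP-StructureV3 Mobile/FormulaNet-M/1200": 0.99,
        "MinerU": 1.57,
    },
    "A100": {
        "PP-StructureV3 Server/FormulaNet-L/4096": 1.12,
        "PP-StructureV3 Mobile/FormulaNet-M/1200": 0.64,
        "MinerU": 1.06,
    },
}


def total_minutes(seconds_per_page: float, pages: int = PAGES) -> float:
    """Extrapolated time to process `pages` pages, in minutes."""
    return seconds_per_page * pages / 60.0


def speed_ratio(baseline: float, candidate: float) -> float:
    """How many times faster `candidate` is than `baseline` (above 1 means faster)."""
    return baseline / candidate


if __name__ == "__main__":
    for gpu, rows in SECONDS_PER_PAGE.items():
        for name, spp in rows.items():
            print(f"{gpu:5s} {name:42s} {total_minutes(spp):6.1f} min")
```

For instance, `speed_ratio(1.06, 0.64)` compares MinerU with the lightest PP-StructureV3 configuration on the A100.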

Serving Inference

The serving inference test uses the NVIDIA A100 + Intel Xeon Platinum 8350C environment. The test data consists of 1500 images containing tables, formulas, seals, charts, and other elements.

| Instances Number | Concurrent Requests Number | Throughput | Average Latency (s) | Success Number/Total Number |
|---|---|---|---|---|
| 4 GPUs × 1 instance/gpu | 4 | 1.69 | 2.36 | 100% |
| 4 GPUs × 4 instances/gpu | 16 | 4.05 | 3.87 | 100% |
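One way to sanity-check the serving figures above is Little's law: in steady state, throughput is roughly the number of requests in flight divided by the mean latency. The sketch below applies that to both rows. It assumes throughput is measured in requests per second, which the table does not state, and the helper name is made up for this example.

```python
def expected_throughput(concurrency: int, mean_latency_s: float) -> float:
    """Little's law estimate: requests in flight divided by mean latency."""
    return concurrency / mean_latency_s


# (label, concurrent requests, average latency in s, reported throughput)
ROWS = [
    ("4 GPUs x 1 instance/gpu", 4, 2.36, 1.69),
    ("4 GPUs x 4 instances/gpu", 16, 3.87, 4.05),
]

if __name__ == "__main__":
    for label, concurrency, latency, reported in ROWS:
        estimate = expected_throughput(concurrency, latency)
        gap = abs(estimate - reported) / reported  # relative difference
        print(f"{label}: estimated {estimate:.2f}, reported {reported:.2f}, gap {gap:.1%}")
```

Both estimates land within a few percent of the reported values, which suggests the client kept all concurrent slots busy for most of the run.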

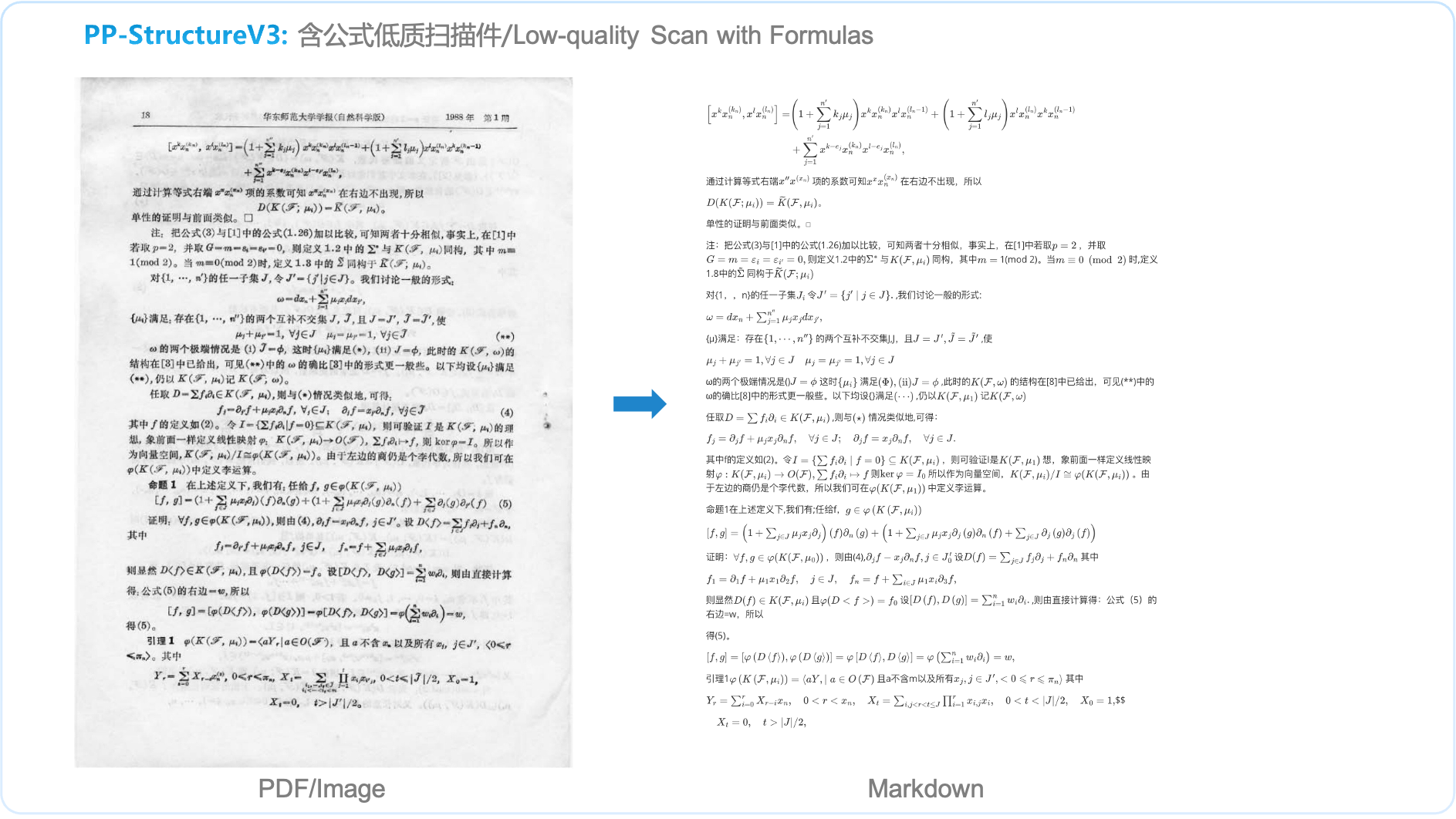

PP-StructureV3 Demo

FAQ

- What is the default configuration? How can I get higher accuracy, faster speed, or lower GPU memory usage?

When using the mobile OCR models with PP-FormulaNet_plus-M and the text detection maximum side length set to 1200, setting use_chart_recognition to False, so that the chart recognition model is not loaded, reduces GPU memory usage.

On the V100, peak and average GPU memory drop from 8776.0 MB and 8680.8 MB to 6118.0 MB and 6016.7 MB, respectively; on the A100, they drop from 11716.0 MB and 11453.9 MB to 9850.0 MB and 9593.5 MB, respectively.
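The absolute and relative savings implied by these figures can be worked out directly. The sketch below uses only the numbers quoted above; the dictionary layout and function name are illustrative.

```python
# (before, after) GPU memory in MB with and without the chart recognition model,
# copied from the figures quoted above.
MEASUREMENTS_MB = {
    ("V100", "peak"): (8776.0, 6118.0),
    ("V100", "average"): (8680.8, 6016.7),
    ("A100", "peak"): (11716.0, 9850.0),
    ("A100", "average"): (11453.9, 9593.5),
}


def saving(before_mb: float, after_mb: float) -> tuple[float, float]:
    """Return (MB saved, percent saved relative to the starting value)."""
    saved = before_mb - after_mb
    return saved, 100.0 * saved / before_mb


if __name__ == "__main__":
    for (gpu, kind), (before, after) in MEASUREMENTS_MB.items():
        saved, percent = saving(before, after)
        print(f"{gpu} {kind:7s}: {saved:7.1f} MB saved ({percent:.1f}%)")
```

The reduction is close to 30% on the V100 and close to 16% on the A100. In absolute terms the V100 saving is also the larger one, at about 2.6 GB against about 1.8 GB.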

You can use multiple GPUs by setting device to gpu:<no.>,<no.>, for example gpu:0,1,2,3. For multi-process parallel inference, refer to Multi-Process Parallel Inference.
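The settings discussed in this answer could be collected into one set of constructor arguments, roughly as sketched below. Only `use_chart_recognition`, the `device` string format, and the PP-FormulaNet_plus-M model name come from the text above. The other keyword names (`text_detection_model_name`, `text_recognition_model_name`, `formula_recognition_model_name`, `text_det_limit_side_len`) and the PP-OCRv5 mobile model names are assumptions about the PaddleOCR 3.x Python API, so check them against the version you have installed. The two helper functions are hypothetical.

```python
def low_memory_kwargs(gpu_ids=(0,)):
    """Arguments for the lightest configuration described above.

    Keyword names other than use_chart_recognition and device are assumed.
    """
    return {
        "text_detection_model_name": "PP-OCRv5_mobile_det",      # assumed name
        "text_recognition_model_name": "PP-OCRv5_mobile_rec",    # assumed name
        "formula_recognition_model_name": "PP-FormulaNet_plus-M",
        "text_det_limit_side_len": 1200,                          # assumed keyword
        "use_chart_recognition": False,  # skip loading the chart model
        "device": "gpu:" + ",".join(str(i) for i in gpu_ids),
    }


def build_pipeline(gpu_ids=(0,), **overrides):
    """Create the pipeline; paddleocr is imported lazily so that the
    configuration helper can be used without it installed."""
    from paddleocr import PPStructureV3

    kwargs = low_memory_kwargs(gpu_ids)
    kwargs.update(overrides)
    return PPStructureV3(**kwargs)


if __name__ == "__main__":
    print(low_memory_kwargs(gpu_ids=(0, 1, 2, 3)))
```

Keeping the arguments in a plain dictionary makes it easy to log the exact configuration next to any benchmark numbers you collect.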

- About serving deployment

(1) Can the service handle requests concurrently?

With the basic serving deployment solution, the service processes only one request at a time. This solution is intended mainly for rapid verification, for establishing the development chain, or for scenarios that do not require concurrent requests.

With the high-stability serving deployment solution, the service also processes only one request at a time by default, but you can refer to the related docs to adjust the configuration and achieve scaling.

(2) How can I reduce latency and improve throughput?

Use the high-performance inference plugin and deploy multiple instances.
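To check whether such changes actually help in your environment, you can measure throughput and mean latency from the client side. The sketch below is a generic harness and not part of PaddleOCR: `send_request` is a hypothetical callable that you supply (for example, a function that posts one image to your service), and the thread pool size plays the role of the concurrent request count.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from statistics import mean


def measure(send_request, payloads, concurrency):
    """Send every payload with at most `concurrency` requests in flight."""
    payloads = list(payloads)
    if not payloads:
        raise ValueError("payloads must not be empty")

    def timed_call(payload):
        started = time.perf_counter()
        try:
            send_request(payload)
            ok = True
        except Exception:
            ok = False  # count the failure but keep the run going
        return ok, time.perf_counter() - started

    wall_start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        results = list(pool.map(timed_call, payloads))
    wall_seconds = time.perf_counter() - wall_start

    return {
        "throughput": len(results) / wall_seconds,  # requests per second
        "mean_latency": mean(elapsed for _, elapsed in results),
        "success_rate": sum(ok for ok, _ in results) / len(results),
    }


if __name__ == "__main__":
    # Stand-in for a real request: sleep for 10 ms.
    print(measure(lambda _: time.sleep(0.01), range(20), concurrency=4))
```

Running the same payload set before and after enabling the plugin, or with different instance counts, gives a like-for-like comparison.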